How Much Does CoreWeave Cost? GPU Pricing Guide (December 2025)

CoreWeave has carved out a solid reputation in the enterprise GPU cloud space, but its à la carte pricing model creates real headaches when you're trying to budget for AI projects. Many developers are surprised to find that the true cost of running workloads goes well beyond the advertised GPU rates. Meanwhile, simpler alternatives offer the same GPU hardware at much lower prices, without the configuration complexity.

I'll show you the real costs behind CoreWeave's pricing structure, break down what you'll actually pay for common AI workloads, and compare it against more affordable GPU cloud alternatives that won't break your budget.

TLDR:

- CoreWeave charges $2.21/hr for A100 GPUs (80GB) plus separate CPU/RAM costs, usually totaling $3/hr or more for a usable instance.

- The à la carte pricing model requires technical expertise and can lead to unpredictable final costs if you're not careful.

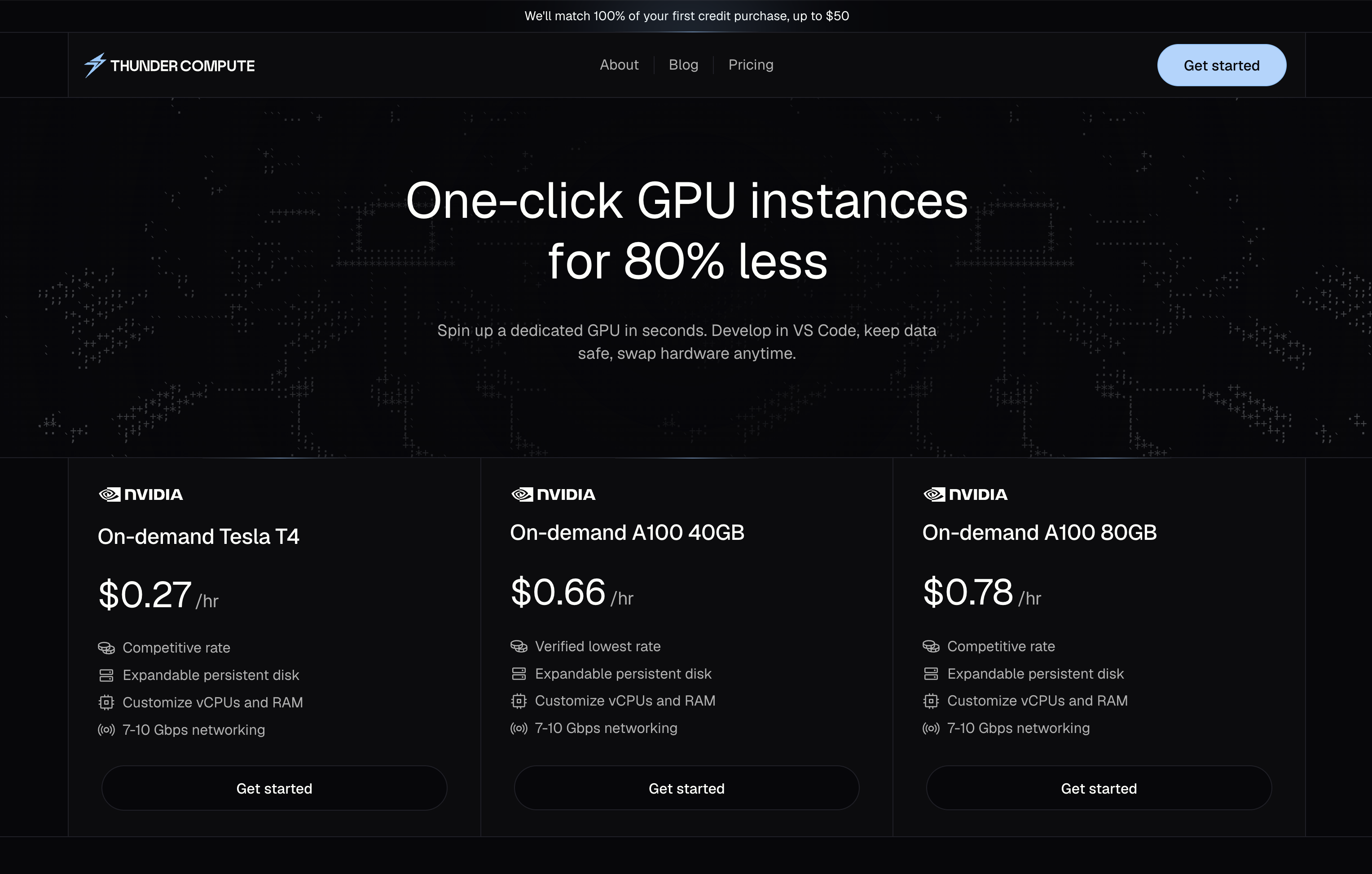

- Thunder Compute offers A100 80GB instances starting at ~$0.78/hr with 4 vCPUs and 32GB RAM included.

- CoreWeave mainly targets enterprise & orchestration-heavy users, while Thunder Compute is purpose-built for individual developers and direct pay-as-you-go workloads.

- You can save about 70% on GPU costs by choosing Thunder Compute over CoreWeave’s complex, piecemeal pricing model.

What is CoreWeave and How Does it Work?

CoreWeave is a leading GPU cloud provider specializing in infrastructure for AI and HPC workloads. The company offers flexible, high-performance computing with extensive support for Kubernetes, Slurm, and bare-metal orchestration, making it ideal for machine learning at scale.

The CoreWeave business model centers on giving users access to NVIDIA's latest GPUs, including A100, H100, Blackwell/GB200, and others, via highly configurable cloud instances. Pretty flexible, right?

Customers can tailor GPU, CPU, RAM, and storage resources precisely to fit each workload, whether for training, inference, or simulation.

Originally launched as a Kubernetes-native service, CoreWeave now provides full-stack infrastructure for both enterprise and research, supporting both containerized (Kubernetes) and bare-metal (direct instance) workloads.

Their focus on near bare-metal performance and advanced orchestration attracts teams building large-scale ML pipelines and supercomputing applications.

Users pay only for what they use, can scale clusters up or down in real time, and avoid long-term hardware commitments. This model and its variety of orchestration tools allow organizations of all sizes to efficiently match resources with changing project demands.

CoreWeave Features

CoreWeave offers a complete range of GPU cloud services designed for AI workloads. The service provides access to a vast portfolio of NVIDIA GPUs, all running on bare metal infrastructure for maximum performance.

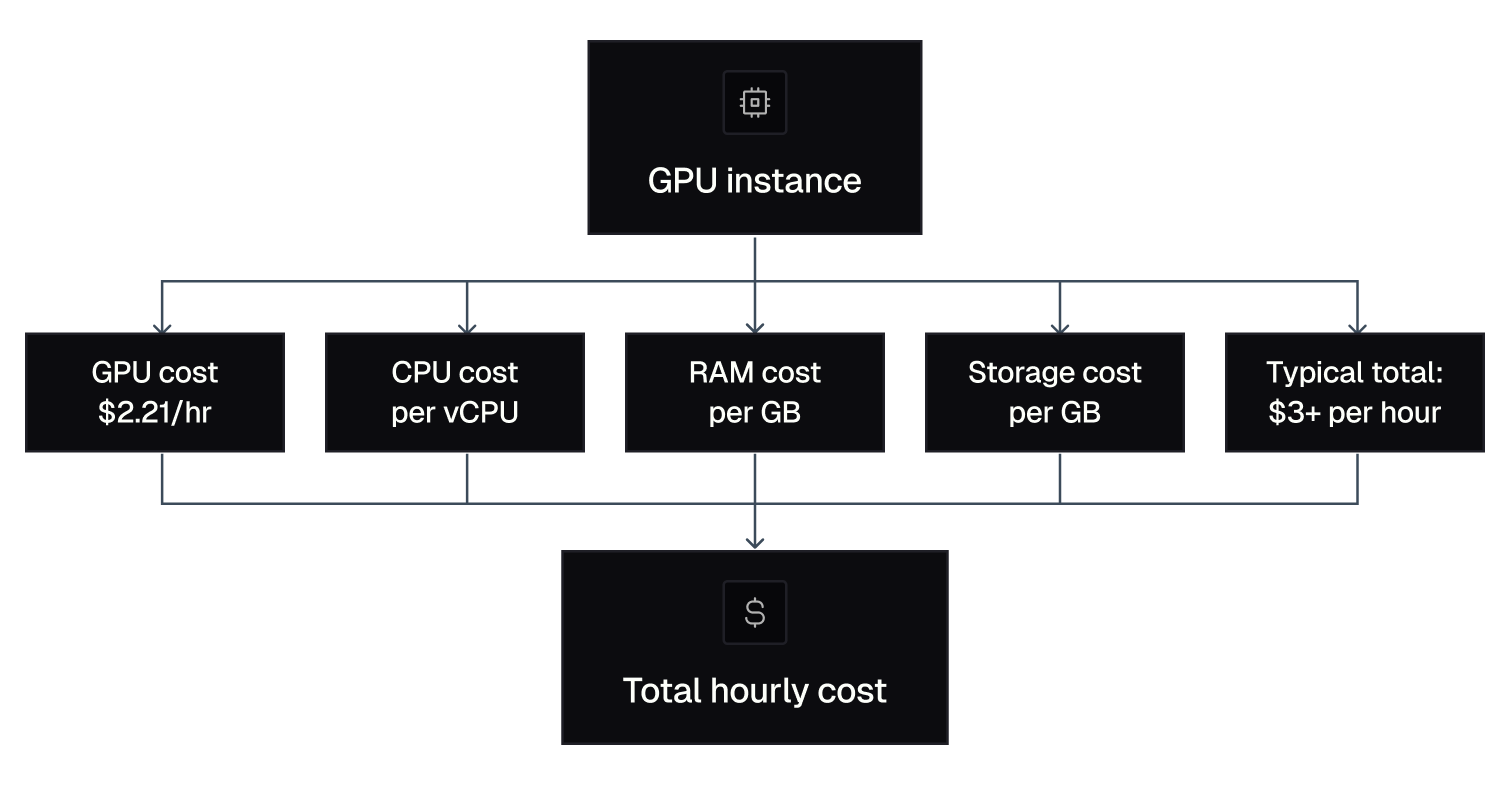

The pricing structure uses an à la carte model where the total instance cost combines a GPU component with the number of vCPUs and the amount of RAM allocated. CPU and RAM costs remain consistent per base unit, with the GPU selection being the primary variable affecting overall pricing.
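As a rough sketch of that arithmetic, the snippet below adds a GPU component to per-vCPU and per-GB RAM charges. The $2.21 GPU rate is the A100 80GB figure quoted in this guide; the vCPU and RAM unit rates, along with the names `UnitRates` and `instance_hourly_cost`, are hypothetical placeholders for illustration and are not CoreWeave's published prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnitRates:
    """Hourly unit prices in USD."""
    gpu_per_hour: float
    vcpu_per_hour: float    # ASSUMPTION: illustrative value, not a published rate
    ram_gb_per_hour: float  # ASSUMPTION: illustrative value, not a published rate


def instance_hourly_cost(rates: UnitRates, gpus: int, vcpus: int, ram_gb: int) -> float:
    """Sum the GPU, vCPU, and RAM components of an a la carte instance."""
    return (
        gpus * rates.gpu_per_hour
        + vcpus * rates.vcpu_per_hour
        + ram_gb * rates.ram_gb_per_hour
    )


# $2.21 is the A100 80GB GPU rate from this guide; the other two rates are made up.
a100_rates = UnitRates(gpu_per_hour=2.21, vcpu_per_hour=0.05, ram_gb_per_hour=0.01)

total = instance_hourly_cost(a100_rates, gpus=1, vcpus=8, ram_gb=64)
print(f"Estimated total: ${total:.2f}/hr")
```

Swapping in the real unit rates from a provider's price sheet gives the figure to budget against. Storage and egress would be further terms in the same sum.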

CoreWeave's Kubernetes-native deployment options allow for sophisticated orchestration of AI workloads. The service includes access to different NVIDIA GPU models, from older generations suitable for development work to the latest H100 chips for production training runs.

CoreWeave offers up to 60% discounts over On-Demand prices for committed usage, making it more cost-effective for predictable workloads.
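To see what that ceiling could mean in practice, here is a minimal sketch that applies a fractional discount to the GPU-only on-demand rates quoted in this guide. It assumes the discount applies uniformly to the GPU component, which may not match actual contract terms; `committed_rate` is a hypothetical helper name.

```python
def committed_rate(on_demand_rate: float, discount: float) -> float:
    """Return the hourly rate after a fractional discount (0.6 means 60% off)."""
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return on_demand_rate * (1.0 - discount)


# GPU-only on-demand rates quoted in this guide (USD per hour).
ON_DEMAND = {"A100 80GB": 2.21, "H100 PCIe": 4.25}
MAX_DISCOUNT = 0.60  # the "up to 60%" ceiling; a real commitment may earn less

for gpu, rate in ON_DEMAND.items():
    floor = committed_rate(rate, MAX_DISCOUNT)
    print(f"{gpu}: ${rate:.2f}/hr on demand, as low as ${floor:.3f}/hr committed")
```

Because the 60% figure is a maximum, the output is a best-case floor, not an expected price.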

The infrastructure includes cutting-edge networking and storage features designed to support the high-throughput requirements of modern AI applications. For organizations comparing options, our GPU cloud guide provides additional context on choosing the right provider.

When comparing GPU pricing across providers, it's worth checking our A100 GPU pricing comparison to understand how CoreWeave compares to its alternatives.

CoreWeave GPU Pricing Models

CoreWeave uses a flexible pricing structure that allows customization of GPU, CPU, RAM, and storage based on user needs. The pricing varies greatly depending on the GPU type selected and additional resources required.

CoreWeave’s published H100 PCIe price is $4.25/hr (GPU component only), and full-node HGX setups start around $49.24/hr for 8-GPU nodes (about $6.16 per GPU with CPU and RAM bundled).

The à la carte model means users must factor in additional costs for CPU cores, RAM, and storage beyond the base GPU pricing.

| GPU Model | Price per Hour (USD) | Configuration |
|---|---|---|
| H100 HGX | ~$6.16 | Per GPU in an 8-GPU node, CPU/RAM bundled |
| H100 PCIe | $4.25 | Single GPU, GPU component only |
| A100 80GB | $2.21 | NVLINK variant, GPU component only |
| 8x H100 | $49.24 | Full 8-GPU HGX node, CPU/RAM bundled |
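The per-GPU figure for the HGX node is the node price divided by eight. The short sketch below reproduces that division and compares it with the GPU-only PCIe rate. Both prices come from the table above; `per_gpu_rate` is a hypothetical helper name.

```python
def per_gpu_rate(node_rate: float, gpus_per_node: int) -> float:
    """Divide a whole-node hourly price evenly across its GPUs."""
    if gpus_per_node <= 0:
        raise ValueError("gpus_per_node must be positive")
    return node_rate / gpus_per_node


HGX_NODE_RATE = 49.24  # 8x H100 node, USD per hour, CPU/RAM bundled
H100_PCIE_RATE = 4.25  # single H100 PCIe, USD per hour, GPU component only

hgx_per_gpu = per_gpu_rate(HGX_NODE_RATE, 8)
gap = hgx_per_gpu - H100_PCIE_RATE

print(f"HGX per-GPU rate: ${hgx_per_gpu:.3f}/hr")
print(f"Gap versus GPU-only PCIe rate: ${gap:.3f}/hr")
```

The two rows are not like-for-like: the node price bundles CPU and RAM, while the PCIe price covers the GPU component alone, so the gap should not be read as a pure GPU premium.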

Look, the pricing model requires technical expertise to optimize costs effectively. Users must configure CPU, RAM, and storage separately, which can lead to unexpected expenses if not properly planned.

For developers seeking more budget-friendly options, Thunder Compute offers A100 instances at much lower rates. Our pricing page shows transparent, all-inclusive rates that eliminate the complexity of à la carte configuration.

CoreWeave Cost Examples and Real-World Scenarios

CoreWeave’s costs can vary widely based on GPU type, configuration, and resource allocation. As of December 2025, the H100 PCIe is offered at $4.25 per GPU per hour (GPU component only). A full 8xH100 HGX node costs $49.24/hr, or about $6.16 per GPU with CPU and RAM bundled. A100 80GB NVLINK instances are available at $2.21/hr for the GPU alone.

For a typical machine learning development scenario, a single A100 80GB instance costs $2.21/hr before factoring in mandatory CPU, RAM, and storage charges. A common setup (for example, 8 vCPUs and 64GB RAM) can push the total hourly rate beyond $3, especially once storage and network egress are included.

And larger training jobs? They scale up costs rapidly. For example, a 4xA100 NVLINK configuration at CoreWeave's rates might be listed around $8.84/hr just for GPUs, but additional resources can easily raise the final instance cost to $14-$16/hr. A 24-hour training session at $14.69/hr would cost more than $352, not accounting for storage and data transfer fees.
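The numbers in this example can be checked with a few lines of arithmetic. This is a sketch using only the rates quoted above; the function names are hypothetical, and the $14.69/hr all-in rate is the example figure from the text, not a quote for a specific configuration.

```python
A100_GPU_RATE = 2.21  # USD per GPU per hour, GPU component only


def gpu_only_rate(num_gpus: int, rate_per_gpu: float = A100_GPU_RATE) -> float:
    """Hourly cost of the GPU component for a multi-GPU instance."""
    return num_gpus * rate_per_gpu


def job_cost(hourly_rate: float, hours: float) -> float:
    """Total cost of running an instance for a given number of hours."""
    return hourly_rate * hours


gpus_hourly = gpu_only_rate(4)          # 4x A100, GPUs only
all_in_hourly = 14.69                   # example all-in rate from the text
overhead_hourly = all_in_hourly - gpus_hourly

print(f"GPU-only rate:        ${gpus_hourly:.2f}/hr")
print(f"CPU/RAM overhead:     ${overhead_hourly:.2f}/hr")
print(f"24h at GPU-only rate: ${job_cost(gpus_hourly, 24):.2f}")
print(f"24h at all-in rate:   ${job_cost(all_in_hourly, 24):.2f}")
```

Running the same two functions with your own GPU count and session length shows how much of the bill comes from the non-GPU components.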

To be fair, CoreWeave's list pricing is often more competitive than the hyperscalers'. Microsoft Azure and AWS typically charge $5-$7/hr or more for equivalent H100 GPUs, which puts CoreWeave's $4.25/hr H100 PCIe rate roughly 15-40% lower at those prices for on-demand GPU compute, before CPU and RAM charges are added.

CoreWeave Key Limitations and Gaps

CoreWeave is primarily designed for enterprise-scale workloads and often requires substantial budgets. The hourly costs for H100 ($4.25+) and A100 ($2.21+) instances add up quickly, making sustained access difficult for individual developers and small labs.

CoreWeave’s minimum instance requires 1 GPU, at least 1 vCPU, and a small root disk (40GB+), with RAM configured separately. The exact minimum RAM depends on GPU type and region.

The platform is less accessible for students, solo developers, or small teams who need affordable, low-friction GPU access for prototyping or coursework. The minimum resource requirements and an enterprise-first billing model create barriers for rapid iteration and experimentation typical of smaller projects.

The setup and management process can also be daunting for non-experts. (Been there? Yeah, it's not fun.)

Deploying and optimizing instances frequently requires knowledge of Kubernetes, cloud orchestration, or cluster configuration, skills that are not universal among typical developers or researchers.

Unlike developer-first competitors, CoreWeave does not emphasize features such as one-click instance creation, VS Code plugins, or streamlined UI for instant launch and job management. This can make agile or exploratory GPU work more challenging for those without cloud ops backgrounds.

Best CoreWeave Alternatives

Thunder Compute stands out as the best CoreWeave alternative, offering A100 80 GB GPUs at ~$0.78/hr (4 vCPUs & 32 GB RAM included) compared to CoreWeave's $2.21/hr price for just the GPU component. Thunder Compute provides one-click deployment, VS Code integration, and persistent storage without the complexity of CoreWeave's enterprise-focused configuration requirements.
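How large the saving looks depends on which CoreWeave figure is used as the baseline. The sketch below computes the percentage for both the GPU-only rate and the roughly $3/hr usable-instance estimate from earlier in this guide; `savings_pct` is a hypothetical helper name.

```python
def savings_pct(baseline_rate: float, alternative_rate: float) -> float:
    """Percentage saved by paying alternative_rate instead of baseline_rate."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (baseline_rate - alternative_rate) / baseline_rate * 100.0


THUNDER_A100 = 0.78         # USD/hr, 4 vCPUs and 32 GB RAM included
COREWEAVE_GPU_ONLY = 2.21   # USD/hr, A100 80GB GPU component only
COREWEAVE_USABLE = 3.00     # USD/hr, this guide's estimate with CPU/RAM added

print(f"vs GPU-only rate:       {savings_pct(COREWEAVE_GPU_ONLY, THUNDER_A100):.1f}%")
print(f"vs ~$3 usable instance: {savings_pct(COREWEAVE_USABLE, THUNDER_A100):.1f}%")
```

Note that the ~$3/hr estimate in this guide assumes 8 vCPUs and 64GB RAM, so the second comparison is not perfectly like-for-like with a 4 vCPU, 32 GB instance.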

For developers seeking affordable, accessible GPU computing, Thunder Compute delivers the same powerful hardware at a fraction of the cost with a much simpler user experience. The all-inclusive pricing eliminates surprise charges and configuration complexity.

Speaking of alternatives, here's what else is out there in the GPU cloud space:

- RunPod: Competitive pricing, but fewer developer-experience features and less consistent availability

- Lambda Labs: Provides good performance but with higher costs than Thunder Compute

- Vast.ai: Marketplace model with mixed reliability

- AWS/Google Cloud/Azure: Enterprise-grade but expensive for individual developers

- Paperspace: User-friendly but limited GPU selection

Thunder Compute's advantage over these alternatives is its combination of lowest-in-market pricing with developer-focused features. You get enterprise-grade GPUs with the simplicity needed for rapid development and prototyping.

Most alternatives either sacrifice cost-effectiveness or ease of use. Thunder Compute delivers both, making it the ideal choice for developers who need powerful GPU computing without enterprise complexity or pricing.

FAQ

Q: How much does CoreWeave actually cost compared to other GPU providers?

A: CoreWeave charges $2.21/hr for an A100 80GB GPU alone. When you add required CPU, RAM, and storage, the typical hourly total exceeds $3.00. By contrast, Thunder Compute offers the same A100 hardware starting at ~$0.78/hr with 4 vCPUs and 32GB RAM included, which works out to about 70% savings for most users.

Q: What makes CoreWeave's pricing so complex to understand?

A: CoreWeave uses an à la carte model where you pay for GPU, CPU, RAM, and storage separately. This requires users to configure and track each resource, making the true total cost unpredictable, unlike all-inclusive providers with flat hourly rates.

Q: When should I consider CoreWeave over simpler alternatives?

A: CoreWeave is ideal for large enterprises or teams needing advanced Kubernetes-native deployment, custom HPC setups, or specific bare-metal performance. These organizations usually have the technical expertise and budgets for flexible, high-throughput clusters. Smaller teams and individual developers usually prefer simpler, more affordable platforms that don’t require complex configuration.

Q: Can I run a basic machine learning project affordably on CoreWeave?

A: A basic ML project needing one A100 for 24 hours will generally cost around $72 on CoreWeave (24 hours at roughly $3/hr, including required CPU/RAM/storage). By comparison, Thunder Compute would charge about $19 for the same 24 hours at ~$0.78/hr with CPU and RAM included, making it far more economical for prototypes or experiments.

Q: What's the minimum configuration required to run a CoreWeave instance?

A: For a valid instance, CoreWeave requires at least 1 GPU, 1 vCPU, a small amount of RAM (around 2GB, though the exact minimum depends on GPU type and region), and a 40GB NVMe root disk. Each element is billed separately, and increasing any component will increase the overall cost.

Final thoughts on CoreWeave GPU pricing

CoreWeave's enterprise focus and complex pricing structure make it a tough choice for most developers who just want affordable GPU access. The à la carte model might work for large companies with dedicated infrastructure teams, but individual developers end up paying premium prices for unnecessary complexity. Thunder Compute delivers the same A100 performance at a fraction of the cost with none of the configuration headaches. Your GPU budget can go much further when you skip the enterprise overhead and focus on what matters: getting your AI projects built.