For most AI developers, the choice between Google Cloud Platform (GCP) and Thunder Compute comes down to cost versus complexity. GCP locks you into premium enterprise services at premium prices, while Thunder Compute makes GPU access simple and up to 87% cheaper. The real decision isn’t about who has the bigger ecosystem; it’s whether you’d rather burn budget on overhead or spend it training models.

In this article, we'll compare Thunder Compute and Google Cloud on pricing, setup, and performance, so you can choose the GPU cloud platform that best fits your AI development workflow.

TLDR:

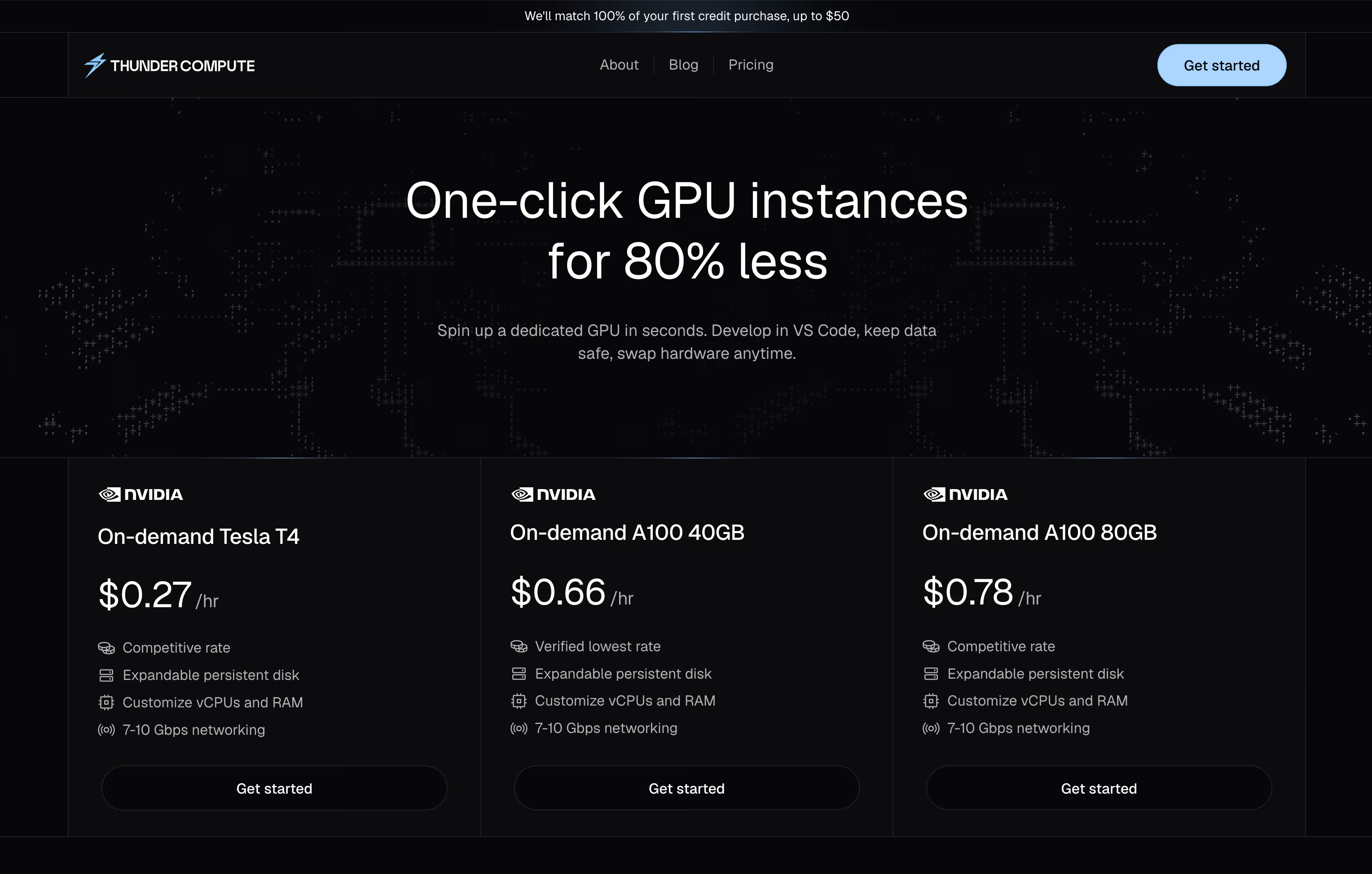

- GPUs cost 78% to 87% less on Thunder Compute than on GCP (A100 at $0.78 per hour vs about $3.50 per hour on GCP).

- You can launch GPU instances in 30 seconds on Thunder Compute with VS Code integration, compared with days of setup on GCP.

- GCP requires quota approvals and complex configuration; Thunder offers one-click deployment.

- Thunder delivers five to seven times more GPU hours than traditional cloud providers, at the same cost.

What GCP Does and Their Approach

GCP takes the traditional hyperscaler approach to GPU computing. They offer GPU instances through Compute Engine with options like A100, H100, T4, and L4 GPUs across different machine configurations.

GCP's strategy revolves around integration with their broader ecosystem. You get access to services like Vertex AI, BigQuery, and their managed ML tools. The idea is that you'll use their GPUs as part of a larger Google Cloud workflow.

Their GPU offerings come in predefined machine types, from single GPUs up to 16 A100s in a single VM. The focus is on enterprise customers who need that level of integration and are willing to pay premium prices for it.

Google Cloud Platform positions itself as a complete solution in which GPU compute is just one piece of a larger enterprise puzzle.

But here's where it gets tricky. GCP's approach assumes that you want to buy into their entire ecosystem. If you just need GPU cloud resources for training models, you're paying for a lot of services you might never use.

What Thunder Compute Does and Our Approach

We built Thunder Compute for developers who want powerful GPU access without the complexity or cost of traditional cloud providers. Our approach is simple: We give you the best GPU experience at the lowest possible price.

Thunder Compute offers on-demand GPU instances that you can launch in seconds. We support NVIDIA T4, A100 40GB, A100 80GB, and H100 GPUs with up to four GPUs per instance. The key difference is how we've designed the entire experience around developer productivity.

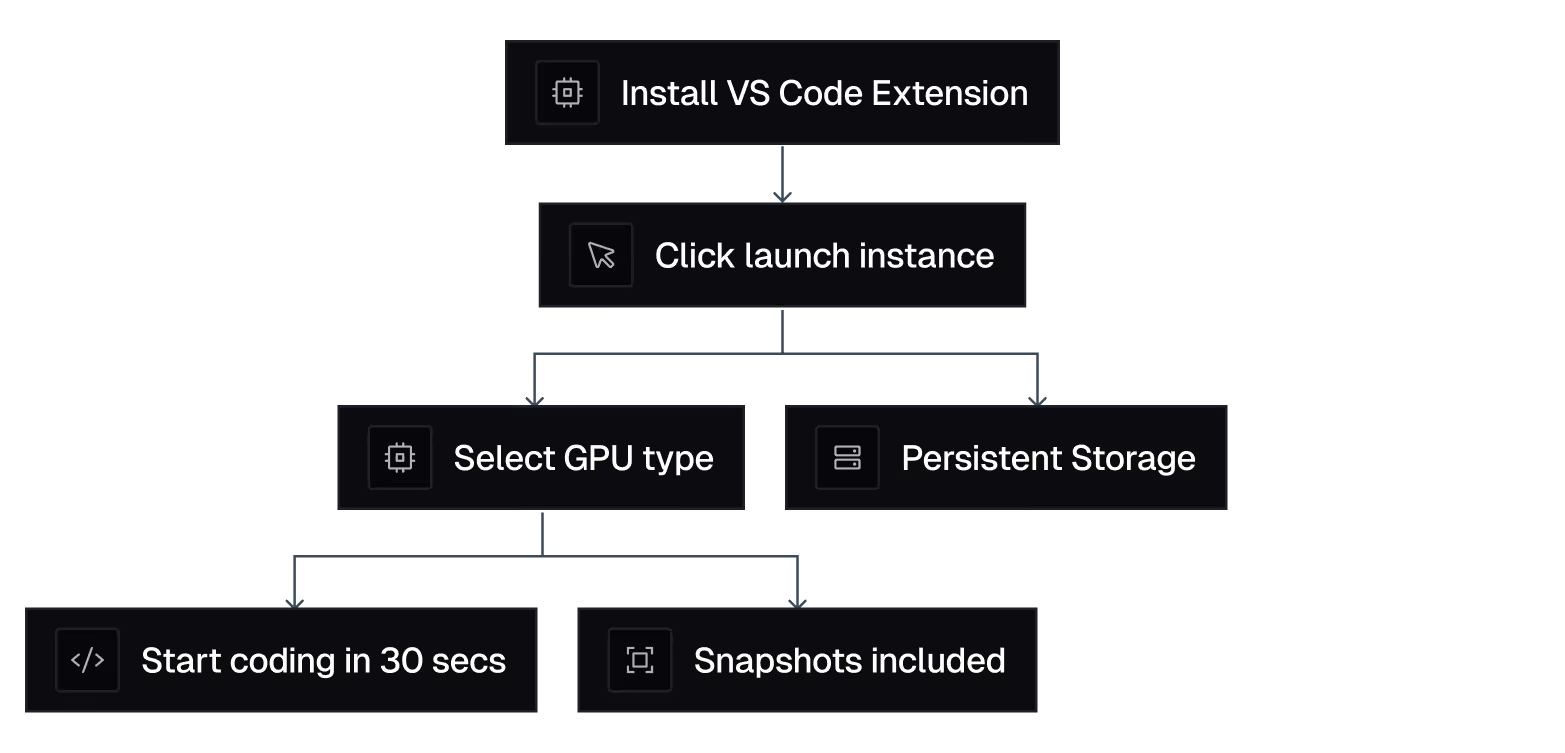

Our VS Code integration means that you can connect directly to your GPU instance and start coding right away: no SSH setup, no driver installation, no configuration headaches. You click a button, and you're writing code on a cloud GPU.

We also include features that other providers charge extra for. Persistent storage comes standard, so your data and environment persist between sessions. You can take snapshots of your entire setup and restore them later. Need more power? Swap your GPU type with one click.

The cheapest cloud GPU providers often sacrifice reliability or features to hit low prices. We've taken a different approach by building software that gets the most from hardware, letting us pass those savings to you.

Our pricing model is transparent: you pay per minute for what you use. It's that simple.
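As a rough illustration of per-minute billing, the sketch below prorates an hourly rate by the minutes used. The function name and the rounding to the nearest cent are assumptions made for this example, not a description of how an actual invoice is computed.

```python
from decimal import Decimal, ROUND_HALF_UP


def per_minute_cost(hourly_rate_usd: str, minutes_used: int) -> Decimal:
    """Prorate an hourly rate to the minutes actually used.

    Hypothetical helper: rounding to the nearest cent is an assumption.
    """
    rate_per_minute = Decimal(hourly_rate_usd) / Decimal(60)
    cost = rate_per_minute * Decimal(minutes_used)
    return cost.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# A 95-minute session at the $0.78/hour A100 rate quoted in this article.
print(per_minute_cost("0.78", 95))  # prints 1.24
```

Under these assumptions, a 95-minute session costs about $1.24 instead of the $1.56 that two full hours would cost.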

Pricing and Cost Comparison

The difference becomes crystal clear when you look at pricing.

GCP charges around $11 per hour for each H100 GPU when you factor in the required VM costs (prices vary based on several factors including region and demand). Their eight-GPU H100 instances run about $88.49 per hour. For A100 80GB instances, you're looking at roughly $3 to $4 per GPU hour depending on the machine type.

Thunder Compute offers 80GB A100s at $0.78 per hour and H100 instances at $1.89 per hour all-in. That's not a typo.
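To see what those quoted rates imply, here is a small sketch that computes the percentage saved and the GPU hours a fixed budget buys. The $3.50 A100 figure is the midpoint of the $3 to $4 range above, and the $1,000 budget is a hypothetical number chosen for illustration. It compares headline GPU rates only, so any separately billed storage, networking, or egress is not reflected.

```python
def savings_percent(baseline_rate: float, cheaper_rate: float) -> float:
    """Percentage saved by paying cheaper_rate instead of baseline_rate."""
    return (1 - cheaper_rate / baseline_rate) * 100


def hours_for_budget(budget_usd: float, hourly_rate: float) -> float:
    """GPU hours that a fixed budget buys at a given hourly rate."""
    return budget_usd / hourly_rate


# Approximate per-GPU hourly rates quoted in this article (USD).
QUOTED_RATES = {
    "A100 80GB": {"gcp": 3.50, "thunder": 0.78},  # 3.50 = midpoint of $3 to $4
    "H100": {"gcp": 11.00, "thunder": 1.89},
}
BUDGET_USD = 1_000  # hypothetical budget, for illustration only

for gpu, rates in QUOTED_RATES.items():
    saved = savings_percent(rates["gcp"], rates["thunder"])
    gcp_hours = hours_for_budget(BUDGET_USD, rates["gcp"])
    thunder_hours = hours_for_budget(BUDGET_USD, rates["thunder"])
    print(
        f"{gpu}: {saved:.0f}% lower rate, "
        f"{gcp_hours:.0f} h on GCP vs {thunder_hours:.0f} h on Thunder Compute"
    )
```

Swapping in the current rates for your region and machine type gives a comparison specific to your workload.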

But GCP's pricing gets worse when you add up the hidden costs. You pay separately for the VM compute, storage, networking, and data egress. Those "small" charges add up fast when you're training AI models regularly.

Thunder Compute keeps pricing simple by bundling high-speed networking, snapshots, and developer tools into the quoted rate, with straightforward storage options and none of the complex add-on fees that GCP charges.

For a typical AI startup running experiments and training models, these cost savings can mean the difference between burning through funding on infrastructure and investing it in product development.

The math is simple: You get five to seven times more GPU hours for the same cost with Thunder Compute.

Setup Complexity and Developer Experience

Setting up GPU instances on GCP can be a nightmare, especially if you're new to Google Cloud. First, you need to request GPU quotas, which can take days or even weeks to approve. Then you're dealing with complex VM configurations, networking setup, and driver installations.

We've seen developers spend entire days just trying to get a working GPU environment on GCP. Debugging A100 driver issues and troubleshooting CUDA problems on a cloud VM is nobody's idea of fun.

Thunder Compute gets rid of that friction.

Here's what getting started on Thunder Compute looks like:

- Install our VS Code extension.

- Click "Launch Instance."

- Select your GPU type.

- Start coding in less than 30 seconds.

And here's a look at GCP setup:

- Create a Google Cloud account and set up billing.

- Request GPU quotas and wait for approval.

- Work through a complex console to create a VM.

- Configure networking and firewall rules.

- SSH into the instance and install CUDA drivers.

- Set up development environment manually.

- Debug and troubleshoot configuration issues.
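For the driver step in particular, a quick sanity check after installation is to ask `nvidia-smi` which GPUs it can see. The sketch below is one way to do that from Python on a Linux VM with NVIDIA drivers. The helper names are ours, chosen for illustration, and the script returns an empty list instead of failing when the tool is missing or errors out.

```python
import shutil
import subprocess


def parse_gpu_rows(csv_text: str) -> list[dict]:
    """Parse 'name, memory, driver' CSV rows as printed by nvidia-smi."""
    rows = []
    for line in csv_text.strip().splitlines():
        parts = [part.strip() for part in line.split(",")]
        if len(parts) != 3:
            continue  # skip anything that is not a well-formed row
        name, memory, driver = parts
        rows.append({"name": name, "memory": memory, "driver": driver})
    return rows


def visible_gpus() -> list[dict]:
    """Return the GPUs nvidia-smi reports, or [] if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=name,memory.total,driver_version",
            "--format=csv,noheader",
        ],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_rows(result.stdout)


gpus = visible_gpus()
if gpus:
    for gpu in gpus:
        print(f"{gpu['name']} ({gpu['memory']}), driver {gpu['driver']}")
else:
    print("No NVIDIA GPU visible: check the driver installation.")
```

An empty result usually points back to the driver or CUDA installation rather than to your training code.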

The difference in developer experience is night and day. We've optimized every step of the process because we know your time is better spent building models than fighting with infrastructure.

Performance and Scalability Features

GCP does offer impressive raw power. You can get as many as 16 A100s in a single VM and use their global network infrastructure. For massive enterprise workloads, that scale can be valuable.

But most AI developers don't need 16 GPUs in a single instance. They need flexible, cost-effective access to one to four GPUs that they can scale up or down based on their current project needs.

Thunder Compute focuses on the sweet spot that covers 90% of AI development use cases. Our instances support one to four GPUs with the ability to swap hardware configurations on the fly. This means you can upgrade from an A100 to an H100 for a bigger training job with one click.

And our persistent storage means that your datasets and model checkpoints survive between sessions. You can stop an instance when you're not using it and restart exactly where you left off. Try doing that smoothly on GCP.
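How a training script takes advantage of storage that outlives a session is up to you. One common pattern, sketched below with only the Python standard library, is to save progress after every epoch and resume from the last saved epoch on restart. The file layout and function names are hypothetical, the training step is a placeholder, and a temporary directory stands in for a persistent disk.

```python
import json
import os
import tempfile
from pathlib import Path


def save_checkpoint(path: Path, state: dict) -> None:
    """Write to a temp file, then rename, so a stop mid-write leaves the old file intact."""
    path.parent.mkdir(parents=True, exist_ok=True)
    fd, tmp_name = tempfile.mkstemp(dir=path.parent, suffix=".tmp")
    with os.fdopen(fd, "w") as tmp:
        json.dump(state, tmp)
    os.replace(tmp_name, path)


def load_checkpoint(path: Path) -> dict:
    """Return the saved state, or a fresh state if no checkpoint exists."""
    if path.exists():
        return json.loads(path.read_text())
    return {"epoch": 0}


def train(path: Path, total_epochs: int) -> dict:
    """Resume from the last completed epoch and checkpoint after each one."""
    state = load_checkpoint(path)
    for epoch in range(state["epoch"], total_epochs):
        # Placeholder: a real training step for this epoch would run here.
        state = {"epoch": epoch + 1}
        save_checkpoint(path, state)
    return state


# Simulate two sessions; the temporary directory stands in for a persistent disk.
with tempfile.TemporaryDirectory() as workdir:
    ckpt = Path(workdir) / "checkpoints" / "state.json"
    train(ckpt, total_epochs=3)         # first session stops after 3 epochs
    print(train(ckpt, total_epochs=5))  # second session resumes at epoch 3
```

In a real job the state would also include model and optimizer weights, saved with your framework's own serialization.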

We also provide high-speed networking (7 to 10 Gbps) that's optimized for ML workloads. Fast data transfer matters when you're moving large datasets or saving frequent checkpoints during training.
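To put those bandwidth numbers in context, the sketch below estimates the best-case time to move a dataset over a link of a given speed. It assumes decimal units (1 GB = 8 gigabits) and a fully used link; the `efficiency` parameter is our own addition for modeling real-world overhead, and the 100 GB dataset is a hypothetical size.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Best-case seconds to move size_gb gigabytes over a link_gbps link.

    efficiency is a hypothetical fudge factor (0 < efficiency <= 1) for
    protocol overhead; 1.0 means the link is fully used.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency in (0, 1]")
    size_gigabits = size_gb * 8  # 1 byte = 8 bits
    return size_gigabits / (link_gbps * efficiency)


# A hypothetical 100 GB dataset at both ends of the 7 to 10 Gbps range.
for gbps in (7, 10):
    print(f"{gbps} Gbps: {transfer_seconds(100, gbps):.0f} s")
```

In practice, disk throughput and the remote endpoint often limit transfers before the network link does, so treat these numbers as a lower bound.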

The ideal GPU cloud needs to balance performance with cost-effectiveness. Thunder Compute delivers enterprise-grade performance without the enterprise complexity or pricing.

Why Thunder Compute Is a Better Choice

Thunder Compute delivers what most AI developers need: reliable, affordable GPU access with an amazing developer experience.

The 80% cost savings alone make Thunder Compute compelling, but it's the combination of price and usability that makes us the better choice.

GCP makes sense if you're a large enterprise that needs deep integration with Google's ecosystem. But if you're a researcher, startup, or individual developer who wants to focus on building AI rather than managing infrastructure, Thunder Compute is the obvious choice.

We've designed our service for the AI development workflow. Every feature, from VS Code integration to hardware swapping, exists because developers told us they needed it.

ML hardware requirements haven't changed, but accessing that hardware should be simple and affordable.

FAQ

Q: How much can I save switching from GCP to Thunder Compute?

A: You can save from 78% to 87% on GPU costs, with A100 80GB instances at $0.78 per hour versus GCP's ~$3.50 per hour, and H100 instances at $1.89 per hour versus GCP's ~$11 per hour. This translates to five to seven times more GPU hours for the same budget.

Q: How long does it take to get started with Thunder Compute?

A: Thunder Compute gets you coding on a GPU in less than 30 seconds through our VS Code extension.

Q: What's included in Thunder Compute's pricing that GCP charges extra for?

A: Thunder Compute includes high-speed networking, snapshots, and all developer tools in our quoted hourly rate, while GCP charges separately for VM compute, storage, networking, and data egress on top of GPU costs.

Q: Can I upgrade my GPU type without losing my work and environment?

A: Yes, Thunder Compute allows you to swap GPU types with one click while preserving your entire environment, data, and installed packages.

Final Thoughts on Choosing Between Thunder Compute and GCP for GPU Computing

The cost difference alone tells the story, but it's really about getting back to what matters: building great AI instead of wrestling with infrastructure. Thunder Compute gives you enterprise-grade GPUs without the enterprise headaches or pricing. So more of your budget goes toward training models, not toward paying cloud bills. And the 30-second setup means you can spend your time coding, not configuring.