What is Ollama? Complete Guide to Local AI Models (December 2025)

You've probably hit that frustrating wall where cloud AI APIs are draining your budget, or maybe you're wondering if there's a way to run powerful models without sending your data to third parties. If you're looking into what Ollama is and how it could solve these exact problems, you're in the right place.

Ollama has become one of the most compelling solutions for running AI models locally, and we're going to break down everything you need to know to get started, as well as the best way to run your Ollama workflows on a dedicated GPU cloud.

TLDR:

- Ollama lets you run powerful AI models locally without cloud costs or privacy concerns

- You can deploy models like DeepSeek and Llama in minutes with simple commands and no ML expertise

- Local deployment eliminates API fees and keeps sensitive data on your infrastructure

- Thunder Compute provides dedicated GPU resources when you outgrow local hardware limits

- Ollama works for everything from code completion to enterprise chatbots requiring data privacy

What is Ollama

Ollama is a free, open-source tool for running AI models directly on your own machine. You download a model once and then run it locally, without relying on cloud-hosted services. This makes it a strong alternative to cloud-based AI services for users who value data privacy, cost control, and offline functionality.

Unlike proprietary cloud services, Ollama gives you complete control over your AI models and data.

Ollama represents a fundamental shift toward democratized AI, putting powerful language models directly in the hands of developers without the overhead of cloud dependencies or privacy concerns.

What sets Ollama apart is its focus on simplicity. You don't need extensive machine learning expertise to get started. The installation process is straightforward, and within minutes, you can have a capable LLM running on your laptop.

How Ollama Works

Ollama runs LLMs in a self-contained environment on your system. Each model's weights, configuration, and data are bundled into a single package, which avoids conflicts with other installed software and means everything needed to run the model is already included.

The process is remarkably straightforward. First, you pull models from the Ollama library using simple commands. Then, you run these models as-is or adjust parameters to customize them for specific tasks. After the setup, you can interact with the models by entering prompts, and they'll generate responses just like ChatGPT or Claude.
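The pull, run, and prompt steps above can be sketched against the REST API that the Ollama server exposes on your machine (by default at `http://localhost:11434`). This is a minimal sketch under a few assumptions: the Ollama server is already installed and running, the model tag `llama3.2` is only an illustrative choice, and the helper names are hypothetical rather than part of Ollama itself.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_request(path, payload):
    """Build a JSON POST request aimed at the local Ollama server."""
    return urllib.request.Request(
        OLLAMA_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_payload(model):
    """Body for /api/pull: download a model from the Ollama library."""
    # stream=False asks for one final status object instead of progress events.
    return {"model": model, "stream": False}


def generate_payload(model, prompt, temperature=None):
    """Body for /api/generate: run a prompt, optionally adjusting parameters."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if temperature is not None:
        payload["options"] = {"temperature": temperature}
    return payload


def post_json(path, payload):
    """Send the request and decode the JSON reply."""
    with urllib.request.urlopen(build_request(path, payload)) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    model = "llama3.2"  # assumed model tag; substitute one you actually use
    post_json("/api/pull", pull_payload(model))
    reply = post_json(
        "/api/generate",
        generate_payload(model, "Why is the sky blue?", temperature=0.2),
    )
    print(reply["response"])
```

The same two steps are also available from the command line as `ollama pull` and `ollama run`; the API form is shown here because it is the one you would call from an application.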

One of the standout features of Ollama is its use of quantization. Quantization stores a model's weights at lower numeric precision (for example, 4 bits per weight instead of 16), which cuts memory use and computational load and allows these models to run efficiently on consumer-grade laptops and desktops. This is no small feat, considering the size and complexity of LLMs.

The magic happens through model compression techniques that maintain most of the original model's performance while dramatically reducing memory requirements. Models requiring tens of GBs of VRAM can often be compressed to run on 8GB consumer hardware.
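To make the memory savings concrete, here is a rough back-of-the-envelope calculation. It is a sketch that counts model weights only: real quantized formats typically use slightly more than their nominal bits per weight, and running a model also needs memory for the context cache and runtime overhead. The function name is hypothetical.

```python
def estimate_weight_memory_gb(params_billions, bits_per_weight):
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Compare a 7B-parameter model at three common precisions.
    for bits in (16, 8, 4):
        size = estimate_weight_memory_gb(7, bits)
        print(f"7B model at {bits}-bit: ~{size:.1f} GB")
```

By this estimate a 7B-parameter model needs about 14 GB at 16-bit precision but only about 3.5 GB at 4-bit, which is why it can fit on a machine with 8GB of memory.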

Ollama also handles most of the technical complexity behind the scenes. You don't need to set up a CUDA toolkit, manage dependencies, or optimize models by hand, although GPU acceleration still requires a working GPU driver. The tool manages everything from model loading to memory allocation automatically.

For teams that need consistent performance or are working with particularly demanding models, cloud GPU services like those offered by Thunder Compute can provide dedicated hardware while maintaining the control benefits of local deployment.

Available Models on Ollama

Ollama supports a wide range of model families, from lightweight assistants to powerful reasoning LLMs. Here are some of the most popular options:

| Model Family | Best For | Parameter Sizes | Key Features |
|---|---|---|---|
| DeepSeek | Complex reasoning, math problems | 1.5B-67B | Advanced problem-solving skills |
| Llama | General conversation, versatile tasks | 7B-405B | Meta's foundation models, highly capable |
| Mistral | Fast performance, balanced tasks | 7B-22B | Optimized for speed and accuracy |
| Gemma | Lightweight applications | 2B-27B | Google's fast models |
| CodeLlama | Programming assistance | 7B-34B | Specialized for code generation |

These model families cover most use cases: lightweight models for simple tasks, mid-range for versatility, and larger models for advanced reasoning or coding.

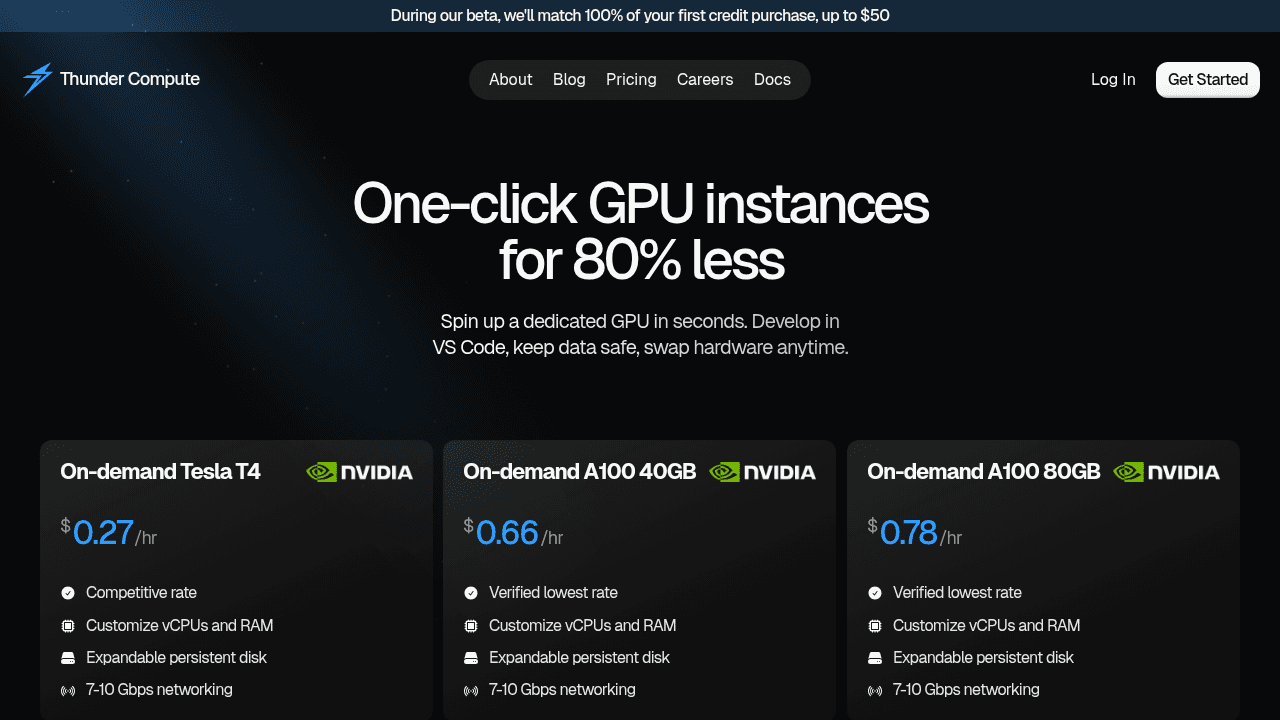

When workloads exceed what local machines can handle, Thunder Compute's on-demand instances (A100s, H100s, etc.) let you scale seamlessly without losing Ollama’s privacy and control benefits.

Use Cases and Applications

Ollama serves diverse use cases across different industries and user types, making it valuable for both individual developers and enterprise teams.

Development and Research: Prototyping AI applications becomes much more accessible when you don't need to worry about API costs or rate limits. Developers use Ollama for code completion, programming assistance, document analysis, and research with proprietary datasets requiring privacy.

Business Applications: Companies in regulated industries particularly benefit from Ollama's local deployment model. Customer service chatbots for sensitive industries, internal knowledge base querying, content generation for marketing teams, and legal document analysis all become possible without data leaving the organization.

Educational Use: Educational institutions value Ollama because it eliminates ongoing costs while providing reliable access to AI features. Common use cases include teaching AI concepts without cloud costs, student projects that don't depend on an external service being available, and academic research with data privacy requirements.

One of the most compelling reasons to run models locally is the control it provides over sensitive data. Many industries, especially finance, healthcare, and government, are subject to strict data privacy regulations, which can make cloud solutions risky.

With Ollama, prompts and responses are processed entirely on your own hardware. That avoids the compliance issues tied to third-party servers, removes network round trips to a remote API, and minimizes reliance on internet connectivity.
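As one illustration of the document-analysis use case, the sketch below sends a local document and a question to Ollama's chat endpoint on `localhost`, so the text is never sent to a third-party service. The model tag, the system prompt wording, and the helper names are assumptions made for this example, and the Ollama server is assumed to be running.

```python
import json
import urllib.request


def build_chat_payload(model, document_text, question):
    """Body for /api/chat: a system instruction plus the document and question."""
    return {
        "model": model,
        "stream": False,  # return one complete reply instead of a token stream
        "messages": [
            {
                "role": "system",
                "content": "Answer using only the provided document.",
            },
            {
                "role": "user",
                "content": f"Document:\n{document_text}\n\nQuestion: {question}",
            },
        ],
    }


def ask_local_model(payload, base_url="http://localhost:11434"):
    """POST the payload to the local server and return the reply text."""
    request = urllib.request.Request(
        base_url + "/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        reply = json.loads(resp.read().decode("utf-8"))
    return reply["message"]["content"]


if __name__ == "__main__":
    # Hypothetical document text; in practice you would read it from a file.
    contract = "The supplier must deliver within 30 days of the order date."
    payload = build_chat_payload("llama3.2", contract, "What is the delivery deadline?")
    print(ask_local_model(payload))
```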

Thunder Compute: Scaling Your Ollama Workflows

While Ollama excels at making AI models accessible on local hardware, many users eventually encounter limitations with their local setup. Whether it's insufficient GPU memory for larger models, inconsistent performance, or the need for team collaboration, there comes a point where dedicated infrastructure becomes valuable.

Thunder Compute bridges the gap between local development and enterprise-scale AI deployment. Our on-demand GPU instances provide the perfect environment for running Ollama workloads that have outgrown local hardware constraints.

With support for high-end GPUs like A100s and H100s, persistent storage, and VS Code integration, developers can scale their Ollama projects while maintaining the control and flexibility they value. You get the same privacy benefits of local deployment with the performance of enterprise hardware.

The transition from local Ollama development to cloud-based scaling is smooth. You can develop locally, then deploy to Thunder Compute instances when you need more power, all while using the same tools and workflows.
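One way to keep the same code working in both places is to make the server address configurable. The sketch below reads the `OLLAMA_HOST` environment variable, which Ollama's own tooling also uses, and falls back to the local default. How a Thunder Compute instance exposes its Ollama port is not covered here, so the remote address in the comment is purely hypothetical.

```python
import os

DEFAULT_HOST = "http://127.0.0.1:11434"  # Ollama's default local address


def resolve_base_url(env=None):
    """Return the Ollama base URL from OLLAMA_HOST, or the local default."""
    env = os.environ if env is None else env
    host = env.get("OLLAMA_HOST", "").strip()
    if not host:
        return DEFAULT_HOST
    if "://" not in host:
        host = "http://" + host  # allow bare "host:port" values
    return host.rstrip("/")


if __name__ == "__main__":
    # Unset: talks to the local machine.
    # Set, e.g. OLLAMA_HOST=gpu-box.example:11434 (hypothetical address):
    # the same script talks to the remote GPU instance instead.
    print(resolve_base_url())
```

With this in place, switching from a laptop to a remote GPU instance becomes a configuration change instead of a code change.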

For teams building serious AI applications, Thunder Compute offers the reliability and performance of cloud infrastructure at a fraction of the cost of traditional providers, making it the natural next step for Ollama users ready to scale.

FAQ

What hardware do I need to run Ollama effectively?

Ollama can run smaller models on consumer-grade laptops with 8GB of RAM, but it performs best with a dedicated NVIDIA or AMD GPU. For larger models requiring substantial GPU memory, you may need high-end hardware or cloud GPU instances to get smooth performance.

How does Ollama compare to cloud AI services like ChatGPT?

Ollama runs entirely on your local machine, providing complete data privacy and no per-request fees, while cloud services require internet connectivity and typically charge per use or by subscription. However, cloud services usually offer more powerful models and don't require local hardware setup.

Can I use Ollama for commercial applications?

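Yes, Ollama itself is open source under the MIT license and can be used for commercial purposes without licensing fees. Individual models carry their own licenses, so check the terms of each model you deploy. This makes Ollama particularly attractive for businesses that need AI features while maintaining data privacy and controlling costs.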

When should I consider moving from local Ollama to cloud infrastructure?

Consider cloud infrastructure when your local hardware can't handle larger models, you need consistent performance for production workloads, or your team requires collaborative access to AI resources that exceed what local machines can do.

Final thoughts on running AI models locally with Ollama

Ollama puts powerful AI tools directly on your machine without the ongoing costs or privacy concerns of cloud services. Whether you're building prototypes or production applications, starting locally gives you complete control over your data and models. When your projects outgrow local hardware limitations, Thunder Compute provides the dedicated GPU resources you need while maintaining that same level of control. The path from local experimentation to scalable AI deployment has never been more straightforward.