AI GPU Rental Market Trends December 2025: Complete Industry Analysis

Renting AI GPUs for your ML projects can be expensive, and the pricing from major cloud providers can make even simple fine-tuning jobs feel like a luxury. We're seeing developers get priced out of experimenting with larger models, which is exactly why the AI GPU rental market is shifting so dramatically right now.

TLDR:

- The AI GPU rental market is projected to grow from $3.34B in 2023 to $33.91B by 2032, and the resulting competition is driving down costs.

- H100 prices dropped from $8/hr to $2.85-3.50/hr; A100s now available at $0.66-0.78/hr.

- Thunder Compute's A100 rates run 45-61% below typical competitor pricing.

- Supply chain improvements have eased the availability constraints that plagued 2023-2024.

- Market stabilizing around developer experience and reliability over pure price competition.

Market Growth Surge in the AI GPU Rental Space

The GPU rental market is projected to grow from $3.34 billion in 2023 to $33.91 billion by 2032, roughly a tenfold increase.

This explosive growth is creating opportunities for developers, researchers, and startups who previously couldn't afford enterprise-grade GPU access. The GPU marketplace shows how democratized access is becoming the norm rather than the exception.

Three things are driving this surge: LLM development, computer vision applications, and the growth of multimodal AI systems that require serious computational horsepower. Every startup wants to fine-tune its own models, and every researcher needs access to A100s or H100s.

The GPU-as-a-service sector is projected to hit $12.26 billion by 2030, up from $3.79 billion in 2023. This growth benefits users by creating competitive pressure that drives down prices and improves service quality.
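To put the two projections on a comparable footing, the sketch below converts each pair of endpoint figures into an implied compound annual growth rate. It uses only the dollar amounts and years quoted in this article; the `cagr` helper is our own illustrative function, not something taken from the underlying market reports.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in this article, in billions of USD.
gpu_rental = cagr(3.34, 33.91, 2032 - 2023)        # 2023 -> 2032
gpu_as_a_service = cagr(3.79, 12.26, 2030 - 2023)  # 2023 -> 2030

print(f"GPU rental market: {gpu_rental:.1%} per year")
print(f"GPU-as-a-service:  {gpu_as_a_service:.1%} per year")
```

The two estimates come from different forecasts with different market definitions, so the implied rates (roughly 29% and 18% per year) are not directly interchangeable.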

This market expansion has allowed providers like us to offer GPU cloud instances at price points that would've been impossible two years ago.

The competitive market is forcing new developments in orchestration, performance, and user experience. This means better tools and lower costs.

Current GPU Pricing Trends December 2025

H100 pricing has seen the most dramatic shifts, dropping from historical peaks of $8 per hour to a more reasonable $2.85-$3.50 range across most providers. Some specialized providers are now offering H100 access at around $1.50-$2.00 per hour, compared with $5-6 per hour just twelve months ago.

But most developers don't need H100s. A100s deliver exceptional value for the majority of AI development tasks.

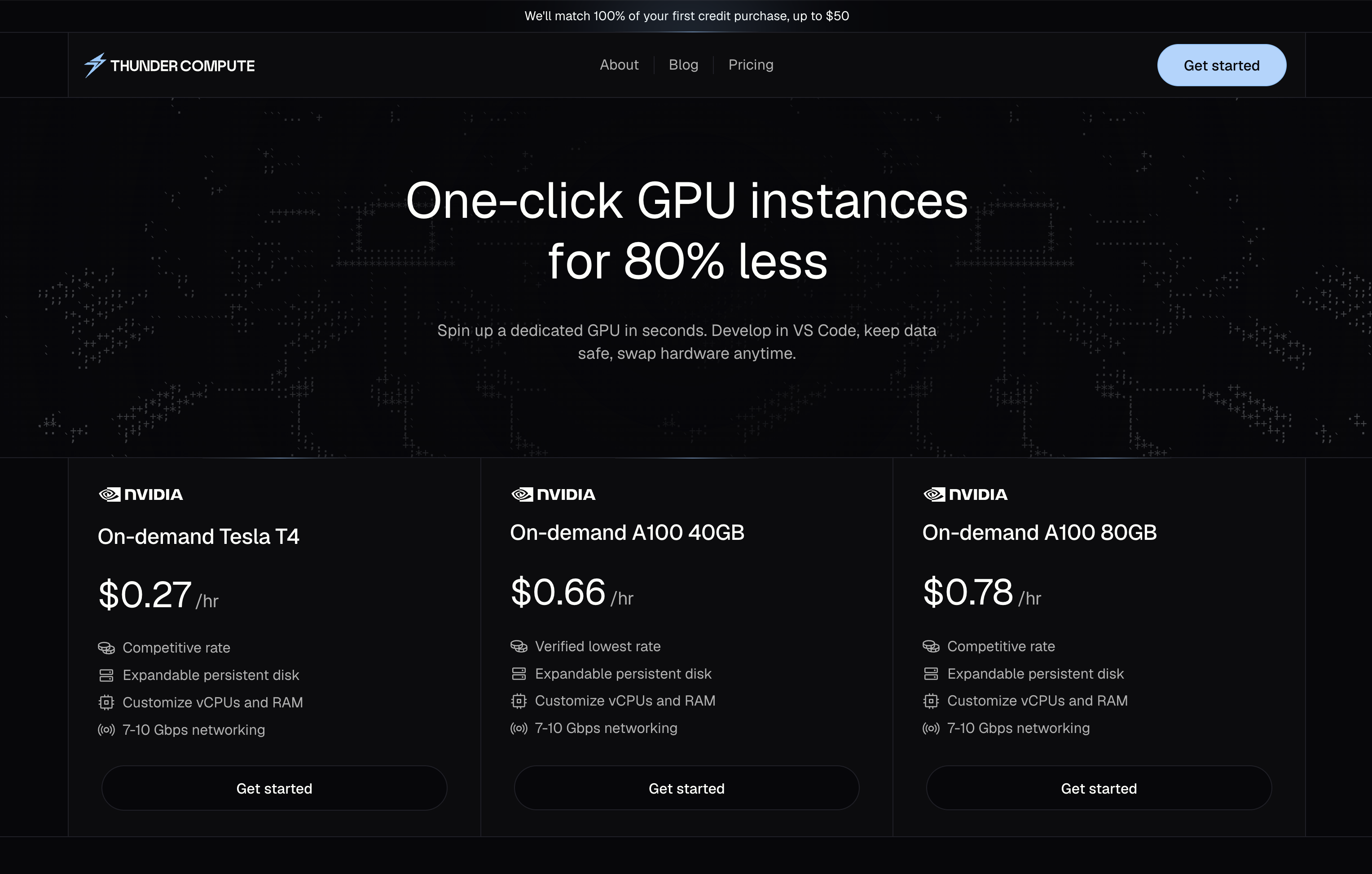

That's where Thunder Compute's pricing strategy shines. We're offering A100 40GB instances at $0.66/hour and A100 80GB at $0.78/hour. These aren't promotional rates or spot pricing. These are standard on-demand prices.

The H100 price analysis shows how market dynamics are shifting. Major cloud providers like AWS have cut costs for H100, H200, and A100 instances by up to 45%, according to recent industry reports.

This pricing pressure creates opportunities for developers who were previously priced out of GPU computing. Our A100 pricing comparison shows how big these savings can be.

| GPU Type | Thunder Compute | Typical Competitor | Savings |
|---|---|---|---|
| A100 40GB | $0.66/hr | $1.20-1.50/hr | 45-56% |
| A100 80GB | $0.78/hr | $1.64-2.00/hr | 52-61% |
| H100 80GB | Coming Soon | $2.85-3.50/hr | TBD |
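The savings column follows directly from the hourly rates. As a minimal sketch, the snippet below recomputes it from the prices in the table; the `savings_pct` helper and the `rates` dictionary are illustrative names of our own.

```python
def savings_pct(our_rate: float, competitor_rate: float) -> float:
    """Percentage saved by paying our_rate instead of competitor_rate."""
    return (1 - our_rate / competitor_rate) * 100


# Hourly rates from the comparison table: (Thunder Compute, (competitor low, competitor high)).
rates = {
    "A100 40GB": (0.66, (1.20, 1.50)),
    "A100 80GB": (0.78, (1.64, 2.00)),
}

for gpu, (ours, (low, high)) in rates.items():
    print(f"{gpu}: {savings_pct(ours, low):.0f}-{savings_pct(ours, high):.0f}% savings")
```

Swapping in a quote from any other provider gives the equivalent figure for your own comparison.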

A100 vs H100 Cost Analysis

The H100 offers up to 4x the performance of the A100 in specific workloads, particularly those that benefit from its higher memory bandwidth and NVIDIA's Hopper architecture. But performance per dollar tells a different story.

For most fine-tuning tasks, model inference, and development work, A100s provide the optimal balance of power and cost. The H100 GPU price guide breaks down the hidden costs that can make H100 deployments expensive beyond the hourly rate.

We focus on making A100 access affordable and reliable.

The cost-performance analysis becomes even more compelling when you factor in development time. Our detailed performance comparison shows how professional-grade GPUs with larger VRAM pools allow workflows that aren't possible on consumer hardware.

For workloads that need H100 performance, the current market rates of $2.85-3.50/hour represent good value. But for the 80% of AI development tasks that run perfectly well on A100s, our pricing creates substantial savings.
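One way to make the performance-per-dollar argument concrete is to ask how much faster an H100 has to be before a job costs the same as it would on an A100. The sketch below uses the hourly rates quoted in this article; the 10-hour job length and the 2x speedup are hypothetical values chosen for illustration, and real speedups depend heavily on the workload.

```python
A100_80GB_RATE = 0.78      # $/hr, from this article
H100_RATES = (2.85, 3.50)  # $/hr market range, from this article


def break_even_speedup(fast_rate: float, slow_rate: float) -> float:
    """Speedup the pricier GPU needs so a job costs the same on both."""
    return fast_rate / slow_rate


def job_cost(hourly_rate: float, baseline_hours: float, speedup: float = 1.0) -> float:
    """Cost of a job that takes `baseline_hours` on the slower GPU."""
    return hourly_rate * baseline_hours / speedup


BASELINE_HOURS = 10.0  # hypothetical job length on an A100

for h100_rate in H100_RATES:
    needed = break_even_speedup(h100_rate, A100_80GB_RATE)
    print(f"H100 at ${h100_rate:.2f}/hr breaks even at {needed:.2f}x speedup")

for speedup in (2.0, 4.0):  # 4.0 is the article's best case; 2.0 is illustrative
    a100 = job_cost(A100_80GB_RATE, BASELINE_HOURS)
    h100 = job_cost(H100_RATES[0], BASELINE_HOURS, speedup)
    print(f"{speedup:.0f}x speedup: A100 ${a100:.2f} vs H100 ${h100:.2f}")
```

Under these assumptions the H100 needs roughly a 3.7x to 4.5x speedup to match the A100 80GB on cost per job, so only workloads near the 4x best case come out even.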

GPU Availability and Supply Chain Updates

Supply chain dynamics have improved dramatically throughout 2025. Google Cloud is making its latest A4 (B200) and A4X (GB200) instances generally available, competing directly with AWS, Azure, and Oracle Cloud offerings that provide 400 Gbit/s of connectivity per GPU.

This increased competition among hyperscalers is creating better availability for specialized providers like us. The GPU cloud rating system shows how different approaches to GPU orchestration affect real-world availability and performance.

The key insight is that software-driven orchestration can achieve better GPU utilization rates than traditional approaches, which translates into better availability and lower costs for end users.

Our orchestration technology allows near 100% utilization of GPU resources, so we can offer consistent availability even during peak demand periods. This is a major advantage over marketplace-style providers and their sometimes unpredictable availability.
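The link between utilization and price can be sketched with simple arithmetic: a provider has to recover the cost of every GPU-hour it owns from only the hours that are actually rented. The cost figure and utilization levels below are hypothetical, made up purely for illustration, and do not describe Thunder Compute's or any other provider's real economics.

```python
def break_even_price(cost_per_gpu_hour: float, utilization: float) -> float:
    """Hourly price needed to cover costs when only a fraction of hours are rented."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return cost_per_gpu_hour / utilization


HYPOTHETICAL_COST = 0.50  # $/GPU-hour of ownership cost, illustrative only

for utilization in (0.40, 0.70, 0.95):  # illustrative utilization levels
    price = break_even_price(HYPOTHETICAL_COST, utilization)
    print(f"{utilization:.0%} utilization -> break-even price ${price:.2f}/hr")
```

The higher the share of rented hours, the closer the break-even price sits to the underlying hardware cost, which is the mechanism behind the claim above.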

When you're choosing between providers, availability matters as much as price. Our GPU selection guide helps you understand which hardware you need and which providers can reliably deliver it.

Major Cloud Provider Competition

The competitive market has three distinct tiers. AWS, Microsoft, and Google dominate the enterprise market with full-service offerings but premium pricing. Specialized providers like CoreWeave focus on "no-compromises" cloud computing optimized for large-scale training and inference, featuring cutting-edge NVIDIA GPUs including the latest Blackwell instances.

Then there's the new tier of cost-focused providers that combine accessibility with competitive pricing. This is where Thunder Compute operates, offering the reliability and ease of use you'd expect from major cloud providers, combined with the specialized focus and aggressive pricing of boutique GPU providers.

The GPU market evaluation report shows how different providers are positioning themselves. Major clouds compete on enterprise features and global reach. Specialized providers compete on performance and cutting-edge hardware access.

We compete on removing friction and delivering exceptional value. Our VS Code integration, one-click deployment, and persistent storage come standard, not as premium add-ons. When you account for setup time and day-to-day overhead, Thunder Compute often delivers better value even before you factor in our lower hourly rates.

The Lambda Labs alternatives analysis shows how different providers serve different use cases. Our sweet spot is developers and teams who want professional-grade GPU access without enterprise complexity or pricing.

Specialized GPU Provider Analysis

Let's look at specialized provider options. Vast.ai operates as a decentralized marketplace where individuals rent out idle GPUs at much lower prices than traditional cloud providers. This works well for spot workloads but can be unreliable for production use.

Lambda Labs offers a GPU cloud tailored for AI developers with simple workflows and high-end hardware. They're known for hybrid cloud and colocation features, serving customers who need dedicated infrastructure.

RunPod provides both on-demand and serverless GPU access with a focus on inference workloads. Our cloud GPU provider comparison shows how different approaches serve different needs.

AI Startup GPU Requirements Evolution

AI startups have unique requirements. They need production-grade infrastructure for rapid iteration and deployment, but they're running lean and can't commit to long-term contracts.

Training complex models like LLMs from scratch can require thousands of GPUs, but most startups are fine-tuning existing models or building specialized applications, which need far less.

This is where Thunder Compute's flexible scaling model shines. You can start with a single A100 for prototyping and experimentation, then scale up to multiple GPUs when you're ready for larger training runs. No long-term commitments, no complex configurations.

The GPU machine learning comparison between on-premises and cloud approaches shows why startups increasingly choose cloud-first strategies. The capital requirements and complexity of managing your own GPU infrastructure don't make sense for most early-stage companies.

Our startup-focused GPU cloud guide breaks down the specific considerations for Series A and Series B companies. The ability to iterate quickly, scale resources on demand, and maintain cost predictability often matters more than having access to the absolute latest hardware.

The GPU provider comparisons show how different companies approach GPU infrastructure decisions. The common thread among successful AI startups is choosing providers that allow rapid experimentation without extra overhead.

Regional Market Differences and Global Expansion

GPU rental prices vary widely by region, creating opportunities for cost optimization. U.S. East Coast deployments average $5.76 per unit per day, while West Coast deployments run $6.60 per unit per day as of March 2025. These regional price variations can add up to substantial differences for long-running workloads.
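To see how that regional gap compounds over a long-running workload, the sketch below multiplies out the two per-unit daily rates quoted above. The fleet size of 8 units and the 30-day duration are hypothetical values picked for illustration.

```python
EAST_COAST_RATE = 5.76  # $ per unit per day, from this article (March 2025)
WEST_COAST_RATE = 6.60  # $ per unit per day, from this article (March 2025)


def deployment_cost(rate_per_unit_day: float, units: int, days: int) -> float:
    """Total cost of running `units` for `days` at a flat daily rate."""
    return rate_per_unit_day * units * days


UNITS = 8   # hypothetical fleet size
DAYS = 30   # hypothetical run length

east = deployment_cost(EAST_COAST_RATE, UNITS, DAYS)
west = deployment_cost(WEST_COAST_RATE, UNITS, DAYS)
saving = west - east

print(f"East Coast: ${east:,.2f}")
print(f"West Coast: ${west:,.2f}")
print(f"Difference: ${saving:,.2f} ({saving / west:.1%} of the West Coast cost)")
```

The percentage gap stays the same at any scale; only the absolute dollar difference grows with fleet size and duration.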

North America currently holds the largest market share, but Asia Pacific is projected to be the fastest-growing region. This geographic expansion is creating new opportunities for providers who can deliver consistent experiences across regions.

Thunder Compute's global accessibility provides consistent performance, developer experience, and pricing across regions.

For developers and startups, the key is finding providers who can deliver consistent experiences without requiring you to become experts in global infrastructure management.

Technology Infrastructure Improvements

Recent advances in GPU networking, cooling, and data center performance are allowing better price-performance ratios across the industry. NVIDIA's vision for "AI factories" includes large-scale data centers with advanced power and cooling systems, such as the Lancium Clean Campus in Texas scaling from 200 MW to 1.2 GW by 2026, hosting up to 50,000 GPUs per building.

These infrastructure improvements create opportunities for better GPU use and improved cost economics. The data center market trends show how power improvements, cooling advances, and networking progress are reducing costs.

Thunder Compute's orchestration technology allows features like swapping GPU types, persistent storage across instance lifecycle, and near-instant scaling. These features come from software improvements rather than hardware scale, which allows us to pass savings directly to users.

December 2025 Market Predictions and Outlook

Looking ahead to late 2025 and early 2026, several trends are coming together to create a more mature and competitive GPU rental market. Prices are expected to stabilize with potential discounts from new GPU releases, while analysts predict relatively stable H100 prices with only minor adjustments despite ongoing enterprise demand.

The GPU-as-a-service market analysis suggests that competition will increasingly focus on developer experience, reliability, and specialized features rather than price competition alone.

This plays to Thunder Compute's strengths. We've built our service around developer experience from day one, with VS Code integration, one-click deployment, and persistent storage as standard features. As the market matures, these differentiators become more important than pure price competition.

The supply chain improvements and increased competition among hardware providers should continue to benefit end users through better availability and more predictable pricing. The wild price swings and availability constraints of 2023-2024 are giving way to a more stable market.

FAQ

Q: What's the main difference between A100 and H100 GPUs for AI development?

A: H100s offer up to 4x the performance of A100s in specific workloads, particularly those that benefit from higher memory bandwidth and NVIDIA's Hopper architecture. However, A100s provide better cost-performance for most fine-tuning tasks, model inference, and development work, making them the optimal choice for 80% of AI development tasks.

Q: How much can I save by switching from major cloud providers to specialized GPU rental services?

A: You can typically save 45-61% on GPU costs by switching from major cloud providers to specialized services like Thunder Compute. For example, A100 80GB instances cost around $0.78/hour compared to $1.64-2.00/hour from typical competitors, representing savings of 52-61%.

Q: What should I consider when choosing between different GPU rental providers?

A: Focus on three key factors: pricing transparency, reliable availability, and developer experience. Look for providers that offer consistent on-demand pricing (not just spot rates), dependable availability during peak demand, and built-in conveniences like VS Code integration, persistent storage, and one-click deployment.

Final thoughts on AI GPU rental market shifts

The AI GPU rental market has changed dramatically, with prices dropping and availability improving across the board. Whether you're fine-tuning models or running experiments, you can now rent AI GPUs at prices that make sense for your projects.