How to Pick the Right GPU for AI Workflows 2025

As someone who's obsessed with helping teams with their GPU compute, I've seen the same costly mistake repeatedly: choosing the wrong GPU and watching training times explode or projects crash due to memory limitations. In practice, most teams start with a single GPU for prototyping, then scale horizontally to 4 or 8 GPUs when moving into production fine-tuning. Most teams struggle with which GPU they need because the requirements vary dramatically between fine-tuning existing models, running inference, and training from scratch. In this guide, I'll walk you through the exact framework for matching your AI workload to the optimal GPU configuration, from calculating VRAM requirements to scaling resources dynamically as your project evolves.

TLDR:

- Match VRAM to your model size: a 7B model can often be fine-tuned on a single 24-40GB GPU.

- VRAM needs scale by workload: inference requires the least, fine-tuning typically needs ~1.5-2x inference VRAM, and full training can be 4x heavier

- Scaling usually means multi-GPU: moving from 1 GPU to 4-8 A100s/H100s is more common than upgrading to a single larger GPU.

- Thunder Compute lets you swap GPU types mid-project without losing your environment

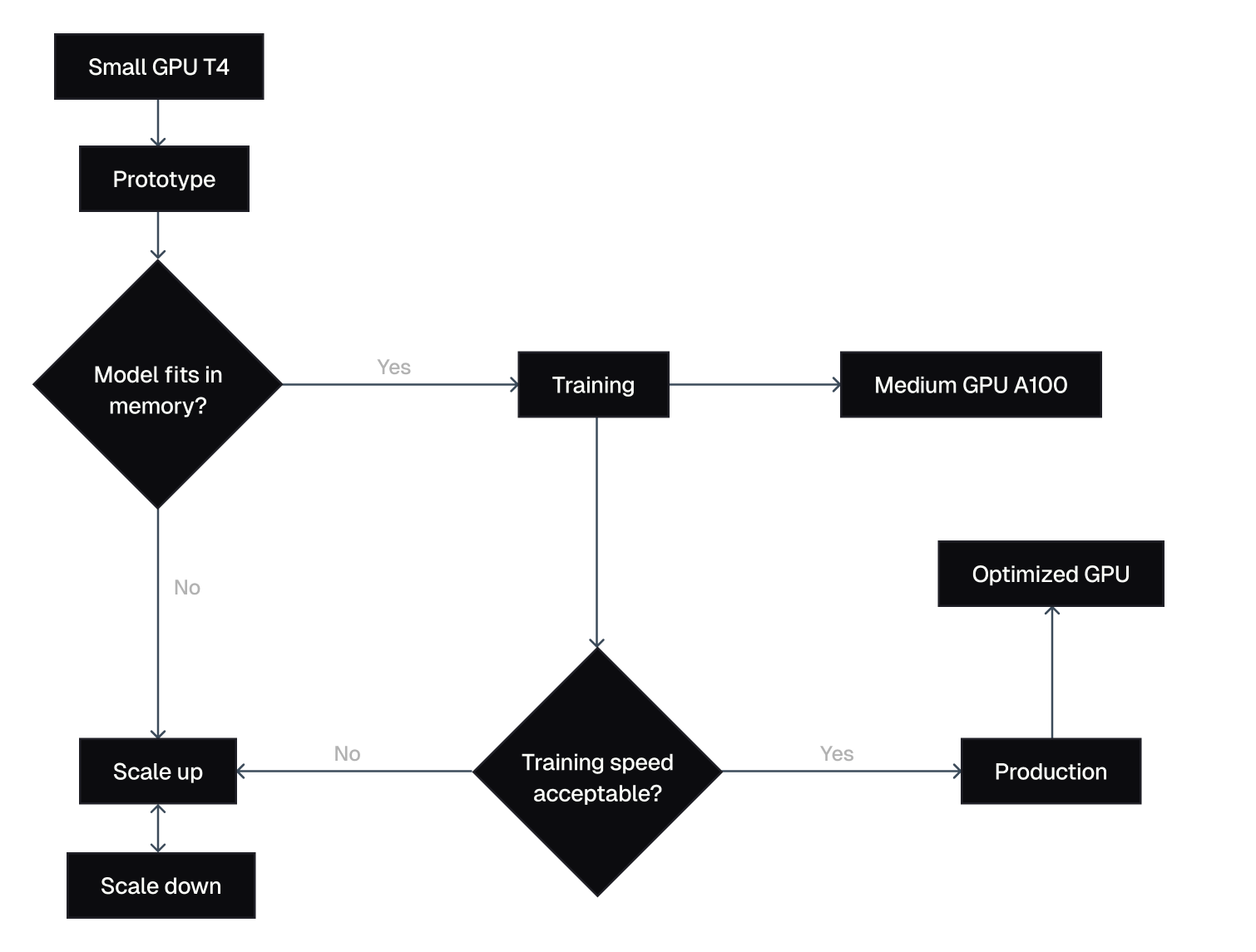

- Start small for prototyping, scale up for training, then optimize for production costs

Why GPU Selection Matters for AI Projects

The GPU infrastructure you choose can make or break your AI project's success. We've seen teams waste months of development time and thousands of dollars simply because they picked the wrong hardware for their workload.

Iteration Speed Impact

Your GPU choice directly determines how quickly you can run fine-tuning jobs and re-train variations of your model. A fine-tune that finishes in 2 hours on an A100 might take 20 hours on a T4. That difference isn’t just convenience, it’s the difference between running multiple experiments in a single day versus waiting days for results. Faster iteration means faster model improvement, lower overall costs, and quicker deployment into production.

Memory Constraints

VRAM limitations are the most common project killer we encounter. If your model doesn't fit in GPU memory, it simply won't fine-tune or train. Period. You can't work around insufficient VRAM with clever optimization tricks beyond a certain point.

Cost Savings

The wrong GPU wastes money in multiple ways. Overpowered GPUs burn budget on unused features, while underpowered ones extend training times and increase total costs. GPU cloud services help optimize this balance by letting you match resources to workloads precisely.

Scalability Limitations

Scaling from prototype to production usually means moving from 1 GPU to 4-8 GPUs. This is where A100s and H100s shine. They’re designed for efficient multi-GPU scaling with NVLink and high memory bandwidth.

Framework Compatibility

Different GPUs have varying levels of support for ML frameworks and optimization libraries. Newer architectures often provide better performance with the latest versions of PyTorch, TensorFlow, and specialized libraries like Hugging Face Transformers.

The good news is that cloud GPU services eliminate much of this risk. You can test different configurations, measure actual performance on your workloads, and adjust resources as needed without massive upfront investments.

Assess Your VRAM Requirements

Model Size Calculations

Full model training is rare and extremely resource-intensive. A 7B parameter model can require ~112GB VRAM for full training, which usually means multi-GPU clusters. Most teams don’t do this from scratch.

For inference, you typically need: Model Parameters x 2 bytes (for half-precision). That same 7B model requires about 14GB for inference, making it feasible on smaller GPUs.

Fine-tuning VRAM Needs

For most teams, fine-tuning a pre-trained model is the practical path. Parameter-efficient methods like LoRA and QLoRA cut VRAM requirements dramatically:

- LLaMA 7B can be fine-tuned on a single 24-40GB GPU.

- LLaMA 13B typically fits on an A100 40GB.

- Even larger models can often be fine-tuned across 2-4 GPUs with data parallelism.

Instead of the 4x VRAM multiplier that full training requires, fine-tuning often falls within 1.5-2x the inference VRAM footprint.

Batch Size Impact

Larger batch sizes improve training speed but require more VRAM. If you're working with limited memory, you can use gradient accumulation to simulate larger batches while using smaller actual batch sizes.

Popular Model VRAM Requirements

Here are approximate VRAM needs for common models:

| Model | Inference VRAM | Fine-tuning VRAM (LoRA/QLoRA) |

|---|---|---|

| GPT-2 (1.5B) | 3GB | ~6GB |

| LLaMA 7B | 14GB | ~24GB (40GB recommended for headroom) |

| LLaMA 13B | 26GB | ~40GB |

| LLaMA 70B | 140GB | Multi-GPU (4-8x A100/H100) |

When fine-tuning pushes past single-GPU limits, the natural step isn’t upgrading to a larger single card, but moving to 4-8 GPUs. This is where NVLink on A100s and H100s really matters, allowing large-batch or large-model fine-tuning without rewriting your workflows.

Optimization Techniques

Several techniques can reduce VRAM requirements:

- Mixed precision training (FP16/BF16)

- Gradient checkpointing

- Model parallelism across multiple GPUs

- Parameter-efficient fine-tuning (LoRA, QLoRA)

- Gradient accumulation

Understanding your actual VRAM needs helps you choose the most cost-effective GPU without overprovisioning resources.

Choose the Right GPU Architecture

Different GPU architectures excel at different types of AI workloads. Understanding these strengths helps you optimize both performance and cost.

T4 Architecture

T4 GPUs are the entry-level option for AI workloads. They’re cost-effective for inference, prototyping, and fine-tuning smaller models. With QLoRA and aggressive quantization, it’s technically possible to fine-tune a 7B model on a T4, but performance is limited and not practical for production.

T4s shine for inference workloads where you need consistent performance at low cost. They're also excellent for prototyping and development before scaling to larger GPUs for production training.

A100 Architecture

A100 GPUs are the sweet spot for most AI training workloads. Available in 40GB and 80GB VRAM configurations, they provide third-generation Tensor cores and much higher memory bandwidth than T4s.

The 80GB variant handles most practical training scenarios, including fine-tuning AI models and training medium-sized models from scratch. A100s also support multi-instance GPU (MIG) technology, allowing you to partition a single GPU into smaller instances for better resource use.

H100 Architecture

H100 GPUs are the cutting edge, designed for the largest and most demanding AI workloads. They feature fourth-generation Tensor cores, massive memory bandwidth, and advanced interconnect technologies for multi-GPU scaling.

H100s excel at training very large models, running inference on massive language models, and research workloads that push the boundaries of what's possible. However, they're overkill for most practical applications and much more expensive.

Architecture Selection Guidelines

Choose T4 for:

- Inference workloads

- Small model training (under 1B parameters)

- Development and prototyping

- Computer vision tasks

- Budget-conscious projects

Choose A100 for:

- Medium to large model training

- Fine-tuning advanced models

- Production inference for large models

- Multi-GPU training setups

- Most commercial AI applications

- Most production teams don’t just use one A100, they scale out with 4-8 for fine-tuning and large-batch workloads

Choose H100 for:

- Cutting-edge research

- Training models over 70B parameters

- High-throughput inference for very large models

- When you need the absolute best performance

- Unless you’re training >70B-parameter models from scratch, scaling multiple A100s delivers better ROI than jumping to a single H100

The flexibility of cloud GPU services means you can start with one architecture and upgrade as your needs grow. This approach minimizes risk and optimizes costs throughout your project lifecycle.

Match GPU Type to Workload Requirements

LLM Training

LLM training demands high VRAM and memory bandwidth. Full training of models over 7B parameters typically requires A100 80GB or H100 GPUs. The massive parameter counts and long sequence lengths create substantial memory pressure.

For LLM training, focus on VRAM capacity over raw compute power. Memory bandwidth also matters a lot because these models spend considerable time moving data rather than computing.

Fine-tuning Workflows

Fine-tuning is more forgiving than full training. Techniques like LoRA allow fine-tuning of large models on smaller GPUs. A100 40GB can handle fine-tuning of most models up to 13B parameters with proper optimization.

Parameter-efficient fine-tuning methods have democratized access to large model customization. While you can fine-tune a 7B model on a T4 with QLoRA, most teams prefer A100 40GB for usable training speeds

Computer Vision Tasks

Computer vision workloads often benefit more from compute power than extreme VRAM capacity. Image classification, object detection, and segmentation models typically fit comfortably in 16-40GB VRAM.

T4 and A100 40GB GPUs handle most computer vision tasks effectively. The choice depends more on training speed requirements than memory constraints.

Inference Workloads

Inference optimization focuses on throughput and latency rather than training performance. T4 GPUs often provide the best cost-performance ratio for inference, especially when serving multiple smaller models or handling variable loads.

For large model inference, A100s provide better throughput per dollar despite higher hourly costs. The key is matching GPU capacity to your expected request volume.

Research and Experimentation

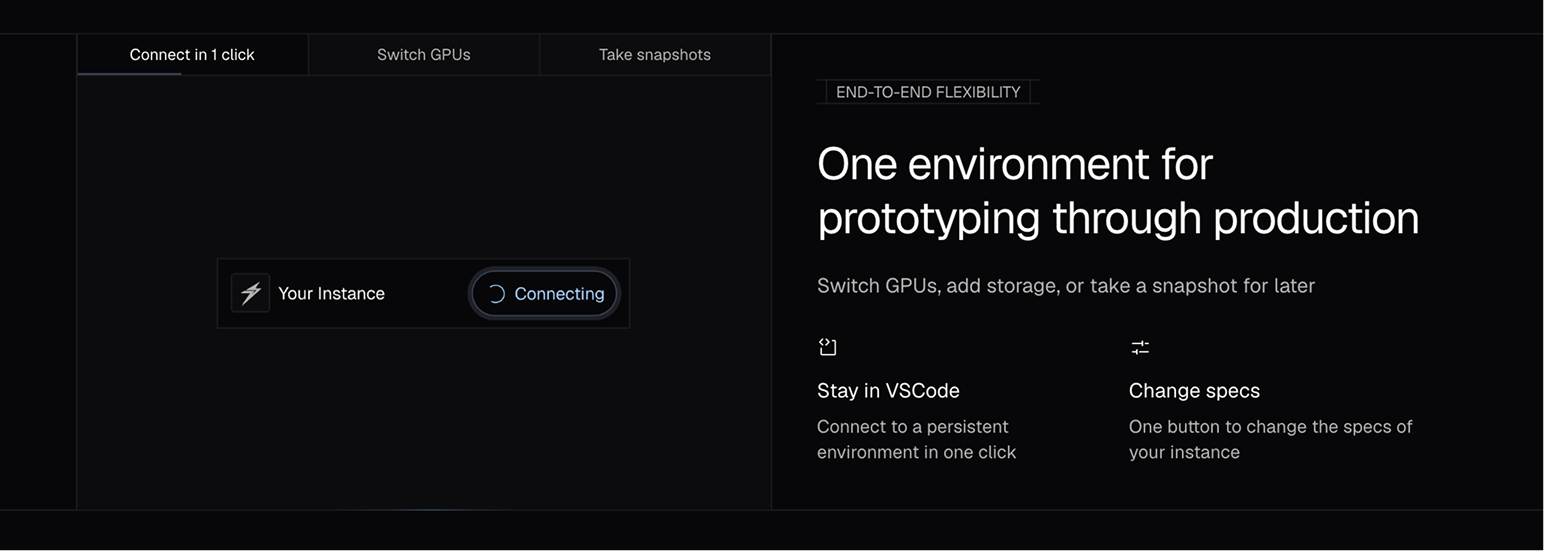

Research workloads benefit from flexibility more than any specific optimization. The ability to quickly switch between GPU types as experiments evolve is important. Thunder Compute excels here by allowing hardware changes without environment reconfiguration.

Multi-GPU Considerations

Some workloads scale well across multiple GPUs while others don't. Model parallelism works well for very large models, while data parallelism suits smaller models with large datasets. A100 and H100 GPUs provide better multi-GPU scaling than T4s.

Understanding your workload characteristics helps you choose the most appropriate GPU type and avoid both performance bottlenecks and unnecessary costs.

Scale and Optimize Your GPU Usage

Effective GPU usage goes beyond initial selection. Monitoring performance, adjusting resources as needed, and optimizing costs throughout your project lifecycle maximizes value.

Performance Monitoring

Track key metrics to understand if your GPU choice is optimal:

GPU utilization: Should stay above 80% during training

Memory utilization: Aim for 85-95% of available VRAM

Training throughput: Measure samples per second or tokens per second

Cost per epoch: Track total fine-tuning and training costs over time

Low GPU utilization often indicates CPU bottlenecks, inefficient data loading, or suboptimal batch sizes. High memory utilization with low compute utilization suggests memory bandwidth limitations.

Advanced Optimization Features

Thunder Compute's GPU orchestration provides unique optimization features:

- Swap GPU types without losing your environment

- Persistent storage maintains data across instance changes

- VS Code integration speeds up development workflows Instance templates accelerate common setup tasks

Scaling Strategies

Plan your scaling approach based on project phases:

Development: Use cost-effective GPUs for code development and small-scale testing Training: Scale to appropriate GPUs based on model size and timeline requirements Production: Optimize for inference throughput and cost savings Maintenance: Use minimal resources for monitoring and occasional retraining

Multi-GPU Scaling

When single GPUs become insufficient, consider multi-GPU approaches:

Data parallelism: Distribute batches across multiple GPUs Model parallelism: Split large models across multiple GPUs

Pipeline parallelism: Process different model layers on different GPUs Hybrid approaches: Combine techniques for maximum performance

The key to successful scaling is maintaining flexibility. Avoid locking into specific hardware configurations too early in your project lifecycle.

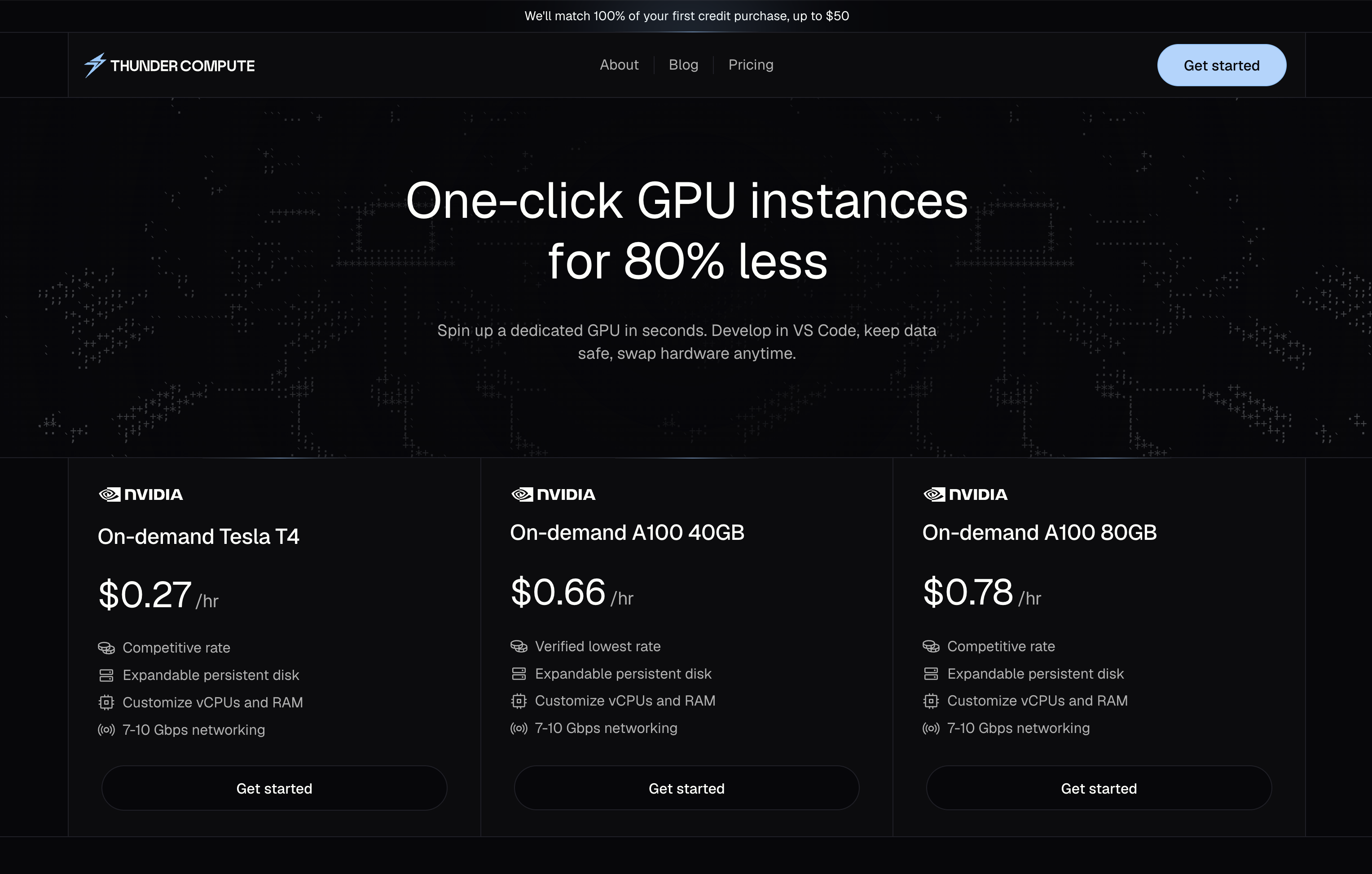

Why Thunder Compute is The Best Option

- Up to 80% cheaper than major cloud providers like AWS. Thunder Compute delivers on-demand GPU instances, including T4, A100 40 GB, and A100 80 GB at significantly lower hourly prices.

- Our pay-per-minute billing model ensures you're only charged for what you use. No more paying for idle minutes.

- One-command provisioning: You can go from CPU-only dev to GPU cluster via CLI in one line. No setup hassle.

- VS Code integration: Spin up, connect, and switch GPUs directly from your IDE. No complex config or deployment needed.

- Persistent storage, snapshots, spec changes: You can upgrade or modify your instance (vCPUs, RAM, GPU type) without tearing down your environment.

FAQ

Q: What's the main difference between T4, A100, and H100 GPUs for AI workloads?

A: T4 GPUs (16GB VRAM) are cost-effective for inference and small model training, A100 GPUs (40GB/80GB VRAM) handle most practical training scenarios including LLM fine-tuning, and H100 GPUs (80GB VRAM) are designed for cutting-edge research and the largest models. The choice depends on your model size, budget, and performance requirements.

Q: When should I switch from a smaller GPU to a larger one during my project?

A: Start with a single GPU for prototyping, then scale out to 4-8 GPUs when fine-tuning at production scale. If your model won’t fit or training/fine-tuning is too slow, that’s when you add more GPUs. Thunder Compute lets you make this jump seamlessly.

Final thoughts on selecting the right GPU for AI workloads

The GPU decision doesn't have to be permanent or perfect from day one. Most teams benefit from starting smaller and scaling up as their models and requirements evolve. If you're still unsure about choosing the right GPU for your specific workload, Thunder Compute's flexible cloud environment removes much of the guesswork for up to 80% off.