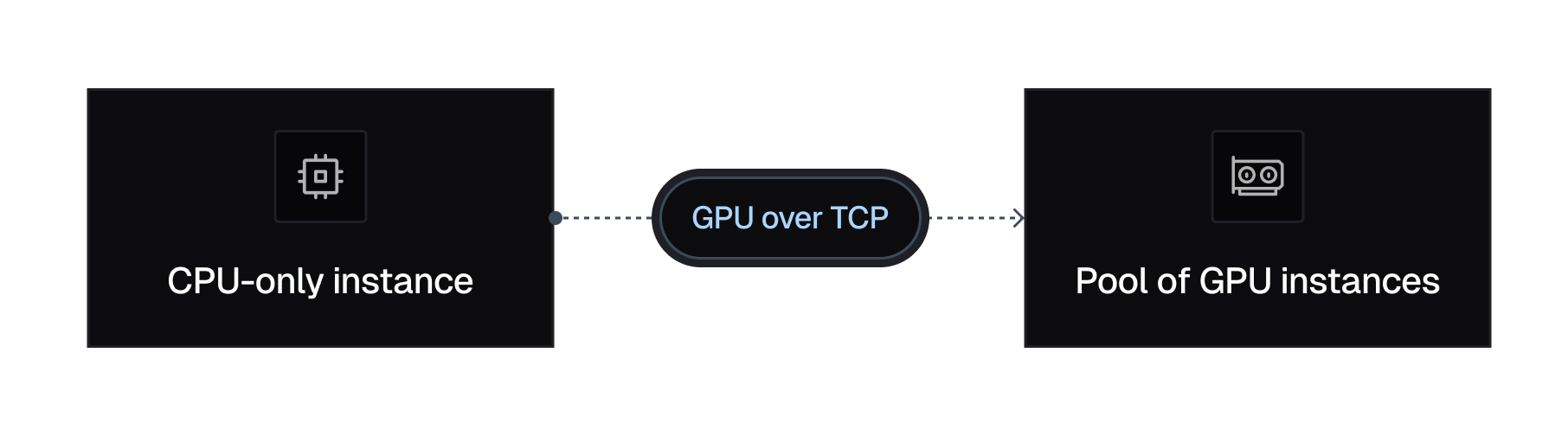

How Thunder Compute works (GPU-over-TCP)

1. Why make GPUs more efficient?

GPUs are expensive, and they often sit idle while you read logs or tweak hyperparameters. With Thunder Compute, an idle GPU detaches from your server instead of sitting unused. When you need a GPU again, your instance transparently claims one within tens of milliseconds. This is different from a scheduler like Slurm: everything happens behind the scenes, in real time, without waiting.

2. How does Thunder Compute work?

- Network‑attached: The GPU sits across a high‑speed network instead of a PCIe slot. Your virtual machine communicates with the GPU over TCP—the same protocol your browser uses.

- Feels local: You still `pip install torch`, use `device="cuda"`, and go. Behind the scenes, our instance translates those calls into network messages.

- Sole tenancy: When your process runs, it owns the whole GPU. You have access to the full VRAM and compute of the card you pay for. When the process finishes (or you idle out), we can pass that GPU to someone else.
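To make "translates those calls into network messages" concrete, here is a minimal sketch of call forwarding over TCP using only the Python standard library. It is a toy illustration, not Thunder Compute's actual protocol: the JSON message format, the `add`/`mul` operations, and the names `serve_once`, `remote_call`, and `demo` are all assumptions made up for this example.

```python
import json
import socket
import threading


def serve_once(server_sock):
    """Toy 'GPU side': accept one connection, run one named operation, reply."""
    conn, _ = server_sock.accept()
    with conn, conn.makefile("r") as reader:
        request = json.loads(reader.readline())
        # Hypothetical operations standing in for real GPU work.
        ops = {"add": lambda a, b: a + b, "mul": lambda a, b: a * b}
        result = ops[request["op"]](*request["args"])
        conn.sendall((json.dumps({"result": result}) + "\n").encode())


def remote_call(address, op, *args):
    """Toy 'client side': serialize a call, send it over TCP, return the reply."""
    with socket.create_connection(address) as sock, sock.makefile("r") as reader:
        sock.sendall((json.dumps({"op": op, "args": args}) + "\n").encode())
        reply = json.loads(reader.readline())
    return reply["result"]


def demo():
    """Run the toy server and client in one process over the loopback interface."""
    server_sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server_sock.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    server_sock.listen(1)
    worker = threading.Thread(target=serve_once, args=(server_sock,))
    worker.start()
    try:
        return remote_call(server_sock.getsockname(), "mul", 6, 7)
    finally:
        worker.join()
        server_sock.close()
```

The caller of `remote_call` sees an ordinary function that returns a value; the fact that the work ran on the other end of a socket is hidden, which is the same idea behind keeping `device="cuda"` unchanged in your code.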

3. Does this affect latency?

Slightly. The initial connection takes about 10-20 milliseconds (for comparison, a blink takes about 200 milliseconds), plus a proportional increase in runtime. Fortunately, most ML jobs spend far more time computing than waiting for data. By strategically optimizing the way your program runs behind the scenes, we can prevent network latency from affecting your GPU computation. Check our docs to see which workloads we've tested most thoroughly.
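As a rough sanity check on the connection figure, the sketch below computes what share of total wall time a one-time connection cost represents. It covers only the 10-20 millisecond connection cost quoted above; the ongoing runtime increase is not quantified here, so it is left out. The function name and the 60-second job length are assumptions chosen for illustration.

```python
def connection_overhead_fraction(connect_ms, job_seconds):
    """Fraction of total wall time spent on the one-time connection cost."""
    connect_s = connect_ms / 1000.0
    return connect_s / (connect_s + job_seconds)


# Assumed example: worst-case 20 ms connection, hypothetical 60-second job.
fraction = connection_overhead_fraction(connect_ms=20, job_seconds=60)
print(f"{fraction:.4%} of wall time is connection overhead")
```

For any job that runs longer than a few seconds, the connection cost is a small fraction of a percent of the total.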

4. Is Thunder Compute secure?

When your job ends, we wipe every byte of GPU memory and reset the card so no data leaks to the next user. Each process runs in its own sandbox.

Tell us what you need—ping our team in Discord. Spin up an A100 GPU and see how it feels.