Hadoop vs Spark: Performance, Cost, and Use Case Analysis (December 2025)

Apache Hadoop and Apache Spark represent two fundamentally different approaches to big data processing. Both technologies have evolved considerably, and in 2025 the "right" choice for a project depends on much more than comparing speeds and prices. It's also worth keeping in mind that modern GPU-accelerated solutions make it easier to accelerate either framework with GPU power at a fraction of traditional cloud costs.

TLDR:

- Spark can deliver 10x to 100x faster performance than Hadoop for machine learning and real-time analytics, via in-memory processing.

- Hadoop costs 30% to 40% less for hardware, but Spark can reduce total ownership costs by 40% to 60% through shorter processing times.

- Hadoop is usually a better choice for large batch jobs and data warehousing, while Spark is usually a better choice for iterative ML workloads and real-time processing.

- Many organizations use hybrid approaches, storing data in HDFS while processing with Spark for optimal cost-performance balance.

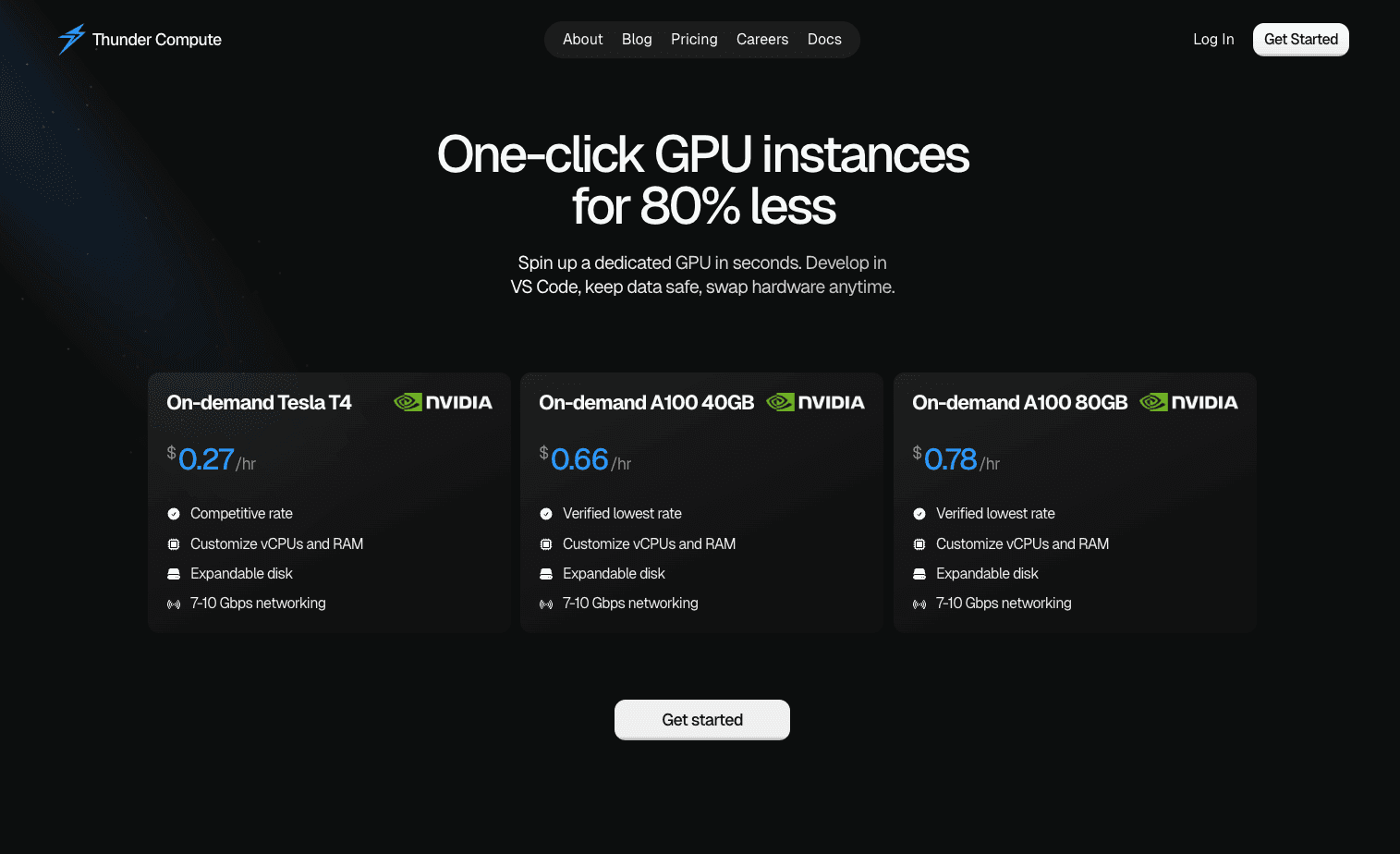

- Thunder Compute provides GPU acceleration for both frameworks at an 80% lower cost than AWS, with A100 instances at $0.78 per hour.

Understanding Hadoop and Spark: The Foundation of Big Data Processing

Hadoop came about as a distributed computing framework built around two core components: the Hadoop Distributed File System (HDFS) for storage and MapReduce for processing. Over time, it evolved into a broader ecosystem that includes YARN for resource management, Hive for SQL-based querying, and Pig for high-level data transformations. Think of Hadoop as a reliable workhorse designed to handle massive datasets across clusters of commodity hardware. It excels at breaking down large problems into smaller chunks, distributing them across multiple machines, and reassembling the results.

Spark takes a different approach. Rather than relying on disk-based processing like Hadoop's MapReduce, Spark keeps data in memory between operations. This architectural difference makes Spark much faster for iterative workloads like machine learning algorithms that need to access the same data repeatedly.

The key distinction lies in their processing models. Hadoop MapReduce writes intermediate results to disk after each operation, focusing on reliability over speed. Spark maintains data in RAM whenever possible, thereby dramatically reducing I/O overhead for complex analytical workflows.
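To make the difference concrete, here is a toy sketch in plain Python. It uses neither Hadoop nor Spark; the function names `iterate_via_disk` and `iterate_in_memory` are hypothetical, and the "work" is just doubling a list of numbers. The only point is to count how many disk operations each style performs for the same multi-pass job.

```python
import json
import os
import tempfile


def iterate_via_disk(values, passes, workdir):
    """MapReduce-style toy: every pass reads its input from disk and writes its output back."""
    path = os.path.join(workdir, "stage_0.json")
    with open(path, "w") as f:
        json.dump(values, f)
    disk_ops = 1  # initial write of the input
    for i in range(passes):
        with open(path) as f:            # read the previous stage's output
            data = json.load(f)
        disk_ops += 1
        data = [x * 2 for x in data]     # the actual work for this pass
        path = os.path.join(workdir, f"stage_{i + 1}.json")
        with open(path, "w") as f:       # persist the intermediate result
            json.dump(data, f)
        disk_ops += 1
    with open(path) as f:                # final read of the result
        result = json.load(f)
    disk_ops += 1
    return result, disk_ops


def iterate_in_memory(values, passes):
    """Spark-style toy: data stays in RAM between passes, so no disk operations are counted."""
    data = list(values)
    for _ in range(passes):
        data = [x * 2 for x in data]
    return data, 0


with tempfile.TemporaryDirectory() as tmp:
    disk_result, disk_ops = iterate_via_disk([1, 2, 3], passes=5, workdir=tmp)
mem_result, mem_ops = iterate_in_memory([1, 2, 3], passes=5)
```

Both functions produce the same result, but the disk-backed version touches storage twice per pass. On a real cluster those reads and writes also cross the network and involve replication, which is why the gap grows with the number of iterations.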

Hadoop vs Spark: Performance Analysis

The performance gap between Hadoop and Spark remains pronounced in 2025. Depending on the workload, Spark can deliver up to 100x faster processing for in-memory operations and up to 10x faster performance even when using disk storage.

This dramatic difference stems from their fundamental architecture. Spark's in-memory processing keeps data in RAM between operations, eliminating the constant disk reads and writes that slow down Hadoop's MapReduce model. When you're running iterative algorithms or complex analytics, this translates to considerable time savings.

Spark's Directed Acyclic Graph (DAG) execution engine provides another performance advantage. It optimizes the entire workflow before execution, identifying opportunities to combine operations and minimize data movement. Hadoop's linear MapReduce model lacks this optimization feature, processing each step independently without considering the broader workflow.
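The idea of combining operations can be illustrated with a small, self-contained sketch. The `LazyPipeline` class below is hypothetical and far simpler than Spark's actual scheduler: it only records `map` and `filter` steps lazily and then runs them all in a single pass over the input when `collect` is called, rather than materializing an intermediate dataset after every step.

```python
class LazyPipeline:
    """Toy lazy pipeline: records steps, then runs them in one fused pass over the data."""

    def __init__(self, source, steps=()):
        self.source = source
        self.steps = tuple(steps)

    def map(self, fn):
        # Nothing is computed here; the step is only recorded.
        return LazyPipeline(self.source, self.steps + (("map", fn),))

    def filter(self, pred):
        return LazyPipeline(self.source, self.steps + (("filter", pred),))

    def collect(self):
        out = []
        for item in self.source:         # single pass over the input
            keep = True
            for kind, fn in self.steps:  # apply every recorded step to this item
                if kind == "map":
                    item = fn(item)
                elif not fn(item):       # a filter rejected the item
                    keep = False
                    break
            if keep:
                out.append(item)
        return out


result = (
    LazyPipeline(range(10))
    .map(lambda x: x * x)
    .filter(lambda x: x % 2 == 0)
    .map(lambda x: x + 1)
    .collect()
)
```

Because the whole chain is known before anything runs, the three steps execute as one loop with no intermediate lists. A step-by-step model would instead produce and store a full intermediate dataset after each operation.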

| Processing Type | Hadoop MapReduce | Apache Spark |
|---|---|---|
| Memory usage | Low | High |
| Disk I/O | Heavy | Minimal |
| Iterative tasks | Slower | Up to 100x faster |
| Batch processing | Optimized | Good |
| Real-time processing | Limited | Excellent |

However, Hadoop has advantages in specific scenarios. When you're processing massive datasets that exceed available memory, the performance gap between Hadoop and Spark is a lot narrower. Single-pass batch processing jobs that don't require iterative operations can run well on Hadoop's proven architecture.

In GPU-accelerated analytics environments, the choice between Hadoop and Spark often depends on whether you need the computational power for training models or processing results.

Organizations running machine learning pipelines benefit from pairing Spark with GPU resources for model training, while using Hadoop for large-scale data preparation tasks.

Hadoop vs Spark: Cost Comparison

The cost equation between Hadoop and Spark has shifted dramatically in 2025, with infrastructure pricing and workflow costs creating complex trade-offs that vary greatly by use case.

Hadoop's cost advantage lies in its ability to run on commodity hardware with minimal memory requirements. A typical Hadoop cluster node needs only 8GB to 16GB RAM but requires substantial disk storage for intermediate processing results. This translates to lower upfront hardware costs, with standard servers costing 30% to 40% less than memory-optimized alternatives.

Spark flips this equation by demanding 64GB to 128GB RAM per node for large-scale production clusters but dramatically reducing compute hours through faster processing. While memory-optimized servers cost more initially, Spark's speed often delivers superior ROI for iterative workloads and complex analytics.

Hadoop's longer processing times, by contrast, mean higher electricity costs and extended cluster use. A machine learning pipeline that takes 10 hours on Hadoop might complete in two hours on Spark, cutting cluster runtime by 80%.

Organizations may see 40% to 60% lower total cost of ownership with Spark for analytics workloads, despite higher infrastructure costs.

Cloud deployment costs favor Spark even more strongly. Memory-optimized instances cost 20% to 30% more per hour, but the reduced runtime often results in 50% to 70% lower total bills. For teams using GPU rental for accelerated analytics, pairing Spark with cost-effective GPU resources can deliver exceptional value.
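The trade-off between a higher hourly rate and a shorter runtime is simple arithmetic. The sketch below uses hypothetical numbers: a $1.00 per hour standard instance, a 25% premium for the memory-optimized instance (inside the 20% to 30% range above), and an assumed runtime drop from 10 hours to 4 hours. None of these are measured prices or benchmarks.

```python
def total_bill(hourly_rate, hours, nodes=1):
    """Total cost of running a job: rate x runtime x cluster size."""
    return hourly_rate * hours * nodes


def savings_pct(baseline, alternative):
    """Percentage saved by the alternative relative to the baseline."""
    return round(100 * (baseline - alternative) / baseline, 1)


def break_even_hours(baseline_hours, premium):
    """Runtime below which the pricier instance becomes cheaper overall."""
    return baseline_hours / (1 + premium)


# Hypothetical baseline: standard instance at $1.00/hour, job takes 10 hours.
standard = total_bill(hourly_rate=1.00, hours=10)

# Hypothetical alternative: 25% pricier instance, job finishes in 4 hours.
memory_optimized = total_bill(hourly_rate=1.25, hours=4)

savings = savings_pct(standard, memory_optimized)
threshold = break_even_hours(baseline_hours=10, premium=0.25)
```

Under these assumptions the bill drops from $10.00 to $5.00, a 50% saving, and the pricier instance pays for itself as soon as the job finishes in under 8 hours. Plugging in your own rates and measured runtimes is the quickest way to check whether the same holds for a given workload.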

The staffing equation also matters. Spark's unified API reduces development complexity, potentially lowering personnel costs, while Hadoop requires more specialized skillsets.

Hadoop Use Cases and Applications

Despite Spark's performance advantages, Hadoop remains the backbone for several enterprise workloads.

Financial institutions continue to favor Hadoop for risk modeling and regulatory compliance. Since banks process years of historical transaction data to identify patterns and calculate risk exposure, Hadoop's ability to cost-effectively handle massive datasets outweighs any concerns around processing speed.

Retail giants also rely on Hadoop for customer analytics and supply chain optimization. Processing point-of-sale data, inventory records, and customer behavior patterns across thousands of locations requires serious throughput and storage capacity, which Hadoop delivers cost-effectively. And because these batch processing workflows typically run overnight, speed matters less than reliability and cost.

For healthcare organizations, Hadoop's ability to handle diverse data formats while maintaining HIPAA compliance makes it ideal for medical data lakes storing thousands, if not millions, of patient histories, imaging data, and research datasets.

Log analysis is another one of Hadoop's strengths. Companies generating terabytes of server logs, application traces, and security events benefit from Hadoop's cost-effective storage, batch processing power, and ecosystem maturity.

Broadly, organizations moving legacy systems or consolidating data centers often choose Hadoop for its proven reliability in handling complex, large-scale transformations where processing time matters less than successful completion.

For teams requiring GPU acceleration alongside big data processing, budget-friendly alternatives can complement Hadoop workflows without the premium costs of traditional cloud providers.

Spark Use Cases and Applications

Spark has become a de facto standard for machine learning and real-time analytics. Its in-memory processing architecture is well suited to the iterative nature of modern AI workloads.

Machine learning is Spark's strongest use case. The MLlib library provides distributed implementations of common algorithms including clustering, classification, and collaborative filtering. Unlike Hadoop with its disk-based approach, Spark keeps training datasets in memory across iterations, making it ideal for algorithms that need multiple passes through the same data.

Financial services firms complement Hadoop with Spark for real-time fraud detection. Credit card transactions flow through Spark Streaming pipelines that apply machine learning models to flag suspicious patterns in near real time, something Hadoop's batch-focused architecture was not designed for.

E-commerce giants use Spark for recommendation engines that process user behavior data in real-time. As customers browse products, Spark analyzes their actions alongside historical purchase patterns to generate personalized recommendations instantly. The ability to combine streaming data with batch-processed historical data makes Spark uniquely powerful for these hybrid workloads.

Healthcare organizations also complement their Hadoop deployments with Spark for clinical decision support systems that analyze patient data streams from monitoring devices. These applications require both the speed to process real-time health signals and the analytical power to compare them against historical patient databases.

For teams building advanced AI applications, pairing Spark with GPU resources for fine-tuning can create some seriously powerful workflows. Organizations can use Spark for data preprocessing and feature engineering, then use appropriate GPU configurations for model training and inference.

Choosing Between Hadoop and Spark for Your Project

Selecting between Hadoop and Spark requires checking your specific project requirements against each technology's strengths. The decision framework should consider data characteristics, processing patterns, budget constraints, and team skills.

Start by analyzing your data processing patterns. If you're running nightly batch jobs that process historical data once and store results, Hadoop's cost-effective approach makes sense. However, if your workflows involve iterative processing, real-time analytics, or machine learning pipelines, Spark's in-memory architecture delivers superior value despite higher infrastructure costs.

| Factor | Choose Hadoop | Choose Spark |
|---|---|---|
| Budget Priority | Cost-effective hardware | Performance over cost |
| Data Processing | Large batch jobs | Real-time/interactive |
| Team Skills | SQL/Java | Python/Scala |
| Infrastructure | On-prem friendly | Cloud-optimized |
| Workload Type | ETL/Data warehousing | ML/Analytics |

That said, hardware costs are only part of the equation. Once total ownership costs like electricity, maintenance, and extended processing times are accounted for, many teams find that a hybrid approach delivers the best balance of performance and efficiency. By storing data in HDFS and processing with Spark, organizations can pair Hadoop's low-cost storage and batch processing with Spark's in-memory speed for analytics and machine learning workloads.
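As a rough illustration, the decision framework above can be written down as a small rule-based helper. The `Workload` fields and the ordering of the rules in `recommend` are assumptions made for this sketch, not a definitive rubric; real decisions would also weigh team skills and existing infrastructure.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    iterative: bool           # multiple passes over the same data (e.g. ML training)
    real_time: bool           # needs streaming or interactive latency
    fits_in_memory: bool      # working set fits in cluster RAM
    budget_constrained: bool  # hardware cost is the primary constraint


def recommend(w: Workload) -> str:
    """Hypothetical heuristic mirroring the comparison table above."""
    needs_speed = w.iterative or w.real_time
    if needs_speed and w.budget_constrained:
        # Keep storage cheap in HDFS, spend on memory only for processing.
        return "hybrid"
    if needs_speed:
        return "Spark"
    if w.budget_constrained or not w.fits_in_memory:
        return "Hadoop"
    return "either"


nightly_etl = Workload(iterative=False, real_time=False,
                       fits_in_memory=False, budget_constrained=True)
ml_training = Workload(iterative=True, real_time=False,
                       fits_in_memory=True, budget_constrained=False)
fraud_on_budget = Workload(iterative=True, real_time=True,
                           fits_in_memory=True, budget_constrained=True)

choices = [recommend(w) for w in (nightly_etl, ml_training, fraud_on_budget)]
```

Here the nightly ETL job maps to Hadoop, the ML training job to Spark, and the budget-constrained real-time job to the hybrid setup. Encoding the rules this way mainly helps make the assumptions explicit so a team can argue about them.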

Scale GPU-Intensive Workloads with Thunder Compute

As hybrid data architectures become the norm, the limiting factor often shifts from storage capacity to computational throughput. Both Hadoop and Spark benefit substantially from GPU acceleration, particularly for machine learning, data transformation, and iterative analytical workloads that strain CPU-based clusters.

Thunder Compute provides a cost-effective way to bring this acceleration into existing big data environments. With A100 instances priced at $0.78 per hour (roughly 80% lower than AWS), organizations can deploy GPU-backed clusters without inflating infrastructure budgets, making large-scale analytics and AI training economically viable across both frameworks.

Whether you're processing Hadoop datasets or running Spark jobs, having access to affordable GPU resources can meaningfully accelerate your workflows, lower your total cost of ownership, and improve scalability across a diverse set of data processing needs.

FAQ

What's the main performance difference between Hadoop and Spark?

Spark can deliver 10x to 100x faster performance than Hadoop for iterative workloads and machine learning tasks through in-memory processing, while Hadoop excels at single-pass batch jobs with its reliable disk-based approach.

When should I choose Hadoop over Spark for my project?

Choose Hadoop when you're running large batch processing jobs, need cost-effective data warehousing, or are processing massive datasets that exceed available memory where speed is less critical than reliability and storage costs.

How do the total costs compare between Hadoop and Spark implementations?

While Hadoop hardware costs 30% to 40% less upfront, Spark can typically reduce total ownership costs by 40% to 60% through shorter processing times and lower running overhead, despite requiring more expensive memory-optimized servers.

What GPU resources help accelerate Spark workloads?

Spark workloads benefit from GPUs for machine learning and data preprocessing tasks, with solutions like Thunder Compute offering A100 instances at $0.78 per hour (80% lower than traditional cloud providers).

Final Thoughts on Choosing Between Hadoop and Spark for Big Data Processing

The choice between Hadoop and Spark comes down to matching your specific workload requirements with each technology's strengths. Spark wins for machine learning and real-time analytics, while Hadoop excels at cost-effective batch processing and data warehousing. Many teams find success combining both approaches for the best balance of cost and performance. When your workloads demand serious computational power, GPU-accelerated solutions can supercharge either framework (or both) without breaking your budget. You'll have the flexibility to scale processing power exactly when you need it.