ROCm vs CUDA: Which GPU Computing System Wins in December 2025?

Should you build your next AI project on CUDA or switch to ROCm? CUDA, with its mature ecosystem, dominates AI computing. But ROCm, with its open-source flexibility and much cheaper hardware, is also very tempting. Fortunately, the performance gap has narrowed dramatically, and services like Thunder Compute let you test both CUDA and ROCm without breaking your budget. Let’s compare the two platforms to see which best fits your next AI project.

TLDR:

- CUDA now typically outperforms ROCm by 10% to 30%, down from 40% to 50% gaps in previous years.

- ROCm-compatible AMD hardware costs 15% to 40% less (depending on the tier), but ROCm requires more technical setup expertise.

- PyTorch now officially supports ROCm, though CUDA maintains broader framework compatibility.

- No matter which platform you choose, Thunder Compute provides affordable GPU testing at $0.66 per hour for A100s.

What Is AMD ROCm?

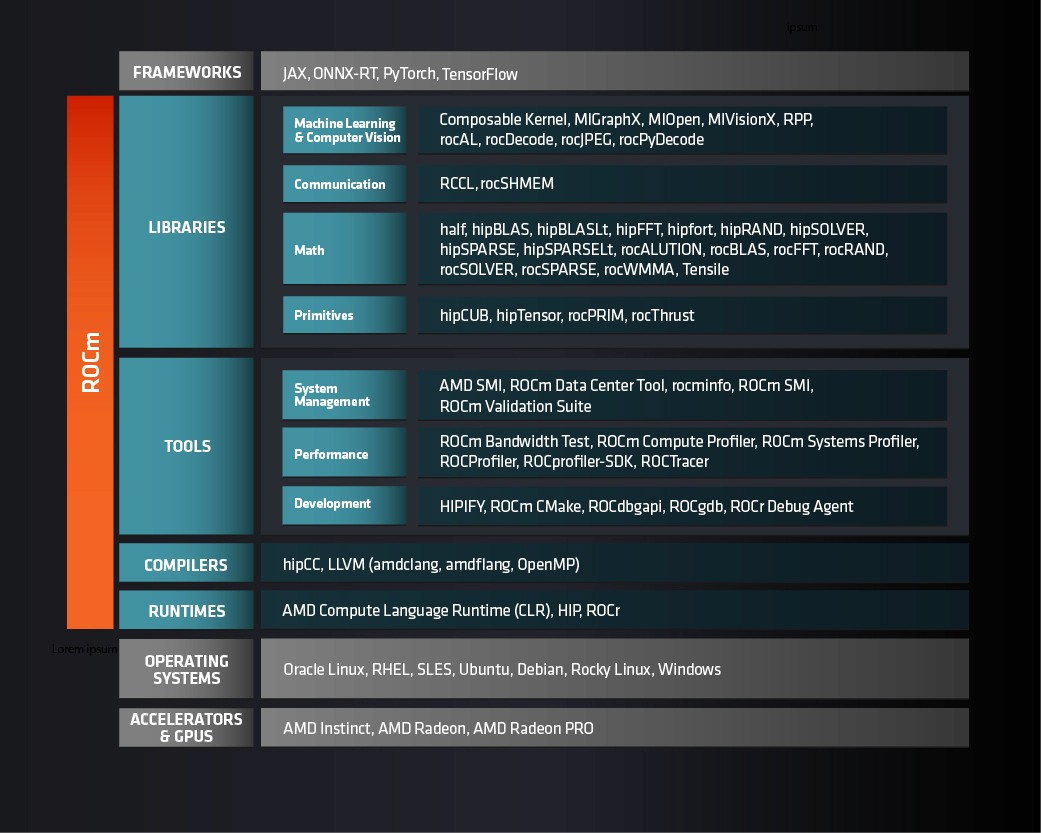

AMD ROCm (Radeon Open Compute) is an open-source alternative to NVIDIA's proprietary CUDA ecosystem. Launched in 2016, ROCm represents an ambitious attempt to break NVIDIA's dominance in GPU computing by offering developers a transparent, community-driven solution.

ROCm’s open-source stack on GitHub lets developers inspect, modify, and contribute to every layer of the system.

ROCm's architecture focuses on HIP (Heterogeneous-compute Interface for Portability), which allows code portability between AMD and NVIDIA GPUs with minimal changes.

ROCm's open-source nature gives developers the freedom to optimize and customize their GPU computing stack.

The official ROCm documentation describes a complete ecosystem, including compilers, libraries, and debugging tools. All things considered, however, ROCm is still playing catch-up to CUDA.

What Is NVIDIA CUDA?

NVIDIA CUDA (Compute Unified Device Architecture) launched in 2007 and became the first widely adopted GPU computing platform, turning graphics cards into general-purpose computing powerhouses.

CUDA's proprietary architecture creates a tightly integrated ecosystem between NVIDIA hardware and software. The CUDA Toolkit provides compilers, libraries, and debugging tools optimized for NVIDIA GPUs, delivering performance that's hard to match with generic solutions.

The framework's maturity shows in its extensive library ecosystem: cuDNN for deep learning, cuBLAS for linear algebra, and hundreds of other specialized libraries have been refined over nearly two decades. This depth of optimization explains why CUDA remains a default choice for enterprise AI workloads.

CUDA's closed-source nature allows NVIDIA to optimize performance aggressively without revealing proprietary techniques, and its detailed documentation offers thorough guides.

However, CUDA's proprietary approach does create vendor lock-in.

Performance Comparison: ROCm vs CUDA

Performance benchmarks in 2025 reveal that CUDA maintains its lead, but ROCm has dramatically narrowed the gap. Some recent testing shows that CUDA typically outperforms ROCm by 10% to 30% in compute-intensive workloads.

AMD's MI325X represents a turning point for ROCm performance. The latest hardware delivers competitive results in specific workloads, particularly memory-intensive operations.

| GPU Computing Task | CUDA Performance | ROCm Performance | Performance Gap |
|---|---|---|---|
| Large-scale training | 1.2B ops/sec | 973M ops/sec | CUDA 23% faster |
| LLM inference | Baseline | 10-30% slower | Variable |
| General compute | Baseline | 15-25% slower | Improving |

Performance varies greatly by workload type. Modern AI workloads show CUDA's optimization advantage, while memory-bound tasks increasingly favor ROCm's architecture.
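Note that "CUDA is X% faster" and "ROCm is Y% slower" are not the same number, because each percentage uses a different baseline. The minimal Python sketch below converts between the two, assuming the ROCm figure in the large-scale training row is about 973M ops/sec (the value the 23% gap implies):

```python
from typing import Tuple


def relative_gap(cuda_throughput: float, rocm_throughput: float) -> Tuple[float, float]:
    """Return (how much faster CUDA is, how much slower ROCm is) as fractions."""
    cuda_faster = cuda_throughput / rocm_throughput - 1.0  # baseline: ROCm
    rocm_slower = 1.0 - rocm_throughput / cuda_throughput  # baseline: CUDA
    return cuda_faster, rocm_slower


# Large-scale training row: 1.2B vs. an assumed 973M ops/sec.
faster, slower = relative_gap(1.2e9, 973e6)
print(f"CUDA {faster:.0%} faster, ROCm {slower:.0%} slower")  # CUDA 23% faster, ROCm 19% slower
```

So a 23% CUDA lead means ROCm finishes the same job about 19% slower, which is worth keeping in mind when reading the "10-30% slower" rows.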

Framework-specific optimizations matter enormously. PyTorch ROCm performance has improved substantially, though CUDA still holds advantages in specialized libraries. Image classification benchmarks show this variability across different model types.

The cost-performance equation increasingly favors ROCm for projects where the performance gap doesn't warrant CUDA's premium pricing.
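One way to make that equation concrete is cost per unit of work: the hourly price divided by relative throughput. This sketch uses hypothetical numbers (not measured prices) for an AMD option that is 30% cheaper per hour but 20% slower:

```python
def cost_per_unit_work(hourly_price: float, relative_throughput: float) -> float:
    """Dollars per unit of work; throughput is relative to a baseline of 1.0."""
    return hourly_price / relative_throughput


# Hypothetical inputs: CUDA as the baseline, AMD 30% cheaper but 20% slower.
cuda_cost = cost_per_unit_work(hourly_price=1.00, relative_throughput=1.00)
rocm_cost = cost_per_unit_work(hourly_price=0.70, relative_throughput=0.80)
print(f"CUDA: ${cuda_cost:.3f}, ROCm: ${rocm_cost:.3f} per unit of work")
```

The AMD option comes out ahead whenever its price ratio (0.70 here) is lower than its throughput ratio (0.80 here). Plug in your own measured throughput and quoted rates.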

Hardware Support and Compatibility

ROCm Supported GPUs

ROCm 7.0 expanded hardware support greatly, but compatibility is not as broad as it is for CUDA. The official compatibility matrix shows full support for AMD Instinct MI series datacenter cards, including the new MI355X and MI350X accelerators.

Consumer GPU support has improved with preview availability for Radeon RX 7000 and 9000 series cards, with the new Radeon RX 9070 series offering ROCm compatibility out of the box.

ROCm's hardware support is growing rapidly, but still covers only a fraction of the GPU market compared to CUDA's universal NVIDIA compatibility.

Windows support arrived recently, though Linux remains the primary development environment. System-specific limitations mean certain features work better on Ubuntu than other distributions, creating deployment considerations that CUDA users rarely face.

CUDA GPU Coverage

CUDA's hardware support spans NVIDIA's entire GPU lineup, from budget GTX 1650 cards to flagship H100 datacenter accelerators. This lineup-wide compatibility gives CUDA deployment flexibility that ROCm cannot match.

Virtually every NVIDIA GPU from the past decade supports CUDA, creating a massive installed base. Developers can prototype on consumer RTX cards and deploy on enterprise A100s, usually without code changes, which makes development workflows smoother.

CUDA's broad hardware support extends to embedded systems, mobile GPUs, and specialized accelerators.

PyTorch and Framework Support

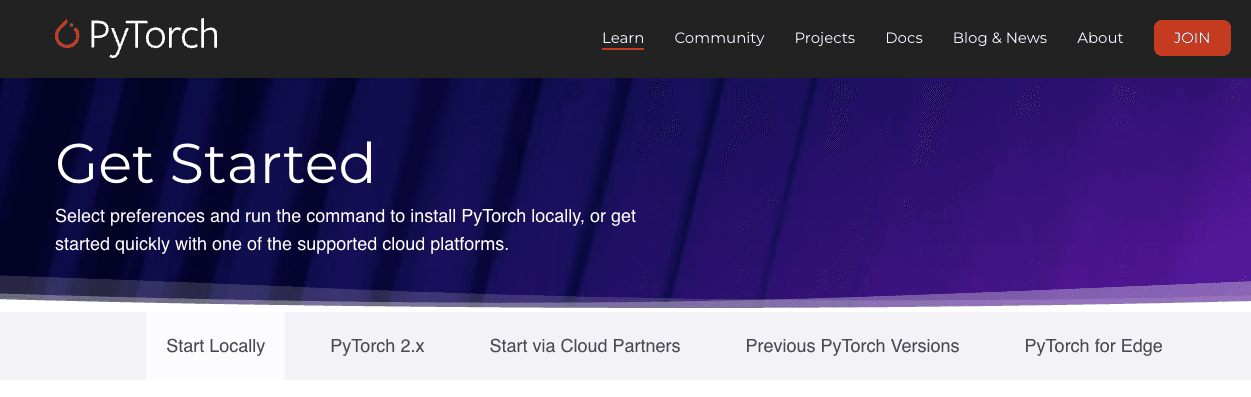

PyTorch now officially supports ROCm on Linux, with Windows builds available in preview. The PyTorch installation page includes ROCm as a first-class option alongside CUDA, marking a major milestone for AMD's ecosystem.
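Because ROCm builds of PyTorch reuse the `torch.cuda` API (an AMD GPU still shows up as a `"cuda"` device), the quickest way to tell which backend you installed is to check `torch.version.hip` against `torch.version.cuda`. A small sketch, assuming only that PyTorch may or may not be installed:

```python
def detect_torch_backend() -> str:
    """Report which GPU backend the installed PyTorch build targets."""
    try:
        import torch
    except ImportError:
        return "pytorch-not-installed"
    # ROCm builds set torch.version.hip; CUDA builds set torch.version.cuda.
    if getattr(torch.version, "hip", None):
        backend = "rocm"
    elif torch.version.cuda:
        backend = "cuda"
    else:
        return "cpu-only build"
    # ROCm builds reuse the torch.cuda namespace, so this check covers both.
    return backend if torch.cuda.is_available() else f"{backend} build, no GPU visible"


print(detect_torch_backend())
```

Since device code like `tensor.to("cuda")` works on both builds, most PyTorch scripts run unchanged across the two platforms.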

ROCm now supports PyTorch, TensorFlow, JAX, and MosaicML frameworks. However, installation complexity remains higher than CUDA equivalents. PyTorch ROCm requires specific driver versions and careful environment configuration that CUDA installations handle automatically.

Performance gaps persist in framework-specific optimizations. PyTorch on ROCm delivers performance just shy of CUDA in most training scenarios, while specialized operations like attention mechanisms still favor CUDA's mature cuDNN integration.

PyTorch's official ROCm support represents AMD's biggest framework victory, bringing professional-grade deep learning to AMD hardware.

CUDA maintains overwhelming framework support across hundreds of libraries. Every major AI framework focuses on CUDA optimization, while ROCm support often arrives months or years later. TensorFlow GPU installation shows this disparity with extensive CUDA documentation versus basic ROCm guidance.

The ecosystem gap affects productivity in major ways. While CUDA developers access cutting-edge frameworks immediately, ROCm developers frequently have to wait for compatibility updates or resort to manual compilation from source code.

Installation and Setup Complexity

CUDA installation has evolved from being notoriously complex to relatively straightforward. The official CUDA installation guide now offers multiple installation paths, including package managers that handle driver conflicts automatically.

Modern CUDA setup benefits from NVIDIA's open-source kernel modules, which reduce compatibility issues with different Linux distributions. Docker containers further simplify deployment by packaging CUDA runtime dependencies into portable images.

However, CUDA still requires careful driver management. Mixing proprietary and open-source drivers can break installations, while version mismatches between CUDA toolkit and drivers create frustrating debugging sessions.
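A common source of those mismatches is installing a toolkit newer than the driver can handle. This sketch encodes a conservative rule of thumb, assuming you read the driver's maximum CUDA version from the "CUDA Version" field that `nvidia-smi` prints and the toolkit version from `nvcc --version`; the function names are hypothetical:

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '12.4' or '12.4.1' into a comparable tuple such as (12, 4, 1)."""
    return tuple(int(part) for part in version.strip().split("."))


def toolkit_likely_supported(toolkit: str, driver_max_cuda: str) -> bool:
    """Conservative check: toolkit (major, minor) must not exceed the driver's."""
    return parse_version(toolkit)[:2] <= parse_version(driver_max_cuda)[:2]


print(toolkit_likely_supported("12.2", "12.4"))  # True
print(toolkit_likely_supported("12.6", "12.4"))  # False
```

CUDA's minor-version compatibility can let newer toolkits within the same major release run on older drivers, so a `False` here means "check the release notes", not a guaranteed failure.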

ROCm installation demands more technical expertise. The ROCm installation documentation describes kernel parameter modifications, specific driver configurations, and manual dependency resolution that may intimidate newcomers.

ROCm installation complexity stems from AMD's need to support diverse hardware configurations without NVIDIA's tight hardware-software integration advantages.

Transitioning from existing CUDA environments to ROCm presents challenges. Removing CUDA completely before ROCm installation prevents driver conflicts, but this process can break existing workflows temporarily.
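Before switching, it helps to know what is already installed. This minimal sketch only looks for common CUDA and ROCm command-line tools on your `PATH` using Python's `shutil.which`. The tool list is an assumption, and ROCm tools installed under `/opt/rocm/bin` will not be found unless that directory is on `PATH`:

```python
import shutil
from typing import Dict, List, Optional

# Assumed tool names that usually indicate an installed CUDA or ROCm stack.
TOOLS: Dict[str, List[str]] = {
    "cuda": ["nvcc", "nvidia-smi"],
    "rocm": ["hipcc", "rocminfo", "rocm-smi"],
}


def inventory_gpu_toolchains() -> Dict[str, Dict[str, Optional[str]]]:
    """Map each stack to its tools and their resolved paths (None if not on PATH)."""
    return {
        stack: {tool: shutil.which(tool) for tool in tools}
        for stack, tools in TOOLS.items()
    }


for stack, found in inventory_gpu_toolchains().items():
    present = [tool for tool, path in found.items() if path]
    print(f"{stack}: {', '.join(present) if present else 'nothing found on PATH'}")
```

It never uninstalls anything. Treat it as a pre-flight checklist before following the official removal and installation steps.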

While ROCm's package management has improved with automated scripts, it still requires more Linux expertise than CUDA's increasingly user-friendly installers. This setup complexity often determines GPU choice for teams without dedicated DevOps resources.

Cost Analysis: ROCm vs CUDA Hardware

AMD's ROCm-compatible hardware consistently undercuts NVIDIA's CUDA pricing across all market segments. The cost advantage ranges from 15% to 40% depending on the performance tier, making ROCm an attractive option for budget-conscious AI projects.

Enterprise deployments see substantial savings with AMD's datacenter accelerators. The Instinct MI250 series offers competitive performance at 20% to 40% lower cost than equivalent A100 configurations, though exact pricing varies by volume and vendor relationships.

AMD's aggressive pricing strategy aims to win market share by making GPU computing accessible to organizations priced out of NVIDIA's premium ecosystem.

That said, high-end deployments still favor NVIDIA despite its premium pricing. The H100's superior performance, mature software stack, and optimized datacenter integrations often justify the cost for production workloads where time-to-results outweighs hardware expenses. In fact, when factoring in ROCm's installation complexity, the faster development cycles from CUDA's mature tooling can often offset much of AMD's hardware cost advantage.
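To judge whether tooling friction really offsets the hardware savings, you can estimate how many extra engineering hours it takes to use them up. This sketch uses hypothetical rates and assumes both platforms need the same number of GPU-hours:

```python
def break_even_engineering_hours(
    gpu_hours: float, nvidia_rate: float, amd_rate: float, engineer_rate: float
) -> float:
    """Extra engineering hours after which AMD's hardware savings are used up."""
    hardware_savings = gpu_hours * (nvidia_rate - amd_rate)  # dollars saved on hardware
    return hardware_savings / engineer_rate


# Hypothetical inputs: 2,000 GPU-hours at $2.00/hr vs. $1.40/hr, engineers at $100/hr.
hours = break_even_engineering_hours(2000, 2.00, 1.40, 100)
print(f"Savings are used up after about {hours:.0f} extra engineering hours")
```

If the AMD run is also slower, it needs more GPU-hours, and the break-even point drops further.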

Developer Experience and Tooling

NVIDIA's developer tools, such as Nsight Systems and Nsight Compute, provide deep insight into GPU performance bottlenecks. These tools integrate smoothly with popular IDEs and offer intuitive interfaces that speed up development cycles.

The CUDA ecosystem benefits from extensive Stack Overflow discussions, GitHub repositories, and community tutorials. When developers encounter issues, solutions typically exist within the vast knowledge base accumulated over nearly two decades of widespread adoption.

ROCm's developer experience requires more hands-on engineering effort. While the HIP programming guide provides detailed documentation, developers often need to dig into source code for advanced optimization techniques.

Migration: Moving from CUDA to ROCm

Migrating from CUDA to ROCm has become much more manageable thanks to AMD's HIP framework. The HIP porting guide shows how most CUDA code requires minimal changes, with HIP deliberately mimicking CUDA's API structure.

The HIPIFY tool also automates much of the conversion process. This utility translates CUDA function calls to HIP equivalents, handling routine conversions like cudaMalloc to hipMalloc automatically. Most kernel code remains unchanged since HIP preserves CUDA's programming model.
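The real HIPIFY ships as `hipify-clang`, which parses code with Clang, and `hipify-perl`, which works by pattern matching. The toy Python version below only illustrates the renaming idea, using a small hand-picked subset of renames that is nowhere near HIPIFY's full coverage:

```python
import re
from typing import Tuple

# A tiny, illustrative subset of CUDA-to-HIP renames.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Longest names first and whole identifiers only, so cudaMemcpy
# never clobbers the prefix of cudaMemcpyHostToDevice.
_NAMES = sorted(CUDA_TO_HIP, key=len, reverse=True)
_PATTERN = re.compile(r"\b(" + "|".join(map(re.escape, _NAMES)) + r")\b")


def toy_hipify(source: str) -> Tuple[str, int]:
    """Return the translated source and the number of replacements made."""
    return _PATTERN.subn(lambda m: CUDA_TO_HIP[m.group(1)], source)


cuda_src = """#include <cuda_runtime.h>
cudaMalloc(&d_x, bytes);
cudaMemcpy(d_x, h_x, bytes, cudaMemcpyHostToDevice);
scale<<<blocks, threads>>>(d_x, n);
cudaDeviceSynchronize();
cudaFree(d_x);"""

hip_src, count = toy_hipify(cuda_src)
print(hip_src)
print(f"{count} replacements")  # 6 replacements
```

The triple-chevron kernel launch passes through untouched, since `hipcc` accepts that syntax, which mirrors how most kernel code survives the port unchanged.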

HIP's design philosophy focuses on making CUDA migration as painless as possible, with many applications requiring changes to less than 5% of their codebase.
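You can check the under-5% figure against your own port. This sketch, assuming you keep the original CUDA file and its HIP version side by side, uses Python's `difflib` to measure the share of original lines that did not survive unchanged:

```python
import difflib


def changed_line_fraction(original: str, ported: str) -> float:
    """Fraction of original lines that were modified or removed in the port."""
    old_lines = original.splitlines()
    matcher = difflib.SequenceMatcher(a=old_lines, b=ported.splitlines(), autojunk=False)
    # get_matching_blocks() ends with a size-0 sentinel, so summing is safe.
    unchanged = sum(block.size for block in matcher.get_matching_blocks())
    return 1 - unchanged / len(old_lines) if old_lines else 0.0


cuda_lines = [f"statement_{i};" for i in range(40)]
hip_lines = list(cuda_lines)
hip_lines[0] = "#include <hip/hip_runtime.h>"  # one line changed out of 40
fraction = changed_line_fraction("\n".join(cuda_lines), "\n".join(hip_lines))
print(f"{fraction:.1%} of lines changed")  # 2.5% of lines changed
```

Run it per file or on concatenated sources; generated code and vendored headers can skew the ratio either way.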

Start migration with a thorough assessment of your existing CUDA dependencies. Libraries like cuDNN have ROCm equivalents (MIOpen), but performance characteristics may differ. Testing environments should mirror production hardware to identify performance regressions early.
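A first-pass dependency audit can be as simple as scanning `#include` lines for CUDA library headers. The header-to-library table in this sketch is a small, hand-picked assumption (cuDNN to MIOpen, cuBLAS to hipBLAS/rocBLAS, NCCL to RCCL, and so on), and `None` marks TensorRT, which has no drop-in ROCm counterpart:

```python
import re
from typing import Dict, Optional

# Assumed mapping from CUDA library headers to common ROCm counterparts.
ROCM_EQUIVALENTS: Dict[str, Optional[str]] = {
    "cudnn.h": "MIOpen",
    "cublas_v2.h": "hipBLAS / rocBLAS",
    "cufft.h": "hipFFT / rocFFT",
    "curand.h": "hipRAND / rocRAND",
    "cusparse.h": "hipSPARSE / rocSPARSE",
    "nccl.h": "RCCL",
    "NvInfer.h": None,  # TensorRT: no drop-in ROCm equivalent
}

# Captures the header name from lines like #include <cudnn.h> or #include "cudnn.h".
INCLUDE_RE = re.compile(r'^\s*#\s*include\s*[<"]([^>"]+)[>"]', re.MULTILINE)


def assess_cuda_dependencies(source: str) -> Dict[str, Optional[str]]:
    """Map each known CUDA library header the source includes to its ROCm counterpart."""
    return {
        header: ROCM_EQUIVALENTS[header]
        for header in INCLUDE_RE.findall(source)
        if header in ROCM_EQUIVALENTS
    }


src = '#include <cudnn.h>\n#include "NvInfer.h"\n#include <vector>\n'
print(assess_cuda_dependencies(src))  # {'cudnn.h': 'MIOpen', 'NvInfer.h': None}
```

Headers that come back as `None` are where most of the migration effort tends to go. Real projects also pull in dependencies through build files and Python packages, which this scan ignores.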

The biggest migration challenges involve specialized CUDA libraries without direct ROCm equivalents. Custom kernel optimizations may need rework for AMD's architecture, particularly memory access patterns optimized for NVIDIA's cache hierarchy.

Many teams adopt hybrid approaches, maintaining CUDA codebases while developing ROCm branches for cost-sensitive deployments.

Which GPU Computing Solution Should You Choose?

Choose CUDA when performance and ecosystem maturity matter most. Enterprise production workloads, time-sensitive research projects, and teams needing maximum framework compatibility should continue to rely on NVIDIA's proven solution.

ROCm is ideal for teams who care about cost efficiency or open-source principles. Its transparency and expanding ecosystem generally appeal to organizations seeking vendor diversity or more control over their compute environments, and AMD’s progress in narrowing the performance gap makes ROCm an increasingly credible choice.

Ultimately, your choice between ROCm and CUDA comes down to your priorities: performance leadership and stability with rich community support (CUDA), or cost optimization and open-source principles with greater flexibility, at the cost of extra setup and increased technical fluency (ROCm).

Rather than choosing in the abstract, the best approach is to run both frameworks against your own workloads. Synthetic benchmarks rarely tell the full story. With Thunder Compute, you can spin up both NVIDIA and AMD instances in seconds. No SSH setup, CUDA installs, or hardware lock-in.

With A100-80GB GPUs starting at $0.78/hr and H100 or MI300X options available on demand, you can directly compare ROCm and CUDA performance at up to 80% lower cost than AWS or Google Cloud. Our integrated VS Code access, persistent storage, and snapshots also make it easy to test, iterate, and preserve results without infrastructure overhead.

FAQ

Is ROCm performance comparable to CUDA for modern AI workloads?

In most machine learning tasks, CUDA still leads by 10-30%, but the gap has narrowed dramatically. ROCm now performs competitively on memory-bound and open-source workloads, particularly on AMD’s latest MI300X GPUs.

What are the key trade-offs between CUDA and ROCm?

CUDA offers greater ecosystem maturity, broader framework support, and faster setup. ROCm offers open-source flexibility, lower hardware costs, and fewer vendor lock-in risks, but typically requires more engineering expertise.

What issues should I expect when migrating from CUDA to ROCm?

Most CUDA code ports cleanly using HIPIFY, but some CUDA libraries (like cuDNN or TensorRT) lack one-to-one ROCm equivalents. Expect extra work tuning kernels, managing dependencies, and verifying performance regressions across drivers and ROCm versions.

Final Thoughts on Choosing Between ROCm and CUDA for GPU Computing

CUDA still leads in performance and ecosystem maturity, while ROCm offers compelling cost savings and open-source flexibility that's hard to ignore. Rather than committing to one solution immediately, consider testing both approaches to understand how they perform with your specific workloads. Thunder Compute makes this comparison process affordable and practical, letting you make data-driven decisions based on real results rather than benchmarks alone.