NVIDIA CUDA Cores: How They Work and Why They Matter (December 2025)

You've probably noticed that your AI models train way faster on a GPU than on your CPU, and if you're curious about the magic behind those lightning-fast CUDA Cores, you're in the right place. These tiny processors make NVIDIA graphics cards powerful parallel computing engines.

TLDR:

- With thousands of cores working simultaneously, CUDA Cores enable massive parallel processing, which can make AI training 10 to 20 times faster than on CPUs, depending on the workload.

- More CUDA Cores don't guarantee better performance: memory bandwidth, architecture, and Tensor Cores often matter more for AI workloads.

- CUDA Cores handle general-purpose GPU computations like activation functions and gradient calculations, while Tensor Cores accelerate matrix operations in modern AI training.

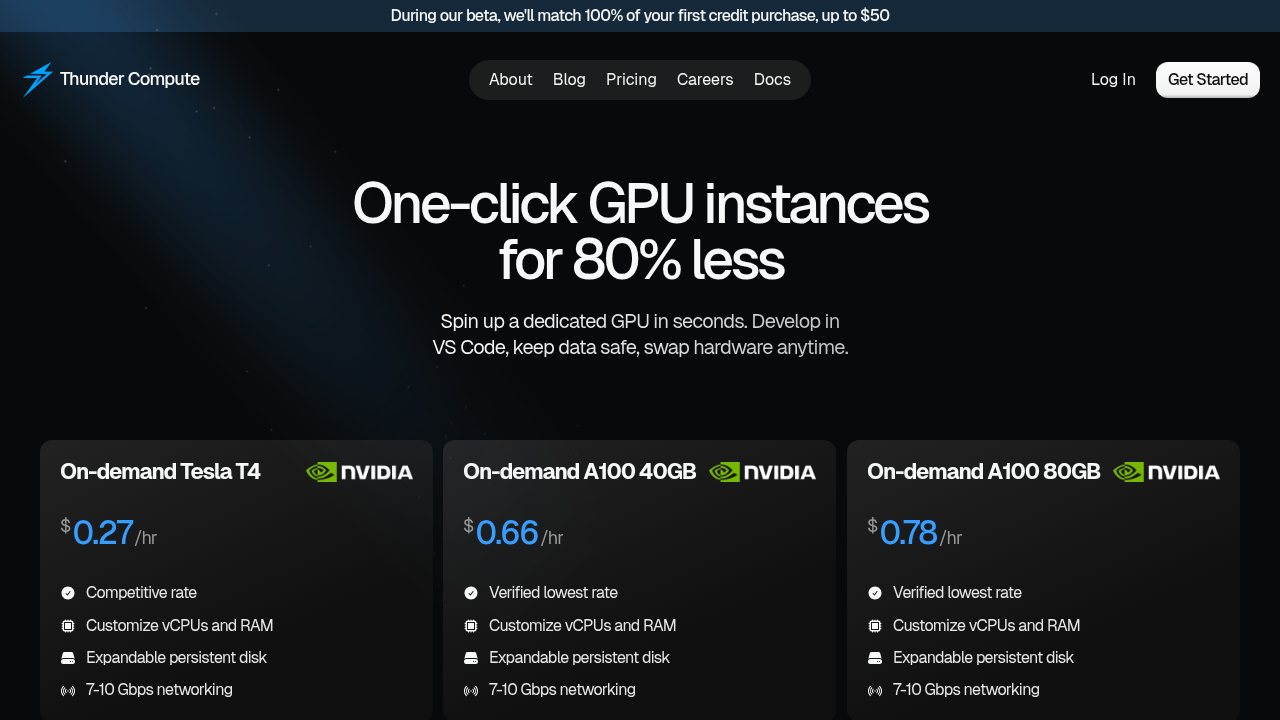

- Thunder Compute offers CUDA-powered GPU instances starting at $0.27 per hour, roughly 80% less than AWS, with instant VS Code integration.

What Are CUDA Cores?

CUDA Cores are the fundamental processing units inside NVIDIA GPUs; they handle parallel computations.

"CUDA" stands for "Compute Unified Device Architecture." It's NVIDIA's proprietary parallel computing framework, which lets software tap into GPU power for tasks beyond graphics display. Each CUDA Core executes basic arithmetic on integers and floating-point numbers, such as addition, multiplication, and fused multiply-add.

While your CPU might have 8 to 16 cores optimized for complex sequential tasks, a single GPU can contain thousands of CUDA Cores designed for simple, repetitive operations. An RTX 4090 has 16,384 CUDA Cores. That's a lot of parallel processing power.

CUDA Cores changed GPUs from graphics-only devices into general-purpose computing engines. Developers quickly realized their potential for scientific computing, cryptocurrency mining, and eventually machine learning. The parallel nature of CUDA Cores makes them incredibly efficient at tasks that can be broken down into many smaller, independent calculations.

The magic happens when you have workloads that benefit from parallelization. Instead of processing data sequentially like a CPU, CUDA Cores can tackle thousands of operations simultaneously. This is why choosing the right GPU for AI workloads makes such a dramatic difference in training times.
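To make "thousands of operations simultaneously" concrete, here is a minimal sketch, in plain Python rather than CUDA C++, of how a CUDA kernel launch divides an array among threads. Each thread computes its global index as `blockIdx.x * blockDim.x + threadIdx.x` (real CUDA built-ins) and handles one element of SAXPY (`y = a*x + y`). The helper names `saxpy_thread` and `launch_saxpy` and the block size of 256 are illustrative assumptions, and the loops here run sequentially, whereas on a GPU the threads run in parallel across CUDA Cores.

```python
import math

def saxpy_thread(a, x, y, out, block_idx, block_dim, thread_idx):
    """Body of one emulated CUDA thread: computes a single output element."""
    # Same formula as blockIdx.x * blockDim.x + threadIdx.x in a CUDA kernel.
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]

def launch_saxpy(a, x, y, block_dim=256):
    """Emulate a 1-D grid launch with enough blocks to cover every element."""
    n = len(x)
    grid_dim = math.ceil(n / block_dim)  # number of thread blocks
    out = [0.0] * n
    # A GPU runs these iterations concurrently; we loop sequentially for clarity.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            saxpy_thread(a, x, y, out, block_idx, block_dim, thread_idx)
    return out, grid_dim

x = [float(i) for i in range(1000)]
y = [1.0] * 1000
out, blocks = launch_saxpy(2.0, x, y)
print(blocks, out[:3], out[-1])
```

With 1,000 elements and 256 threads per block, the launch needs four blocks, and the bounds check keeps the 24 spare threads in the last block from writing past the end of the array.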

Each CUDA Core typically runs at a lower clock speed than a CPU core, but the sheer number of them working together creates massive computational throughput. When you're training a neural network with millions of parameters, thousands of cores crunching numbers at once beat a handful of fast cores that each work through the numbers sequentially.

CUDA Cores vs Tensor Cores

CUDA Cores and Tensor Cores serve different purposes in NVIDIA's GPU architecture, and understanding their differences helps you optimize your workloads.

CUDA Cores are the generalists. They handle standard floating-point operations, integer math, and general-purpose parallel computing tasks. Every NVIDIA GPU since 2006 has included CUDA Cores.

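Tensor Cores are the specialists. Introduced with the Volta architecture in 2017, these cores are built for deep learning operations. They excel at matrix multiplications in reduced- and mixed-precision formats such as FP16, BF16, and INT8. Volta's first-generation Tensor Cores worked with FP16; later generations added formats including INT8, TF32, and BF16.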

| Feature | CUDA Cores | Tensor Cores |
|---|---|---|
| Purpose | General parallel computing | Deep learning matrix operations |
| Precision | FP32, FP64, INT32 | FP16, BF16, INT8, TF32 |
| Performance | Good for diverse workloads | Exceptional for AI training/inference |
| Availability | All NVIDIA GPUs since 2006 | RTX 20-series and newer, data center GPUs |
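The Precision row hides an important detail: Tensor Cores typically read FP16 or BF16 inputs but can accumulate results in FP32. The sketch below is a hypothetical emulation using the half (`e`) and single (`f`) precision formats of Python's `struct` module. It shows why the accumulator's precision matters: summing 4,096 products of 1.0 stalls at 2,048 in FP16, because above 2,048 consecutive FP16 values are 2 apart, while an FP32 accumulator reaches the exact total. The function names are illustrative, not an NVIDIA API.

```python
import struct

def to_fp16(v: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", v))[0]

def to_fp32(v: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", v))[0]

def dot(a, b, round_acc):
    """Dot product with FP16 inputs; `round_acc` sets the accumulator precision."""
    total = 0.0
    for x, y in zip(a, b):
        product = to_fp16(x) * to_fp16(y)   # inputs stored in FP16
        total = round_acc(total + product)  # round the running sum after each step
    return total

ones = [1.0] * 4096
fp16_sum = dot(ones, ones, to_fp16)  # everything in FP16
fp32_sum = dot(ones, ones, to_fp32)  # mixed precision: FP16 inputs, FP32 accumulate
print(fp16_sum, fp32_sum)
```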

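The performance difference is substantial for AI workloads: Tensor Cores can train neural networks up to 20 times faster than CUDA Cores alone. They get there by treating a small matrix multiply-accumulate, D = A x B + C, as a single hardware operation. A first-generation (Volta) Tensor Core multiplies two 4x4 matrices and adds the result to a third, 64 fused multiply-adds in total, every clock cycle; later generations process even more per cycle.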
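Written out in plain Python, the operation a first-generation Tensor Core performs per clock looks like the sketch below: a 4x4x4 matrix multiply-accumulate, D = A x B + C, which amounts to 64 multiply-adds. The function name `mma_4x4` is hypothetical, and unlike a hardware fused multiply-add, Python rounds the multiply and the add separately; the point is only to show how much work one Tensor Core operation replaces.

```python
def mma_4x4(a, b, c):
    """Compute D = A x B + C for 4x4 matrices and count the multiply-adds."""
    n = 4
    d = [row[:] for row in c]  # start from a copy of the accumulator C
    fma_count = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                # One multiply-add; a Tensor Core fuses all 64 into a single operation.
                d[i][j] += a[i][k] * b[k][j]
                fma_count += 1
    return d, fma_count

identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
b = [[float(4 * i + j + 1) for j in range(4)] for i in range(4)]  # values 1..16
c = [[1.0] * 4 for _ in range(4)]
d, count = mma_4x4(identity, b, c)
print(count, d[0])
```

With A set to the identity matrix, D is simply B + C, which makes the result easy to check by eye.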

That said, CUDA Cores and Tensor Cores aren't competitors; they're teammates working together in your GPU. During AI training, Tensor Cores handle the heavy matrix multiplications in forward and backward passes, while CUDA Cores manage data preprocessing, activation functions, and other operations that don't fit Tensor Cores' specialized design.
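A rough operation count shows why this split makes sense. The sketch below tallies the work in one hypothetical dense layer followed by ReLU: the matrix multiply (Tensor Core territory) costs `2 * batch * d_in * d_out` FLOPs, while the bias add and ReLU (typical CUDA Core work) cost about `2 * batch * d_out` operations. The layer sizes are arbitrary assumptions, and in practice libraries often fuse the bias and activation into the matmul kernel.

```python
def layer_op_counts(batch: int, d_in: int, d_out: int) -> tuple[int, int]:
    """Approximate forward-pass operation counts for a dense layer + bias + ReLU."""
    matmul_flops = 2 * batch * d_in * d_out  # one multiply and one add per weight, per sample
    elementwise_ops = 2 * batch * d_out      # one bias add and one max(0, x) per output value
    return matmul_flops, elementwise_ops

mm, elementwise = layer_op_counts(batch=64, d_in=4096, d_out=4096)
print(f"matmul: {mm:,} FLOPs, elementwise: {elementwise:,} ops, ratio: {mm // elementwise}")
```

The ratio works out to exactly `d_in`, so in realistically sized layers the matrix multiply outweighs the elementwise work by thousands of times.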

For gaming, CUDA Cores still do most of the work; Tensor Cores mainly contribute to DLSS (Deep Learning Super Sampling) and ray tracing denoising. AI workloads lean far more heavily on Tensor Cores, which is why GPU cloud services usually list both core types when describing their AI capabilities.

For modern AI development, you want both types working together for optimal performance.

Do More CUDA Cores Equal Better Performance?

Not necessarily, and this misconception leads to poor hardware decisions.

CUDA Core count matters only if your workload can actually use all those cores effectively. Many applications hit bottlenecks elsewhere in the system before maxing out core utilization. Memory bandwidth, cache size, and architectural improvements often have bigger impacts on real-world performance.
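Amdahl's law is one way to see why core count alone doesn't settle the question: if only a fraction `p` of a job can be parallelized, the ideal speedup on `N` workers is `1 / ((1 - p) + p / N)`. The sketch below treats each of an RTX 4090's 16,384 CUDA Cores as an independent worker, a deliberate simplification that ignores memory bandwidth, scheduling, and the other bottlenecks discussed here.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Ideal speedup when only `parallel_fraction` of the work spreads across `workers`."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)

# Assumption: every CUDA Core acts as one fully independent worker.
for p in (0.90, 0.99, 0.999):
    print(f"{p:.1%} parallel -> {amdahl_speedup(p, 16_384):.1f}x speedup")
```

Even with 16,384 workers, a job that is 1% serial tops out just under 100x, so the serial remainder, not the core count, sets the ceiling.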

Compare the RTX 4080 and the RTX 3090. The 3090 has 10,496 CUDA Cores while the 4080 has 9,728. Despite having fewer cores, the 4080 often outperforms the 3090, thanks to its newer Ada Lovelace architecture, much higher boost clock (about 2.5 GHz versus 1.7 GHz), and far larger L2 cache (64 MB versus 6 MB).
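Theoretical peak FP32 throughput is usually quoted as CUDA Cores x 2 FLOPs per clock (one fused multiply-add) x boost clock. The sketch below applies that formula, assuming boost clocks of 1.695 GHz for the RTX 3090 and 2.505 GHz for the RTX 4080 from NVIDIA's published specifications. Actual clocks vary with power and cooling, and peak FLOPS is an upper bound, not a benchmark.

```python
def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical peak: every core retires one FMA (2 FLOPs) per clock cycle."""
    flops_per_clock = cuda_cores * 2
    return flops_per_clock * boost_clock_ghz * 1e9 / 1e12

# Assumed boost clocks from published spec sheets.
rtx_3090 = peak_fp32_tflops(10_496, 1.695)
rtx_4080 = peak_fp32_tflops(9_728, 2.505)
print(f"RTX 3090: {rtx_3090:.1f} TFLOPS, RTX 4080: {rtx_4080:.1f} TFLOPS")
```

On paper, the 4080's roughly 48% higher clock more than makes up for its 7% fewer cores.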

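For AI workloads, Tensor Core count and memory matter more than raw CUDA Core numbers. An A100 with 6,912 CUDA Cores outperforms an RTX 3090 with 10,496 CUDA Cores in most deep learning tasks, because its 432 third-generation Tensor Cores deliver far more matrix throughput than the 3090's 328, and its 40 GB of HBM2 memory (80 GB of HBM2e on the larger model) provides more capacity and bandwidth than the 3090's 24 GB of GDDR6X.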
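Memory capacity is easy to estimate roughly. A common rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter: FP16 weights and gradients (2 bytes each), plus an FP32 master copy of the weights and two FP32 Adam moment buffers (4 bytes each). The sketch below applies that rule while excluding activations, framework overhead, and memory-saving techniques, so treat its answers as optimistic lower bounds. Capacities are rounded to 24 GB and 40 GB.

```python
# Rule of thumb (assumption): mixed-precision Adam training state per parameter.
# FP16 weights + FP16 grads + FP32 master weights + FP32 Adam m and v.
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4

def training_state_gb(num_params: float) -> float:
    """Weights, gradients, and optimizer state only; activations are not included."""
    return num_params * BYTES_PER_PARAM / 1e9

for gpu, capacity_gb in (("RTX 3090", 24), ("A100 40GB", 40)):
    for params in (1e9, 2e9):
        need = training_state_gb(params)
        verdict = "fits" if need <= capacity_gb else "does not fit"
        print(f"{gpu}: {params / 1e9:.0f}B params need ~{need:.0f} GB -> {verdict}")
```

A 2-billion-parameter model already overflows 24 GB before a single activation is stored, while the 40 GB card still has headroom.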

Here are the factors that actually determine GPU performance:

- Memory bandwidth: How fast data moves between GPU memory and cores.

- Cache hierarchy: How well the GPU accesses frequently used data.

- Clock speeds: How fast individual cores operate.

- Architectural design: How well the GPU schedules and executes work.

- Memory capacity: Whether your model, optimizer state, and working batch fit in GPU memory.

GPU performance is multidimensional. A GPU with fewer, more efficient cores and better memory systems will outperform one with more cores but architectural limitations.
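The roofline model ties several of these factors together: a kernel's attainable throughput is the lower of the GPU's peak compute and its memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs per byte moved). The sketch below assumes RTX 4090 figures of about 82.6 TFLOPS peak FP32 and 1,008 GB/s of memory bandwidth; the matmul intensity of 200 FLOPs per byte is a hypothetical round number.

```python
def attainable_tflops(peak_tflops: float, bandwidth_gb_s: float, flops_per_byte: float) -> float:
    """Simple roofline model: performance is capped by compute or by memory traffic."""
    memory_bound_tflops = bandwidth_gb_s * flops_per_byte / 1000  # GB/s x FLOP/byte = GFLOP/s
    return min(peak_tflops, memory_bound_tflops)

# SAXPY (y = a*x + y) in FP32: 2 FLOPs per element, 12 bytes moved (read x, read y, write y).
saxpy_intensity = 2 / 12
# Large matrix multiply: heavy data reuse, so high intensity (hypothetical value).
matmul_intensity = 200.0

for name, intensity in (("SAXPY", saxpy_intensity), ("large matmul", matmul_intensity)):
    print(name, round(attainable_tflops(82.6, 1008, intensity), 2), "TFLOPS")
```

Under these assumptions SAXPY reaches only about 0.17 TFLOPS, roughly 0.2% of peak, regardless of how many CUDA Cores the card has, because memory bandwidth is the limit.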

This is why it's worth testing your workload on several cloud GPU configurations rather than just picking the highest core count. Real-world performance testing beats spec sheet comparisons every time.

CUDA Cores for AI and Machine Learning

The parallel nature of CUDA Cores perfectly matches the mathematical structure of machine learning. Training a neural network involves millions of similar calculations across different data samples and model parameters. Instead of processing these sequentially, CUDA Cores can handle thousands simultaneously.

During neural network training, CUDA Cores accelerate several critical operations, including:

- Data preprocessing: Converting raw data into training-ready formats.

- Forward passes: Computing predictions through network layers.

- Gradient computation: Calculating how to update model weights.

- Inference serving: Processing user requests in production systems.

For inference, CUDA Cores make real-time AI applications possible: whether you're running a chatbot, an image recognition system, or a recommendation engine, they accelerate the model computations behind each request.

The combination of CUDA Cores and Tensor Cores creates a powerful AI acceleration system. Tensor Cores handle the core matrix multiplications in transformer attention mechanisms and convolutional layers, while CUDA Cores manage everything else in the pipeline.

This is why cloud GPU access has become important for AI development. Training state-of-the-art models requires thousands of CUDA Cores working for days or weeks. Our blog covers different strategies for optimizing AI workloads across different GPU configurations.
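A back-of-the-envelope estimate shows where "days or weeks" comes from. A widely used approximation puts the training cost of a dense transformer at about 6 FLOPs per parameter per training token (forward plus backward pass). The sketch below applies it to a hypothetical 1-billion-parameter model trained on 20 billion tokens, assuming the A100's 312 TFLOPS dense BF16 Tensor Core peak and a hypothetical 40% sustained utilization.

```python
def training_days(params: float, tokens: float, peak_flops: float,
                  utilization: float, num_gpus: int = 1) -> float:
    """Estimate wall-clock days using the ~6 * params * tokens FLOP approximation."""
    total_flops = 6 * params * tokens
    sustained_flops = peak_flops * utilization * num_gpus  # ignores communication overhead
    return total_flops / sustained_flops / 86_400  # seconds -> days

# Hypothetical run: 1B params, 20B tokens, A100 BF16 peak, 40% utilization (assumed).
for gpus in (1, 8):
    print(gpus, "GPU(s):", round(training_days(1e9, 20e9, 312e12, 0.40, gpus), 1), "days")
```

Under these assumptions, one A100 needs about 11 days and eight need under a day and a half, before accounting for multi-GPU communication overhead.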

Small teams and individual researchers can now access the same parallel processing power that was once exclusive to tech giants with massive hardware budgets.

Why Thunder Compute Is the Best GPU Cloud for CUDA Workloads

Thunder Compute delivers the most cost-effective access to CUDA-powered GPUs in the cloud. We've built our service for developers who need serious computing power without the traditional cloud complexity or pricing.

Our pricing starts at $0.27 per hour for GPU instances, which is roughly 80% lower than AWS and other major cloud providers. With Thunder Compute, you can spin up an A100 with 6,912 CUDA Cores and 432 Tensor Cores for under $0.78 per hour. That same configuration costs over $3 per hour on AWS.

But low prices don't mean compromising on developer experience. We've integrated directly with VS Code, so you can launch a GPU instance and start coding in seconds. No SSH keys, no CUDA driver installations, no complex setup procedures.

Here's what makes Thunder Compute ideal for CUDA workloads:

- Instant deployment: Launch GPU instances in seconds, not minutes.

- Persistent storage: Your data and environment survive instance restarts.

- Hardware swapping: Change GPU types without losing your work.

- VS Code integration: Develop on cloud GPUs as if they were local machines.

Our instances come with CUDA pre-installed and optimized. Whether you're using PyTorch, TensorFlow, or custom CUDA kernels, everything works out of the box, so you can focus on your AI development instead of infrastructure management.

The ability to swap hardware configurations sets us apart from traditional cloud providers. Start prototyping on a T4 with 2,560 CUDA Cores, then upgrade smoothly to an H100 (14,592 CUDA Cores in its PCIe version) when you're ready for serious training. Your code, data, and environment stay exactly the same.

We've also eliminated the typical cloud GPU pain points. No capacity shortages, no complex billing structures, no vendor lock-in. You pay only for what you use, with transparent per-hour pricing and the ability to stop instances when not needed.

If you're building AI products, Thunder Compute provides the CUDA computing power you need at prices that make experimentation affordable. Whether you're fine-tuning LLMs, training computer vision models, or running inference at scale, our GPU instances deliver the parallel processing performance your workloads demand.

FAQ

How many CUDA cores do I need for AI training?

The number of CUDA Cores you need depends on your specific workload and dataset size. For small experiments, a GPU with 2,000 to 3,000 CUDA Cores (like the T4, with 2,560) works well, while large-scale training calls for a high-end GPU such as an RTX 4090 (16,384 CUDA Cores) or an A100 (6,912 CUDA Cores backed by 432 Tensor Cores). As the A100 shows, memory capacity and Tensor Cores often matter more than raw CUDA Core count for deep learning tasks.

What's the main difference between CUDA Cores and Tensor Cores for AI workloads?

CUDA Cores handle general parallel computing tasks like data preprocessing and activation functions, while Tensor Cores specialize in the matrix multiplications that dominate neural network training. Both work together to accelerate your complete machine learning pipeline.

Why doesn't my GPU with more CUDA Cores always perform better?

CUDA core count is just one factor in GPU performance. Memory bandwidth, architectural design, clock speeds, and cache design often have bigger impacts on real-world performance.

How do I get started with CUDA development without buying expensive hardware?

Cloud GPU services like Thunder Compute let you access CUDA-powered instances starting at $0.27 per hour with pre-installed drivers and development tools. You can experiment with different GPU configurations and scale up as needed without the upfront hardware investment or setup complexity.

Final Thoughts on CUDA Cores and Their Impact on Computing

CUDA Cores and the CUDA programming model have fundamentally changed how we approach parallel computing, turning graphics cards into AI powerhouses that can handle thousands of calculations simultaneously. Whether you're training your first neural network or scaling up production AI workloads, understanding how these cores work together with Tensor Cores gives you a real advantage in optimizing performance. Thunder Compute makes it easy to experiment with different CUDA-powered configurations, without the upfront hardware costs. The future of AI development depends on accessible parallel computing power, and CUDA Cores are leading that charge.