RunPod vs. CoreWeave: Which Is Better and Cheaper?

The GPU cloud computing market has exploded as AI workloads demand ever more scalable infrastructure, and each provider has taken its own path to serving developers and enterprises. RunPod champions container-based, serverless simplicity, while CoreWeave targets large enterprise Kubernetes deployments. These are two fundamentally different approaches to GPU computing.

Whether your team prioritizes pricing, deployment complexity, or developer experience will determine which fits your needs and budget. Here’s a real-world comparison of these platforms (and what we think is the best platform overall) on cost, usability, and performance to help you choose the right GPU cloud.

TLDR:

- RunPod uses Docker-based pods (some container familiarity helps), while CoreWeave is Kubernetes-native and best suited for teams with DevOps experience.

- Thunder Compute offers one-click VS Code integration and hardware swapping without extra fees.

- CoreWeave targets enterprises and typically requires a sales process for its best rates. RunPod's Community Cloud can suffer from inconsistent performance and interruptions.

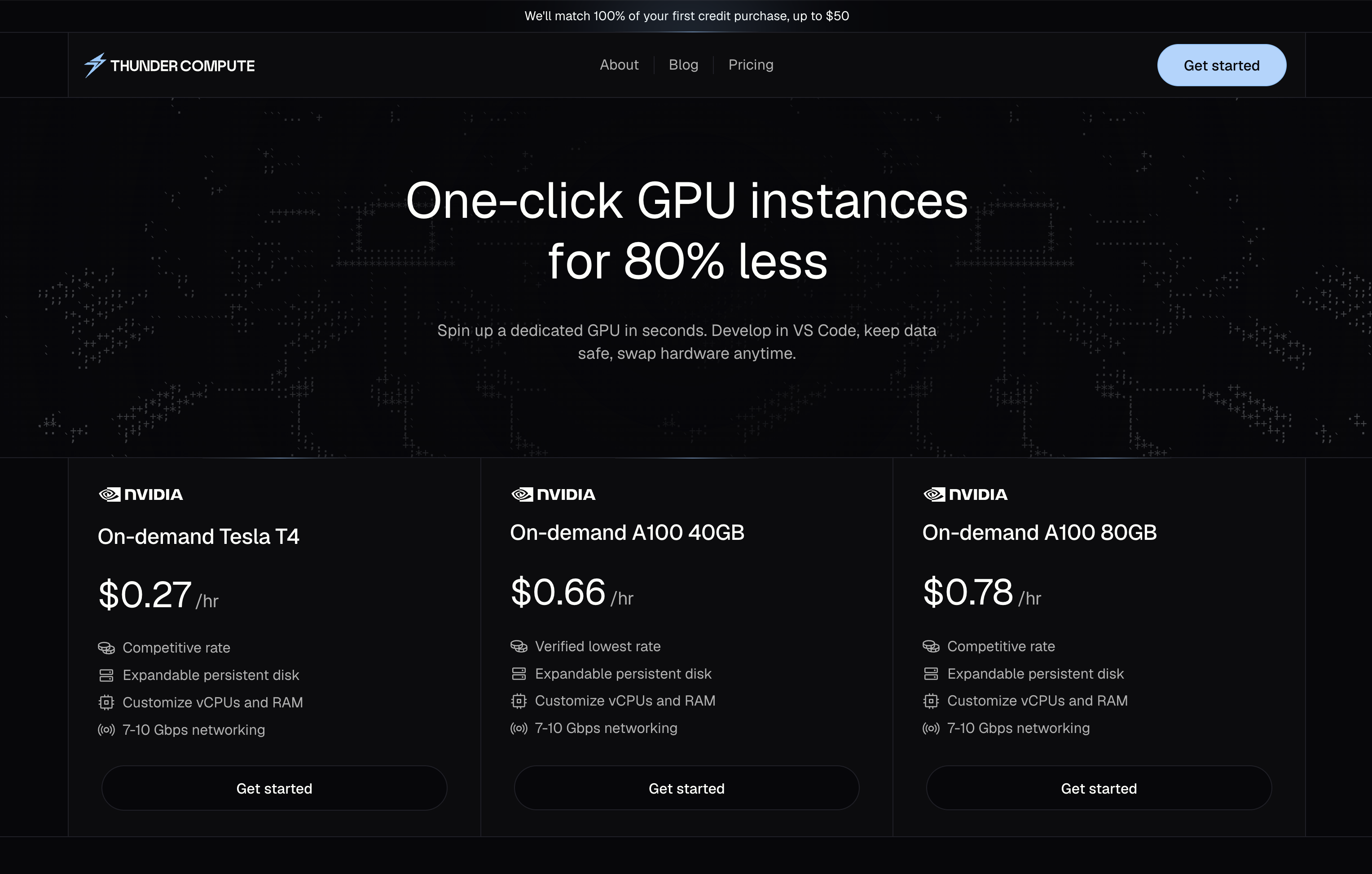

- Thunder Compute costs roughly 35% less than RunPod's lowest listed A100 80GB rate ($0.78/hr vs. $1.19/hr). A100 40GB instances are $0.66/hr and H100 80GB instances are $1.89/hr, all with transparent, all-inclusive pricing.

What CoreWeave Does and Its Approach

CoreWeave positions itself as an enterprise-focused GPU cloud provider targeting large-scale AI deployments and organizations with substantial compute requirements. Its infrastructure is built on a Kubernetes-native foundation, emphasizing bare metal GPU access and high-performance cluster computing.

Using CoreWeave effectively typically requires considerable technical expertise. Most deployments happen through container orchestration (Kubernetes/Slurm) using YAML and infrastructure manifests, assuming users are deeply familiar with cloud-native infrastructure and DevOps practices.
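
To give a sense of what a manifest-driven workflow involves, here is a minimal sketch that builds a generic Kubernetes Pod manifest requesting one GPU and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The pod name, container image, and command are hypothetical placeholders. `nvidia.com/gpu` is the resource name exposed by the standard NVIDIA device plugin. Provider-specific fields (node selectors, storage classes, and so on) are deliberately left out and would need to come from CoreWeave's own documentation.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Kubernetes Pod manifest that requests `gpus` NVIDIA GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,  # hypothetical image name
                    "command": ["python", "train.py"],  # hypothetical entry point
                    # GPUs are requested as an extended resource under `limits`.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("gpu-training-job", "example.com/ml/train:latest")
    print(json.dumps(manifest, indent=2))
```

Even this small example assumes familiarity with API versions, resource limits, and restart policies, which is the kind of background knowledge a Kubernetes-native platform expects.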

CoreWeave’s pricing offers both on-demand and reserved instance options, but the best rates for large-scale deployments generally come via longer-term commitments and direct sales engagements. The pricing comparison data reflects a platform aimed at volume customers rather than casual or individual developers.

The platform features high-performance networking, including InfiniBand, for multi-node, distributed training jobs, making it well-suited for highly parallel, cluster-scale workloads.

CoreWeave’s primary market is well-funded AI labs, large enterprises, and organizations with dedicated DevOps teams. While on-demand usage is possible, smaller teams or those seeking fast prototyping may encounter barriers due to resource minimums and required expertise.

The complexity trade-off delivers major advantages for production-scale deployments, but it creates friction for rapid development and experimentation (not ideal when you just want to test something quickly).

What RunPod Does and Its Approach

RunPod is a flexible cloud GPU platform built for developers, ML practitioners, and AI startups who need affordable, pay-as-you-go access to high-performance GPUs without enterprise bureaucracy. Its approach centers around serverless simplicity, transparent pricing, and global reach.

RunPod lets users spin up GPU pods (dedicated, containerized GPU environments) across more than 30 global regions with per-second billing. With a wide variety of NVIDIA and AMD GPUs (H100, A100, RTX 4090, MI300X, and more), users can select the best hardware for their needs and switch as workloads change.

Every pod runs in an isolated Docker container, allowing for full root access and complete customization. You can use ready-to-go ML templates or your own images for reproducible environments and easy scaling.

RunPod caters to AI enthusiasts, small dev teams, and even large ML labs, balancing the cost advantages of community compute with optional enterprise-grade stability. While its container/Docker foundation offers ultimate control and flexibility, new users should be comfortable with command-line or container-based workflows.

Billing is per second or per hour, with no volume minimums, reservations, or negotiation required. Community Cloud pods are especially budget-friendly, making RunPod ideal for experimentation, rapid prototyping, or short-term jobs.
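
As a rough illustration of why per-second billing matters for short jobs, the sketch below compares a prorated charge with what the same job would cost if every started hour were billed in full. The $1.19/hr figure is the A100 80GB rate quoted in this article. The hour-rounded variant is a hypothetical comparison point, not a description of any provider's actual billing rules.

```python
def prorated_cost(hourly_rate: float, seconds: int) -> float:
    """Cost of a job billed per second at the given hourly rate."""
    return hourly_rate / 3600 * seconds


def hourly_rounded_cost(hourly_rate: float, seconds: int) -> float:
    """Cost of the same job if every started hour were billed in full (hypothetical)."""
    hours_billed = -(-seconds // 3600)  # ceiling division
    return hourly_rate * hours_billed


if __name__ == "__main__":
    rate = 1.19  # $/hr, A100 80GB rate quoted in this article
    job_seconds = 10 * 60  # a 10-minute experiment
    print(f"per-second billing: ${prorated_cost(rate, job_seconds):.2f}")
    print(f"hour-rounded:       ${hourly_rounded_cost(rate, job_seconds):.2f}")
```

The gap shrinks as jobs get longer, so the billing granularity mostly affects quick experiments rather than multi-hour training runs.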

However, the Community Cloud model, while cheap, can suffer from inconsistent performance, hardware interruptions, and reliability issues not typically seen with dedicated or enterprise-oriented clouds. Users running long jobs or requiring a fully integrated IDE workflow (such as the one-click VS Code, hardware swapping, or project snapshots offered by Thunder Compute) may find RunPod less convenient and potentially disruptive for production workloads.

Pricing Comparison

The cost differences between these providers reveal stark contrasts in their target markets and value propositions. Let's break down the actual numbers you'll pay for common GPU instances.

| GPU Type | RunPod | CoreWeave | Thunder Compute |
|---|---|---|---|
| A100 80GB | $1.19-$1.64/hr | Contact Sales | $0.78/hr |
| H100 80GB | $2.19-$2.74/hr | Contact Sales | $1.89/hr |
| A100 40GB | $0.89/hr | Contact Sales | $0.66/hr |

RunPod's per-second billing model sounds flexible, right? But here's the catch: those hourly rates add up quickly for longer training jobs. Their H100 instances typically run between $2.19 and $2.74/hr, so a 24-hour training run costs roughly $53 to $66, while the same workload on Thunder Compute at $1.89/hr costs about $45.
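
The cost of a fixed-length run can be reproduced from the table rates with a few lines of Python. This is only a sketch of the arithmetic (rate times hours). It assumes a flat hourly rate for the whole run and ignores storage, egress, and any other charges.

```python
# Hourly H100 80GB rates in USD, as listed in the pricing table above.
RATES = {
    "RunPod H100 80GB (low)": 2.19,
    "RunPod H100 80GB (high)": 2.74,
    "Thunder Compute H100 80GB": 1.89,
}


def run_cost(hourly_rate: float, hours: float) -> float:
    """Total cost of a run billed at a flat hourly rate."""
    return hourly_rate * hours


if __name__ == "__main__":
    for name, rate in RATES.items():
        print(f"{name}: ${run_cost(rate, 24):.2f} for 24 hours")
```

Changing the `24` to your own expected runtime gives a quick estimate for any job length.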

CoreWeave's enterprise focus is evident in its pricing approach. Most competitive rates require reserved capacity discussions and volume commitments, which create uncertainty for budget planning and make CoreWeave impractical for smaller projects or variable workloads.

Thunder Compute delivers transparent, consistent pricing without hidden fees or complex tier structures. Our A100 GPU pricing analysis shows that we consistently beat competitors by 40-60% on equivalent hardware.

The savings compound over time. A team running continuous development workloads could save thousands monthly by switching to Thunder Compute. These aren't promotional rates or limited-time offers: our software-driven approach allows sustainable low pricing.

And don't forget about hidden expenses; they're everywhere. RunPod charges separately for persistent storage and premium support. CoreWeave's enterprise model often includes consulting fees and minimum commitments. Thunder Compute includes persistent storage, snapshots, and full feature access at no additional cost.

Performance and Reliability Considerations

Infrastructure reliability and performance differ greatly across these providers due to their hardware management philosophies and target markets.

RunPod's performance varies with tier selection. "Community" cloud instances offer competitive pricing but can be subject to inconsistent performance, variable availability, and occasional interruptions, as supply is crowdsourced and not always enterprise-hardened. Their "Secure Cloud" tier is more stable, but at a higher hourly cost. Multi-region support enables broad geographic selection, but performance and reliability can vary from region to region.

RunPod's spot instance model can offer significant cost savings, but with an increased risk of interruptions, posing challenges for long-running jobs unless rigorous checkpointing is implemented. This adds complexity for users running extended or critical ML workloads.
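
To make the checkpointing requirement concrete, here is a minimal, framework-free sketch of a resumable loop. It assumes the job's state fits in a small JSON file. The file layout, the `train` function, and the fake "loss" update are all illustrative stand-ins, and a real job would save model and optimizer state using its framework's own serialization.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    """Write state atomically so an interruption never leaves a half-written file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp_path, path)  # atomic rename within the same directory


def load_checkpoint(path: str) -> dict:
    """Return the last saved state, or a fresh state if no checkpoint exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "loss": None}


def train(path: str, total_steps: int, every: int = 100) -> dict:
    """Toy training loop that resumes from the last checkpoint."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        state["loss"] = 1.0 / (step + 1)  # stand-in for a real training step
        state["step"] = step + 1
        if state["step"] % every == 0 or state["step"] == total_steps:
            save_checkpoint(path, state)
    return state
```

If the instance is reclaimed mid-run, calling `train` again with the same path picks up from the last saved step, so at most `every` steps of work are lost.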

CoreWeave delivers enterprise-grade uptime, robust networking (including InfiniBand for multi-node, distributed training), and reliable cluster management, making it a strong fit for high-availability production workloads. Access to these advanced features typically requires enterprise contracts or commitments, and Kubernetes-native orchestration, while powerful, introduces potential configuration issues that require skilled DevOps support to resolve.

Thunder Compute ensures consistent performance by combining software-driven orchestration with dedicated hardware and 7–10 Gbps networking, offering rapid data transfer and robust checkpointing. The platform supports both prototyping (cost-minimized) and production (SLA-backed) modes: prototyping mode offers affordable resources for development, while production mode provides enterprise-level uptime guarantees without requiring enterprise contracts or negotiations.

Users benefit from instant access, flat pricing, and reliable GPU allocation for both short and long-term workloads, eliminating the need for lengthy procurement or sales cycles. Thunder Compute's platform and market positioning allow it to deliver reliability and affordability through software optimization rather than simply hardware over-provisioning.

The RunPod alternatives market research consistently shows that higher reliability on other platforms often introduces added complexity or higher costs. Thunder Compute addresses this by providing a simpler, software-first solution.

What Thunder Compute Does and Our Approach

Thunder Compute takes a different approach to GPU cloud computing, focusing on simplicity and cost-effectiveness above all else. We provide on-demand GPU instances with one-click deployment, native VS Code integration, and persistent storage at prices up to 80% lower than major cloud providers.

Instead of requiring Docker expertise or complex configurations, Thunder Compute offers instant deployment with full development environment integration. You can launch a GPU instance and start coding in VS Code within seconds.

What sets us apart is our unique feature set that works smoothly without additional fees. Hardware swapping lets you change GPU types without losing your environment. Snapshots preserve your entire workspace state for easy restoration or sharing. Start/stop functions mean you only pay for active compute time while maintaining persistent storage.

Thunder Compute provides full control with complete root access, so you can install any libraries, frameworks, or dependencies just like a local machine. Our high-speed networking delivers 7-10 Gbps throughput for fast data transfer and model checkpointing.

The cheapest cloud GPU providers often sacrifice reliability or features, but Thunder Compute delivers both affordability and enterprise features without compromise.

Thunder Compute as the Superior Choice

After looking at RunPod and CoreWeave across pricing, usability, and performance dimensions, Thunder Compute stands out as the clear winner for most GPU computing needs. We've removed the fundamental trade-offs that force users to choose between cost, simplicity, and reliability.

RunPod users often struggle with the complexity of container management and variable performance across their tiers. Thunder Compute delivers enterprise performance with startup-friendly pricing and consumer-grade simplicity.

Our unique software-driven approach means you're getting cheaper GPU access and a fundamentally better experience. Hardware swapping, instant VS Code integration, and persistent storage aren't add-on features you pay extra for. They're core features that make GPU computing actually enjoyable.

The numbers speak for themselves. Thunder Compute offers A100 80GB instances at $0.78/hr and A100 40GB instances at $0.66/hr (roughly 25-50% less than RunPod's listed rates, depending on configuration) with transparent pricing and no enterprise sales overhead.
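
The percentage difference between any two of the hourly rates listed in this article can be checked with a short calculation. The sketch below uses only the rates from the pricing table and assumes list prices with no discounts or extra fees on either side.

```python
def percent_savings(baseline: float, price: float) -> float:
    """Percentage by which `price` undercuts `baseline`."""
    return (baseline - price) / baseline * 100


if __name__ == "__main__":
    # (Thunder Compute rate, RunPod rate) in $/hr, from the pricing table in this article.
    comparisons = {
        "A100 80GB vs. RunPod low": (0.78, 1.19),
        "A100 80GB vs. RunPod high": (0.78, 1.64),
        "A100 40GB": (0.66, 0.89),
    }
    for label, (thunder, runpod) in comparisons.items():
        print(f"{label}: {percent_savings(runpod, thunder):.1f}% less")
```

Because RunPod's rates vary by tier and region, the result depends on which end of its range you compare against.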

But cost savings mean nothing if the service doesn't work reliably. Thunder Compute's production mode delivers the uptime guarantees that serious projects require, while our prototyping mode offers even lower costs for development work. You get the flexibility to match your reliability needs to your budget without complex tier structures.

For developers, researchers, and startups who need reliable GPU computing without breaking their budget or spending weeks learning Kubernetes, Thunder Compute represents the obvious choice. We've built the GPU cloud service we wished existed when we were struggling with expensive, complex alternatives.

FAQs

Q: What's the main difference between RunPod and CoreWeave's pricing models?

A: RunPod offers transparent per-second (and per-hour) billing, with standard rates around $1.19/hr for A100 80GB Community instances (some regions/tiers can be higher). CoreWeave typically requires direct sales discussions and volume or reserved commitments for its most competitive pricing, and standard on-demand rates are less prominently advertised.

Q: How do I choose between prototyping and production modes on Thunder Compute?

A: Use prototyping mode for development, experimentation, and jobs where the lowest possible cost is the priority and some reliability trade-off is acceptable. Production mode should be chosen for long-running jobs or mission-critical deployments where enterprise-level uptime SLAs and reliability guarantees are required.

Q: Can I switch GPU types without losing my development environment?

A: Yes, Thunder Compute’s hardware swapping feature enables changing GPU types (e.g., A100 to H100 or vice versa) without spinning up a new instance or losing your data/workspace. This is not currently available on RunPod or CoreWeave, both of which require new instance creation (and potentially data migration) when changing hardware.

Q: Why does CoreWeave require Kubernetes expertise while other providers don't?

A: CoreWeave is designed for enterprise teams familiar with Kubernetes and cloud-native workflows, reflecting its focus on advanced orchestration and high-performance cluster management. RunPod uses Docker containers, a simpler setup, but it still requires some container knowledge. Thunder Compute removes most technical overhead with true one-click VS Code integration and no container/orchestration setup needed.

Final Thoughts on Choosing the Right GPU Cloud Provider

The GPU cloud computing world doesn't have to be complicated when you know what to look for. While RunPod struggles with reliability issues and CoreWeave locks you into enterprise complexity, the math is pretty straightforward when you compare actual costs and usability. Your choice comes down to whether you want to spend time managing infrastructure or actually building AI models. Thunder Compute lets you focus on the latter.