Thunder Compute vs Lambda (December 2025)

When choosing between GPU cloud providers for AI training, most teams focus on obvious factors like pricing and hardware specs, but miss important decision points that determine long-term success. The real evaluation framework should consider developer workflow integration, infrastructure flexibility, and hidden costs that compound over time. Finding the best GPU platform requires understanding these deeper trade-offs that aren't immediately apparent in basic feature comparisons. Here's my take on how Thunder Compute and Lambda stack up across the decision factors that actually matter for your AI development workflow.

TLDR:

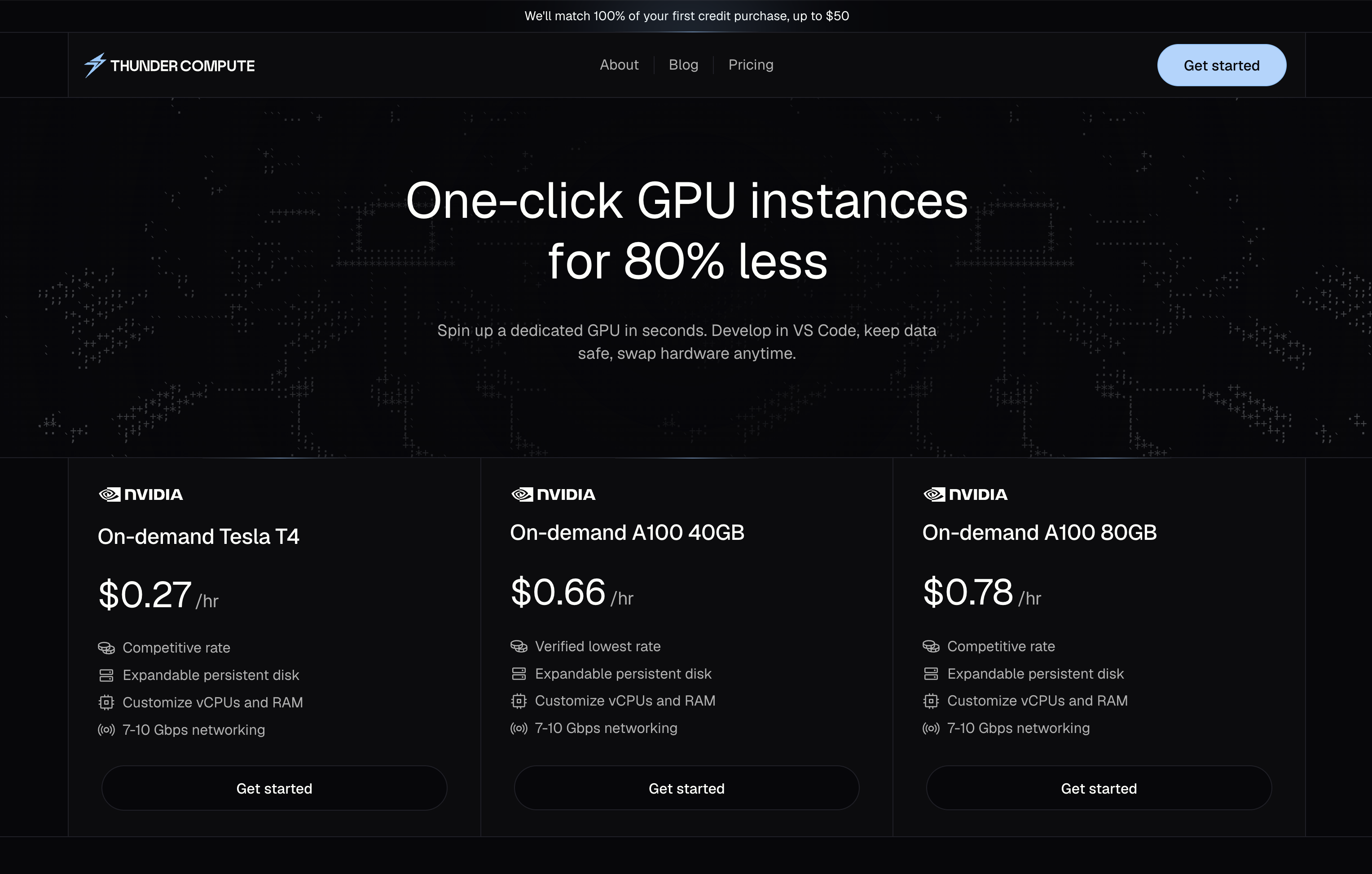

- Thunder Compute costs roughly 49-56% less than Lambda Labs on the A100 and H100 instances compared below (A100 80GB: $0.78/hr vs $1.79/hr)

- You get persistent storage, snapshots, and VS Code integration by default with Thunder Compute

- Lambda focuses on large enterprise clusters while Thunder optimizes for individual developers

- Thunder lets you swap GPU types without rebuilding your environment or losing data

- For most single-node workloads, Thunder's pricing cuts GPU spend roughly in half compared with Lambda's premium rates

What Lambda Does and Their Approach

Lambda Labs positions itself as the only cloud provider focused solely on AI, targeting researchers and enterprise customers who need GPU instances to train and fine-tune models. Its lineup spans on-demand instances and clusters built on NVIDIA HGX B200 (starting at $2.99/hr), HGX H200, H100 (starting at $1.85/hr), A100, and GH200, plus serverless inference.

Lambda offers 1x, 2x, 4x, and 8x NVIDIA GPU instances that you can launch, terminate, and restart through a developer-friendly API. Instances come preconfigured with Lambda Stack (Ubuntu, TensorFlow, PyTorch, NVIDIA CUDA, and NVIDIA cuDNN), so they're ready to use from the start. Billing is per minute, with no egress fees.

Lambda's target market skews toward larger organizations and research institutions that need multi-GPU setups for substantial training workloads. Their messaging highlights enterprise-grade infrastructure and AI-specific tooling.

Thunder Compute offers a similar approach to on-demand GPU computing but with a focus on cost-effectiveness and developer experience optimization. More on that below.

What Thunder Compute Does and Our Approach

Thunder Compute provides on-demand GPU instances for AI/ML workloads with up to 80% lower cost than AWS or GCP while delivering a smooth user experience for machine learning developers. We offer dedicated GPU servers with 1-4 GPUs per instance, one-click launch accessible directly through VS Code, persistent storage, and developer-friendly features.

Our approach relies on software-driven GPU orchestration, which keeps prices low while maintaining reliability and ease of use. Thunder Compute concentrates on on-demand instances for startups, research, and prototyping, running dedicated A100 hosts in U.S. data centers and letting you spin up an on-demand instance in 30 seconds.

We target ML engineers, researchers, and AI-driven startups who need affordable, scalable GPU computing for development and prototyping. This includes solo ML researchers, AI consulting firms, academic labs, and small-to-mid size tech companies that require on-demand GPU power for tasks like model training, fine-tuning, and inference.

Thunder Compute's software-first approach lets us deliver a better developer experience at a lower cost than hardware-focused competitors.

The key difference lies in our focus on individual developers and smaller teams rather than large enterprise clusters. We've built our service around the needs of teams that want to rent GPUs without the complexity and cost overhead of traditional cloud providers.

Pricing Comparison

The pricing differences between Thunder Compute and Lambda Labs are substantial across the GPU types both providers offer. Here's how the on-demand hourly prices per GPU break down:

| GPU Type | Thunder Compute | Lambda Labs | Savings |
|---|---|---|---|
| A100 40GB | $0.66/hr | $1.29/hr | 49% |
| A100 80GB | $0.78/hr | $1.79/hr | 56% |
| H100 80GB | $1.47/hr | $3.29/hr (single) / $2.99/hr (8-GPU) | 51-55% |
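The Savings column can be checked directly from the listed prices, since savings = (Lambda price - Thunder price) / Lambda price. Here is a minimal Python sketch that does that arithmetic; the prices are copied from the table and will drift as either provider updates its rates, so treat the output as a snapshot rather than a quote.

```python
# Hourly on-demand prices per GPU in USD, copied from the pricing table.
# Each value is (Thunder Compute price, Lambda Labs price).
PRICES = {
    "A100 40GB": (0.66, 1.29),
    "A100 80GB": (0.78, 1.79),
    "H100 80GB (single)": (1.47, 3.29),
    "H100 80GB (8-GPU)": (1.47, 2.99),
}


def savings_pct(thunder: float, lambda_price: float) -> float:
    """Percent saved by paying `thunder` instead of `lambda_price`."""
    return (lambda_price - thunder) / lambda_price * 100


for gpu, (thunder, lam) in PRICES.items():
    print(f"{gpu}: {savings_pct(thunder, lam):.0f}% cheaper on Thunder Compute")
```

Run as-is, it prints 49% for the A100 40GB, 56% for the A100 80GB, and 55% or 51% for the H100 depending on which Lambda H100 rate you compare against.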

Both services bill per minute, so you're not paying for long idle blocks if you're spinning instances up and down frequently. This flexibility matters when you're doing iterative development or running experiments with varying durations.
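To see why per-minute billing matters for short, iterative runs, here is a small Python sketch comparing a per-minute charge against a hypothetical provider that bills in whole-hour blocks. The rounding rule (partial minutes rounded up) and the 37-minute runtime are assumptions for illustration, not either provider's documented billing policy.

```python
import math


def per_minute_cost(hourly_rate: float, runtime_minutes: float) -> float:
    """Cost when billed per minute.

    Assumption (hypothetical): partial minutes round up to the next whole minute.
    """
    billed_minutes = math.ceil(runtime_minutes)
    return hourly_rate * billed_minutes / 60


def per_hour_cost(hourly_rate: float, runtime_minutes: float) -> float:
    """Cost for a hypothetical provider that bills in whole-hour blocks."""
    billed_hours = math.ceil(runtime_minutes / 60)
    return hourly_rate * billed_hours


rate = 0.78   # Thunder Compute A100 80GB, USD/hr (from the pricing table)
runtime = 37  # minutes for one short, hypothetical experiment

print(f"per-minute billing: ${per_minute_cost(rate, runtime):.2f}")
print(f"whole-hour billing: ${per_hour_cost(rate, runtime):.2f}")
```

For the 37-minute run this gives about $0.48 billed per minute versus $0.78 billed by the hour, and the gap repeats for every short experiment you launch.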

Storage costs also favor Thunder Compute. We charge $0.15/GB/month for persistent storage, while Lambda charges $0.20/GB/month for filesystem storage. When you're working with large datasets or model checkpoints, these storage savings add up quickly.
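As a rough illustration of how the storage rates scale, here is a Python sketch pricing a hypothetical 500 GB of datasets and checkpoints at each provider's per-GB monthly rate. It assumes a flat rate with no tiers, minimums, or free allowance, which may not match either provider's actual billing.

```python
THUNDER_STORAGE = 0.15  # USD per GB-month (rate quoted in the text)
LAMBDA_STORAGE = 0.20   # USD per GB-month (rate quoted in the text)


def monthly_storage_cost(gb: float, rate_per_gb_month: float) -> float:
    """Flat-rate monthly storage cost (assumes no tiers or minimums)."""
    return gb * rate_per_gb_month


stored_gb = 500  # hypothetical: datasets plus model checkpoints
thunder = monthly_storage_cost(stored_gb, THUNDER_STORAGE)
lam = monthly_storage_cost(stored_gb, LAMBDA_STORAGE)

print(f"Thunder: ${thunder:.2f}/mo, Lambda: ${lam:.2f}/mo, "
      f"difference: ${lam - thunder:.2f}/mo")
```

At 500 GB that works out to $75 versus $100 per month, a $25 monthly difference that grows linearly with the amount of data you keep.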

The gap widens in dollar terms for teams running multiple experiments or keeping development environments online: the percentage saved stays the same, but the absolute difference grows with every GPU-hour. Thunder Compute's pricing structure is designed around the reality that most AI development involves frequent iteration and experimentation.
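A quick way to see how the gap grows with usage is to price out a month of on-demand time. The Python sketch below assumes a hypothetical dev box on one A100 80GB, online 8 hours a day for 22 working days, at the pricing table's hourly rates.

```python
def monthly_gpu_cost(hourly_rate: float, hours_per_day: float,
                     days: int, num_gpus: int = 1) -> float:
    """On-demand GPU spend for a month at a fixed per-GPU hourly rate."""
    return hourly_rate * hours_per_day * days * num_gpus


# Hypothetical usage pattern: one A100 80GB online 8 h/day for 22 workdays.
hours, days = 8, 22
thunder = monthly_gpu_cost(0.78, hours, days)  # Thunder Compute rate from the table
lam = monthly_gpu_cost(1.79, hours, days)      # Lambda Labs rate from the table
print(f"Thunder: ${thunder:.2f}, Lambda: ${lam:.2f}, gap: ${lam - thunder:.2f}")

# Doubling usage (e.g. two GPUs for parallel experiments) doubles the dollar
# gap, while the percentage saved stays the same.
gap_two = (monthly_gpu_cost(1.79, hours, days, num_gpus=2)
           - monthly_gpu_cost(0.78, hours, days, num_gpus=2))
print(f"gap with 2 GPUs: ${gap_two:.2f}")
```

That pattern comes to about $137 on Thunder Compute versus about $315 on Lambda Labs, and the dollar gap doubles when the GPU count does.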

For context, our A100 pricing represents some of the lowest rates available in the GPU cloud market, making high-end hardware accessible to smaller teams and individual researchers.

Hardware and Infrastructure

Lambda offers NVIDIA H100, A100, and A10 Tensor Core GPUs, plus instance types built on the NVIDIA GH200 Superchip, NVIDIA RTX A6000, RTX 6000, and NVIDIA V100 Tensor Core GPUs. Its strength lies in 8× GPU SXM nodes (A100/H100) with NVLink, which excel at large-scale training where high interconnect bandwidth matters.

Lambda's NVIDIA Quantum-2 InfiniBand networking provides 3200 Gbps of bandwidth for each HGX B200, H200 or H100 node. This infrastructure is purpose-built for NVIDIA GPUDirect RDMA with maximum inter-node bandwidth and minimum latency across entire clusters.

Thunder Compute provides on-demand access to NVIDIA T4, A100 40GB, A100 80GB, and H100 GPUs with up to 4 GPUs per instance. Our instances include 7-10 Gbps networking throughput and hardware swapping features that let you change GPU types on demand without rebuilding your environment.

The fundamental difference is architectural philosophy. Lambda optimizes for large multi-node clusters and maximum interconnect performance. Thunder Compute focuses on single-node performance optimization and flexibility.

For teams that need to scale beyond single nodes or require ultra-high bandwidth between GPUs, Lambda's InfiniBand networking provides clear advantages. However, most AI development and training workloads don't require this level of interconnect performance.
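To put the bandwidth numbers in perspective, here is a back-of-the-envelope Python calculation of how long it would take to move one full copy of fp16 gradients for a hypothetical 7-billion-parameter model over a 10 Gbps link (the top of Thunder Compute's quoted range) versus a 3200 Gbps InfiniBand node. It ignores protocol overhead and the extra traffic of real all-reduce algorithms, so actual times would be higher.

```python
def transfer_seconds(num_bytes: float, link_gbps: float) -> float:
    """Idealized time to push num_bytes over a link (no protocol overhead)."""
    return num_bytes * 8 / (link_gbps * 1e9)


params = 7e9          # hypothetical 7B-parameter model
bytes_per_param = 2   # fp16/bf16 gradients
grad_bytes = params * bytes_per_param  # 14 GB per full gradient copy

for name, gbps in [("10 Gbps network", 10), ("3200 Gbps InfiniBand node", 3200)]:
    seconds = transfer_seconds(grad_bytes, gbps)
    print(f"{name}: {seconds:.3f} s per full gradient copy")
```

Roughly 11 seconds versus 35 milliseconds per copy is why multi-node data-parallel training leans on InfiniBand, while single-node work, where GPUs communicate over the local interconnect rather than the network, does not.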

Thunder Compute's approach of optimizing single-node performance while allowing easy hardware changes serves the majority of use cases more cost-effectively. Our guide on choosing the right GPU covers when you might need different hardware configurations.

Developer Experience and Features

Lambda lets you launch, terminate, and restart instances through an API and connect to NVIDIA GPU instances directly from your browser. Each machine also gets a dedicated Jupyter Notebook environment, accessible in seconds from the cloud dashboard.
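For readers who want to script Lambda's launch, terminate, and restart operations, here is a Python sketch that builds the HTTP requests without sending them. The base URL, endpoint paths, JSON field names, and Bearer-token header reflect our understanding of Lambda's public Cloud API v1 and are assumptions to verify against its current API reference; the region, instance type, and SSH key names are hypothetical placeholders.

```python
import json
import urllib.request

# Assumption: base URL and endpoint paths as we understand Lambda's public
# Cloud API v1; verify against the current API reference before relying on them.
BASE_URL = "https://cloud.lambdalabs.com/api/v1"


def lambda_request(api_key: str, path: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON POST request."""
    return urllib.request.Request(
        url=f"{BASE_URL}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


def launch(api_key: str, region: str, instance_type: str, ssh_key: str):
    # Assumed field names: region_name, instance_type_name, ssh_key_names.
    return lambda_request(api_key, "/instance-operations/launch", {
        "region_name": region,
        "instance_type_name": instance_type,
        "ssh_key_names": [ssh_key],
    })


def terminate(api_key: str, instance_ids: list):
    return lambda_request(api_key, "/instance-operations/terminate",
                          {"instance_ids": instance_ids})


def restart(api_key: str, instance_ids: list):
    return lambda_request(api_key, "/instance-operations/restart",
                          {"instance_ids": instance_ids})


# All argument values below are hypothetical placeholders.
req = launch("YOUR_API_KEY", "us-east-1", "gpu_1x_a100_sxm4", "my-laptop")
print(req.full_url, req.get_method())
```

Building the request separately from sending it keeps the example testable offline; pass the result to `urllib.request.urlopen` to actually call the API.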

For immediate access, you can use the Web Terminal within the dashboard or connect via SSH using the SSH keys you've added to your account. The service offers a solid assortment of GPUs with high performance-to-price ratios, but its tooling is relatively basic: Jupyter notebook access and SSH connectivity.

Thunder Compute provides native VS Code integration, one-click instance setup, and features like persistent storage, snapshots, and hardware hot-swapping. If your dev loop lives in VS Code and you want zero setup friction, Thunder's UX advantage is clear.

Thunder Compute includes persistent storage attached to each instance by default with snapshots and spec changes (RAM, vCPU, storage) that can be made without tearing everything down. This persistent approach means your development environment, installed packages, and data survive instance restarts.

The snapshot feature lets you save your entire instance state at any point and restore or clone it later. This feature is invaluable for experimenting with different configurations or sharing environments across team members.

Thunder Compute lets you hot-swap hardware or upgrade instance resources without a rebuild. This flexibility is ideal for teams that start small and scale gradually, or switch GPU types often based on workload requirements.

The VS Code integration deserves special mention. You can open your Thunder Compute instance as a remote workspace directly in VS Code, editing code, running notebooks, and managing files as if they were local. This smooth integration removes the context switching that comes with browser-based development environments.

Thunder Compute as the Better Choice

For most ML teams optimizing cost and iteration speed, Thunder Compute's pricing and feature set provide a strong edge. The combination of persistent storage, snapshots, and spec modifications creates a development environment that adapts to your workflow rather than limiting it.

You should choose Thunder Compute if you want lower on-demand prices for A100/H100, value per-minute billing with default persistent storage and snapshots, and your team wants fast VS Code integration with minimal setup overhead.

The cost savings alone make Thunder Compute compelling. When you're running multiple experiments or keeping development environments online, paying roughly half as much per GPU-hour can be the difference between a feasible budget and a prohibitive one.

The developer experience advantages compound over time. Features like hardware hot-swapping and persistent storage reduce the friction of iterative development, letting you focus on model improvement rather than infrastructure management.

While Lambda excels at large multi-GPU clusters for enterprise training workloads, Thunder Compute delivers superior value for the majority of AI development scenarios through lower costs, better developer experience, and more flexible hardware management.

If you need flexible, low-cost A100s with no commitment and simple UX, Thunder Compute is the winner.

FAQ

Q: How much can I save by switching from Lambda to Thunder Compute?

A: Thunder Compute costs roughly 49-56% less than Lambda Labs on the GPU types compared above, with A100 80GB instances at $0.78/hr versus Lambda's $1.79/hr. These savings add up for teams running multiple experiments or keeping development environments online.

Q: What's the main difference between Thunder Compute and Lambda's target users?

A: Lambda focuses on large enterprise clusters and research institutions needing multi-GPU setups, while Thunder Compute targets individual developers, startups, and smaller teams who need affordable, flexible GPU access for development and prototyping work.

Q: Can I change my GPU type without losing my work and environment?

A: Yes, Thunder Compute offers hardware hot-swapping that lets you change GPU types, adjust CPU/RAM, or modify storage without rebuilding your environment. Your files, installed packages, and development state persist through hardware changes thanks to persistent storage.

Q: How does the VS Code integration actually work?

A: Thunder Compute provides a VS Code extension that opens your GPU instance as a remote workspace directly in your editor. You can edit code, run notebooks, and manage files on the cloud GPU as if they were local, eliminating the need for browser-based development or manual SSH setup.

Q: When should I choose Lambda over Thunder Compute?

A: Choose Lambda if you need more than 4 GPUs in a single instance (Thunder Compute tops out at 4), large multi-node clusters, or ultra-high-bandwidth InfiniBand networking for distributed training, or if you have enterprise-specific requirements that justify premium pricing for maximum interconnect performance.

Final thoughts on choosing between Thunder Compute and Lambda

The math here is pretty straightforward. You're looking at roughly half the GPU cost with Thunder Compute, plus developer tools like VS Code integration and persistent storage by default. Lambda works great if you need massive multi-GPU clusters, but most AI teams benefit more from Thunder's flexible, developer-friendly approach. Your wallet and your workflow will thank you for making the switch.