Is RunPod better than Google Colab Pro?

Both RunPod and Google Colab Pro can handle basic machine learning workloads, but the real differences emerge when you need reliable GPU access for production training or cost-predictable development cycles. Most developers hit frustrating walls with availability issues or confusing pricing models that make project planning nearly impossible. The choice between RunPod and Google Colab Pro often comes down to whether you prioritize hardware variety or ecosystem integration, but neither solves the core reliability problem.

In this guide, you will learn the key differences in GPU availability, pricing structures, and developer experience to help you choose the platform that actually fits your workflow needs.

TLDR:

- RunPod offers hardware variety but suffers from availability issues and complex setup requirements

- Google Colab Pro uses unpredictable compute units that make cost planning nearly impossible

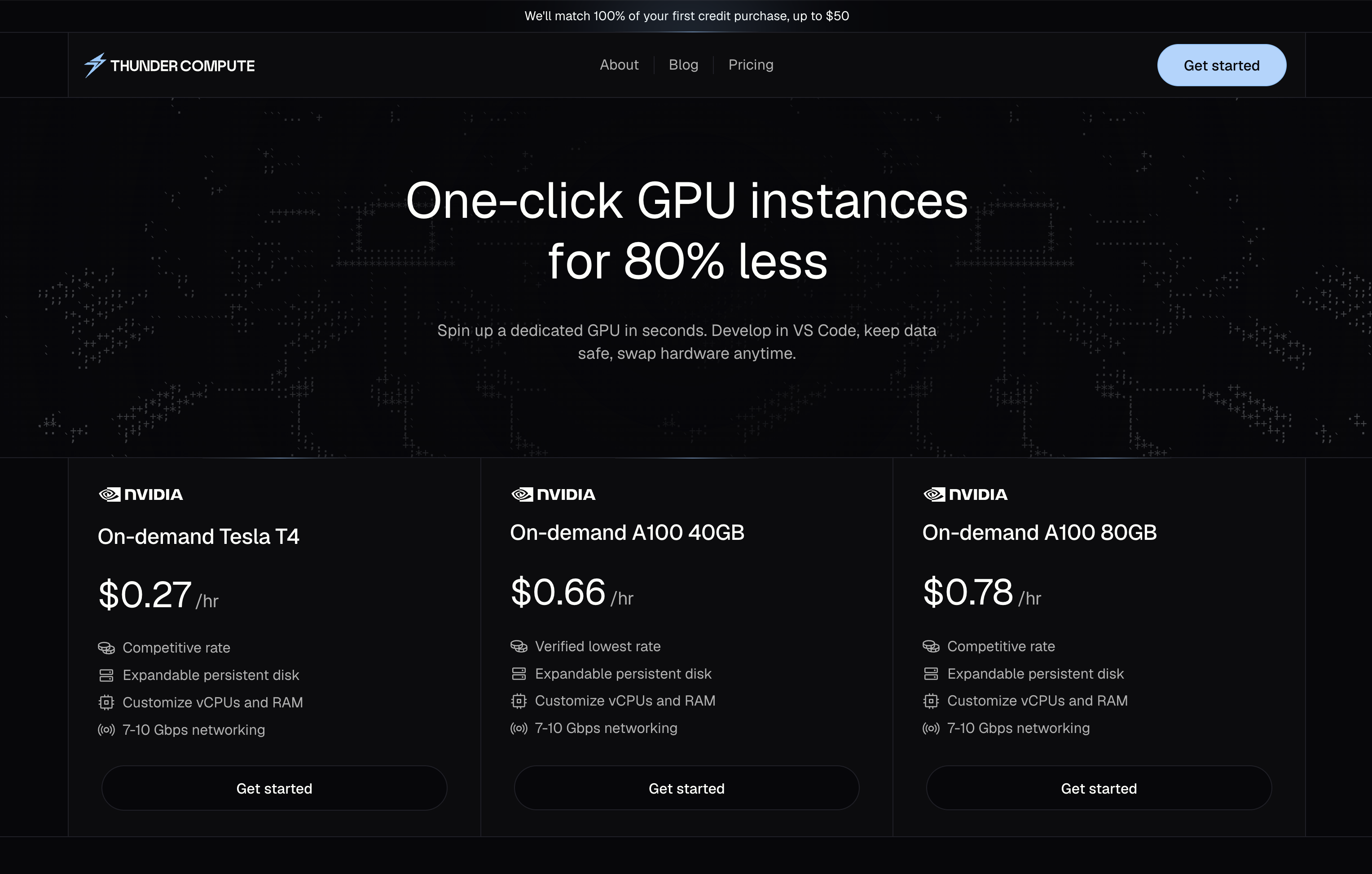

- Thunder Compute provides guaranteed GPU access with A100s starting at $0.66/hr (40GB) vs. RunPod's rates from $1.10/hr

- Thunder Compute offers native VS Code integration and one-click deployment without setup complexity

- Both RunPod and Google Colab Pro lack the reliable resource guarantees that Thunder Compute delivers consistently

What RunPod Does and Its Approach

RunPod is an end-to-end cloud platform that provides access to powerful, on-demand GPU computing resources specifically for training, fine-tuning, and deploying AI models. Its approach is focused on developer-friendliness, flexibility, and offering an alternative to more complex and expensive traditional cloud providers.

Their core offering includes:

- GPU Pods: Customizable, containerized GPU instances that give developers full control over their environment, including software and networking. Pods can be set up as persistent or ephemeral to match workload needs.

- Serverless GPUs: A pay-per-second, autoscaling service for AI model inference. The platform automatically manages container workers and can scale instantly from zero to thousands of workers to handle demand, which is ideal for "bursty" workloads. FlashBoot technology allows for cold-start times of less than 200 milliseconds.

- RunPod Hub: A centralized hub featuring pre-configured templates for popular AI projects like Stable Diffusion, Large Language Models (LLMs), and video generation. This allows developers to deploy complex models in a single click, without manual configuration.

- Bare Metal: For users who require direct access to hardware and maximum performance, RunPod offers dedicated bare metal GPU clusters with no virtualization overhead.

While RunPod offers flexibility, managing several deployment models and working around uncertain GPU availability can significantly slow down development workflows.

RunPod excels in hardware variety but falls short on ease of use. Thunder Compute shines in that area with its simplified pricing model and guaranteed GPU access.

What Google Colab Pro Does and Its Approach

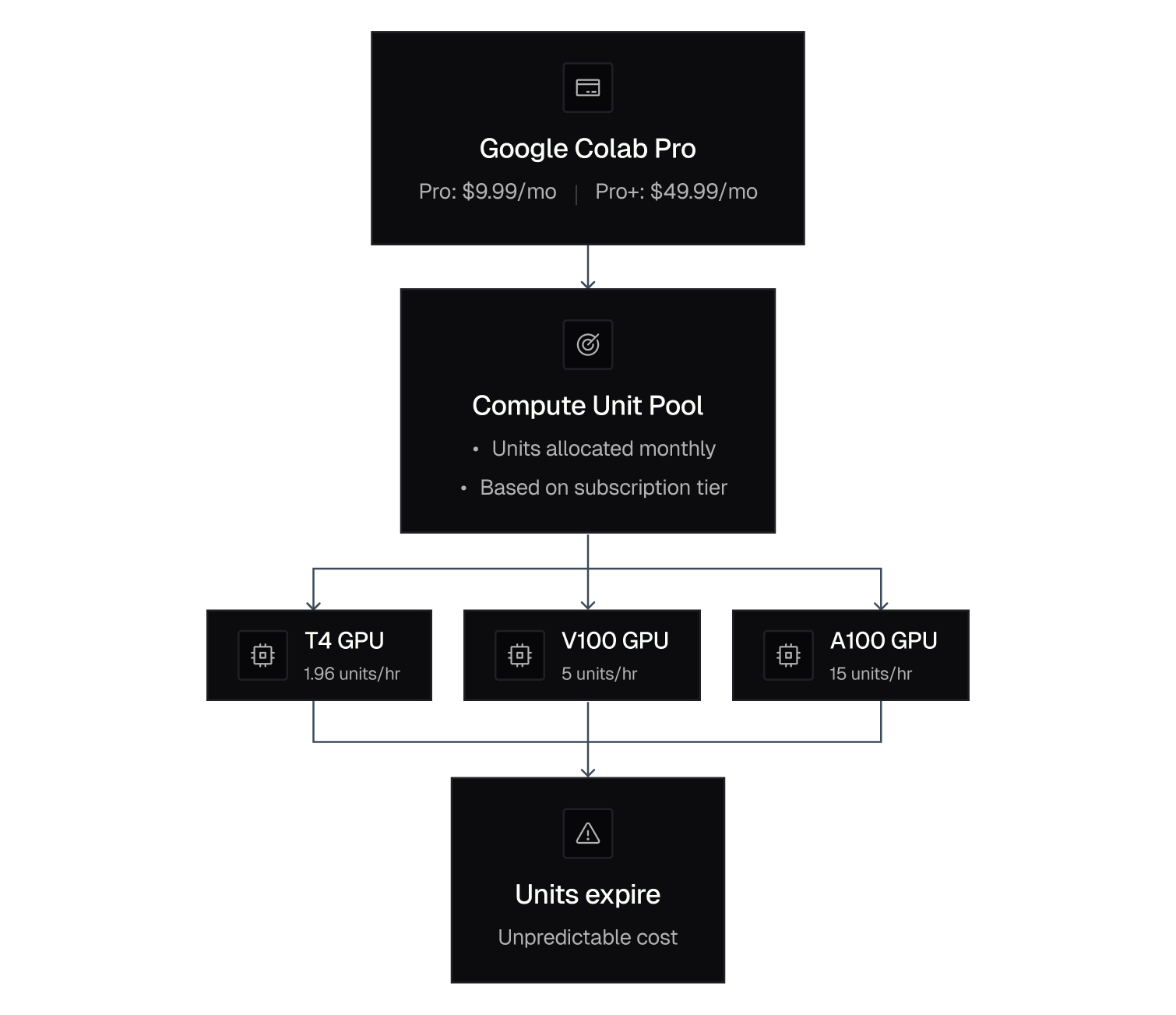

Google Colab Pro enhances the free Colab service through a subscription model that uses compute units for GPU access. Each compute unit costs $0.10, and different GPU types consume varying amounts per hour.

The pricing structure works like this: T4 GPUs consume around 1.6 to 1.96 units per hour (offering about 51 to 62 hours of use with 100 units), V100s require 5 units per hour (about 20 hours of use), and A100s demand 10–15 units per hour (about 6 to 10 hours total) depending on the configuration and measurement source.
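To make the arithmetic concrete, here is a minimal Python sketch that converts a compute-unit balance into hours of GPU time. The burn rates are the approximate figures quoted above, not an official Google rate card, and `UNITS_PER_HOUR` and `hours_from_units` are illustrative names, not part of any Colab API.

```python
# Approximate compute-unit burn rates quoted in this article (units per hour).
# Assumption: these are estimates, not an official Google rate card.
UNITS_PER_HOUR = {
    "T4": (1.6, 1.96),
    "V100": (5.0, 5.0),
    "A100": (10.0, 15.0),
}


def hours_from_units(gpu: str, units: float) -> tuple[float, float]:
    """Return (min_hours, max_hours) that a unit balance lasts on one GPU type."""
    low_rate, high_rate = UNITS_PER_HOUR[gpu]
    # The faster burn rate exhausts the balance sooner, so it gives the minimum.
    return units / high_rate, units / low_rate


for gpu in UNITS_PER_HOUR:
    min_h, max_h = hours_from_units(gpu, 100)
    print(f"{gpu}: {min_h:.1f} to {max_h:.1f} hours from 100 units")
```

With 100 units this prints roughly 51 to 62 hours for a T4, 20 hours for a V100, and about 6.7 to 10 hours for an A100, matching the ranges above.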

You can buy compute units via "Pay As You Go" as well as through a Pro subscription ($9.99/month for 100 units) or Pro+ ($49.99/month for 500 units).

This compute unit system creates unpredictable costs. You might burn through your units faster than expected if assigned a more powerful GPU like the A100, or slower if you get a T4 when you need higher performance.
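Because the burn rate depends on which GPU you were assigned, it helps to check before starting a long run. A minimal sketch, assuming an NVIDIA driver is present so `nvidia-smi` is on the PATH (as it is on Colab GPU runtimes): it reads the GPU name via `nvidia-smi --query-gpu=name --format=csv,noheader` and maps it to the article's approximate burn rates. The helper names are hypothetical, and the substring match on the model number is a simplification.

```python
import shutil
import subprocess
from typing import Optional

# Approximate burn rates quoted in this article (units per hour); not official.
UNITS_PER_HOUR = {"T4": (1.6, 1.96), "V100": (5.0, 5.0), "A100": (10.0, 15.0)}


def assigned_gpu_names() -> list[str]:
    """Return GPU names reported by nvidia-smi, or [] if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


def burn_rate_for(gpu_name: str) -> Optional[tuple[float, float]]:
    """Match a reported name such as 'Tesla T4' to a (low, high) units/hour range."""
    for model, rate in UNITS_PER_HOUR.items():
        if model in gpu_name:  # simple substring match on the model number
            return rate
    return None  # GPU type not covered by the article's figures


for name in assigned_gpu_names():
    print(f"{name}: {burn_rate_for(name)} units/hour")
```

Running this at the top of a notebook makes an unexpected A100 assignment visible before it eats into your balance.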

Google's approach focuses on integration with its ecosystem. Your notebooks sync with Google Drive, and you get smooth access to other Google services. However, this convenience comes with limitations in customization and control over your environment.

Colab Pro's limitations become clear when you exhaust compute units mid-project. Thunder Compute eliminates this uncertainty with straightforward pricing that doesn't require subscription juggling. For more options, see our article on Colab alternatives.

GPU Selection and Availability

RunPod wins on hardware variety, offering everything from older K80s to cutting-edge H100s and RTX 4090 cards. You get dedicated access to whatever GPU you select, assuming it's available in their network.

The catch? Availability isn't guaranteed. Popular GPUs like A100s or H100s can be scarce during peak demand, leaving you waiting or settling for less powerful alternatives.

Google Colab Pro takes a different approach, with a smaller, fixed menu of T4, V100, and A100 GPUs. Resources are shared, and there's no guarantee you'll get the GPU you want: the service assigns hardware based on availability and your compute unit balance.

Neither service offers true guaranteed access. Colab Pro's documentation clearly states that even paid subscriptions don't guarantee compute resources.

| Service | GPU Options | Guaranteed Access | Dedicated Resources |
|---|---|---|---|
| RunPod | K80 to H100, RTX, A100, plus consumer/server GPUs | No (varies by mode; full-dedicated available if chosen) | Yes (dedicated when chosen, otherwise community/shared) |
| Colab Pro | T4, V100, A100 | No | No (resources are always shared and not guaranteed) |
| Thunder Compute | T4 to H100 | Yes | Yes (all rented GPUs are dedicated) |

Thunder Compute solves the availability problem with guaranteed GPU access and transparent pricing across our entire fleet. When you need an A100 for deep learning, you get it immediately.

Pricing Models and Cost Structure

RunPod's pricing is straightforward: H100 80GB starts at $1.99/hr and RTX 4090 from $0.34/hr, billed per second, so you pay only for actual use.
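Per-second billing means the charge scales with exact runtime instead of rounding up to whole hours. A minimal sketch of that arithmetic using the starting rate quoted above; the function names are illustrative, storage and other add-on charges are ignored, and rounding to four decimal places is an assumption for display, not RunPod's invoicing rule.

```python
from decimal import Decimal, ROUND_HALF_UP


def per_second_cost(hourly_rate: str, seconds: int) -> Decimal:
    """Cost when usage is billed by the second at a fixed hourly rate."""
    cost = Decimal(hourly_rate) * seconds / 3600
    # Assumption: round to 1/100 of a cent for display; real invoices may differ.
    return cost.quantize(Decimal("0.0001"), rounding=ROUND_HALF_UP)


def per_hour_cost(hourly_rate: str, seconds: int) -> Decimal:
    """Cost if every started hour were billed in full, shown for contrast."""
    started_hours = -(-seconds // 3600)  # integer ceiling division
    return Decimal(hourly_rate) * started_hours


# A 95-minute job on an H100 at the quoted $1.99/hr starting rate.
job_seconds = 95 * 60
print(per_second_cost("1.99", job_seconds))  # billed by the second
print(per_hour_cost("1.99", job_seconds))    # billed by the started hour
```

The 95-minute job comes to about $3.15 with per-second billing versus $3.98 if each started hour were charged in full. The per-hour figure is only a contrast; it is not how RunPod bills.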

However, real costs can add up. Network storage and some premium Secure Cloud features carry additional charges. Community Cloud pricing is variable, while Secure Cloud rates are higher but more stable.

Google Colab Pro's compute unit system makes cost prediction nearly impossible. T4s use 1.6–1.96 units/hr, V100s 5 units/hr, and A100s 10–15 units/hr, so your spend varies widely depending on GPU assignments.

Subscription fees ($9.99/mo Pro, $49.99/mo Pro+) are just the start. Heavy users often need more compute units at $0.10 per unit, and unused units expire.

If you always get A100s in Colab Pro, you effectively pay $1.00–$1.50/hr (10–15 units at $0.10/unit), and the Pro subscription's 100 monthly units cover only the first 6 to 10 hours. RunPod's A100 pricing starts at $1.64/hr (Community Cloud) and may rise during periods of high demand.

Thunder Compute beats both, with T4s at just $0.27/hr, A100s (80GB) at $0.78/hr, and H100s at $1.47/hr, without subscriptions, compute units, or hidden fees.
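Because Colab Pro's subscription fee is fixed while unit consumption scales with hours, the comparison depends on how much you use. A rough monthly sketch using only the A100 figures quoted in this article; it assumes Colab Pro's 100 included units are consumed first and extra units cost $0.10 each (ignoring pack sizes), and it ignores storage, egress, and the differing memory sizes of each A100 offering. All names are illustrative.

```python
from decimal import Decimal

# Hourly A100 rates quoted in this article (USD). Assumptions: starting rates
# only, no storage or egress fees, and GPU memory sizes differ between offerings.
RUNPOD_A100 = Decimal("1.64")    # Community Cloud starting rate
THUNDER_A100 = Decimal("0.78")   # 80GB instance
COLAB_PRO_FEE = Decimal("9.99")  # monthly Pro subscription
COLAB_PRO_UNITS = 100            # compute units included with Pro
UNIT_PRICE = Decimal("0.10")     # price per additional compute unit


def colab_pro_monthly(hours: int, units_per_hour: str) -> Decimal:
    """Pro fee plus any extra units needed beyond the 100 included ones."""
    units_needed = hours * Decimal(units_per_hour)
    extra_units = max(Decimal(0), units_needed - COLAB_PRO_UNITS)
    # Simplification: extra units priced individually, ignoring pack sizes.
    return (COLAB_PRO_FEE + extra_units * UNIT_PRICE).quantize(Decimal("0.01"))


def hourly_monthly(hours: int, rate: Decimal) -> Decimal:
    """Monthly cost for a service billed purely by usage time."""
    return (hours * rate).quantize(Decimal("0.01"))


for hours in (5, 40, 100):
    print(
        f"{hours:>3} h | Colab Pro {colab_pro_monthly(hours, '10')}"
        f" to {colab_pro_monthly(hours, '15')}"
        f" | RunPod {hourly_monthly(hours, RUNPOD_A100)}"
        f" | Thunder {hourly_monthly(hours, THUNDER_A100)}"
    )
```

At 40 hours a month, for example, this gives $39.99 to $59.99 on Colab Pro, $65.60 on RunPod, and $31.20 on Thunder Compute; at very light usage the fixed Colab fee dominates.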

Developer Experience and Workflow Integration

RunPod provides Docker container support and multiple deployment options, but setting it up requires technical expertise. You'll need to configure environments, manage storage, and handle networking yourself.

The service offers many templates and pre-built containers, which help reduce setup time. However, connecting to your preferred development tools requires manual configuration and SSH knowledge.
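For reference, connecting VS Code to a remote GPU machine usually goes through the Remote-SSH extension, which reads host aliases from your OpenSSH config file. A minimal sketch that renders such an entry; the alias, IP address (a documentation-only placeholder), port, user, and key path are all hypothetical, so copy the real values from your pod's connection details.

```python
def ssh_config_entry(alias: str, host: str, port: int, user: str, key_path: str) -> str:
    """Render an OpenSSH config block that VS Code Remote-SSH can list by alias."""
    lines = [
        f"Host {alias}",
        f"    HostName {host}",
        f"    Port {port}",
        f"    User {user}",
        f"    IdentityFile {key_path}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical values: 203.0.113.10 is a documentation-only address.
entry = ssh_config_entry("gpu-pod", "203.0.113.10", 22022, "root", "~/.ssh/id_ed25519")
print(entry)
```

Appending the printed block to ~/.ssh/config makes gpu-pod appear in VS Code's "Remote-SSH: Connect to Host..." list, and the same alias works as `ssh gpu-pod` from a terminal.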

Google Colab Pro excels in simplicity with its browser-based notebook environment. Everything runs in your browser, and Google Drive is automatically integrated, providing easy sharing features.

The downside is limited customization options and no access to a full development environment. You're stuck with Jupyter notebooks and can't use your preferred IDE or development tools.

RunPod connects to multiple cloud storage providers, including Google Cloud Storage, AWS S3, Azure, and Dropbox. However, this flexibility comes at the cost of complexity in setup and management.

Thunder Compute delivers the best developer experience with native VS Code integration and one-click deployment.

You get persistent storage, snapshot features, and the ability to pause and resume instances without losing work. This makes Thunder Compute ideal for startups and growing teams that need reliable, easy-to-use GPU access.

Thunder Compute as a Better Choice

Both RunPod and Google Colab Pro have fundamental limitations that make them frustrating for serious ML work. RunPod's availability issues and complex setup requirements slow down development, while Colab Pro's compute unit system creates unpredictable costs and limited control.

Thunder Compute tackles these pain points directly. We offer the lowest GPU pricing in the market, with guaranteed resource access and transparent billing. There are no compute units to manage, no availability uncertainty, and no complex setup procedures.

Our VS Code integration means you can develop on remote GPUs as naturally as working locally. Persistent storage keeps your work safe, and our snapshot feature lets you save and restore entire environments instantly.

The reliability difference matters for production workloads. While other services might terminate your instances or run out of capacity, Thunder Compute provides enterprise-grade uptime with startup-friendly pricing.

We've built our infrastructure for ML developers who need predictable access to powerful hardware without the overhead of traditional cloud providers. Across the pricing, availability, and developer-experience comparisons above, Thunder Compute consistently comes out ahead.

FAQs

Q: How do I avoid unexpected costs when choosing between RunPod and Colab Pro?

A: RunPod bills per second with transparent hourly rates, but watch for additional charges like network storage and data transfer fees. Colab Pro's compute unit system makes costs unpredictable since different GPUs consume units at varying rates, and unused units expire.

Q: Can I get guaranteed GPU access with either RunPod or Colab Pro?

A: No, neither service guarantees GPU availability. RunPod's popular GPUs like A100s can be scarce during peak demand, and Colab Pro explicitly states that even paid subscriptions don't guarantee compute resources.

Q: When should I consider switching from Colab Pro to a dedicated GPU service?

A: If you're consistently burning through compute units quickly, need specific GPU types for your workloads, or require more control over your development environment beyond Jupyter notebooks, a dedicated service will likely be more cost-effective and reliable.

Final thoughts on RunPod versus Google Colab Pro

The GPU access struggle is real when you're trying to get actual work done. RunPod gives you hardware variety but leaves you guessing on availability, while Colab Pro's compute unit system turns budgeting into a guessing game. Thunder Compute eliminates both headaches with guaranteed A100 access starting at $0.66/hr (40GB) and native VS Code integration that works perfectly. Your time is better spent building models than wrestling with infrastructure.